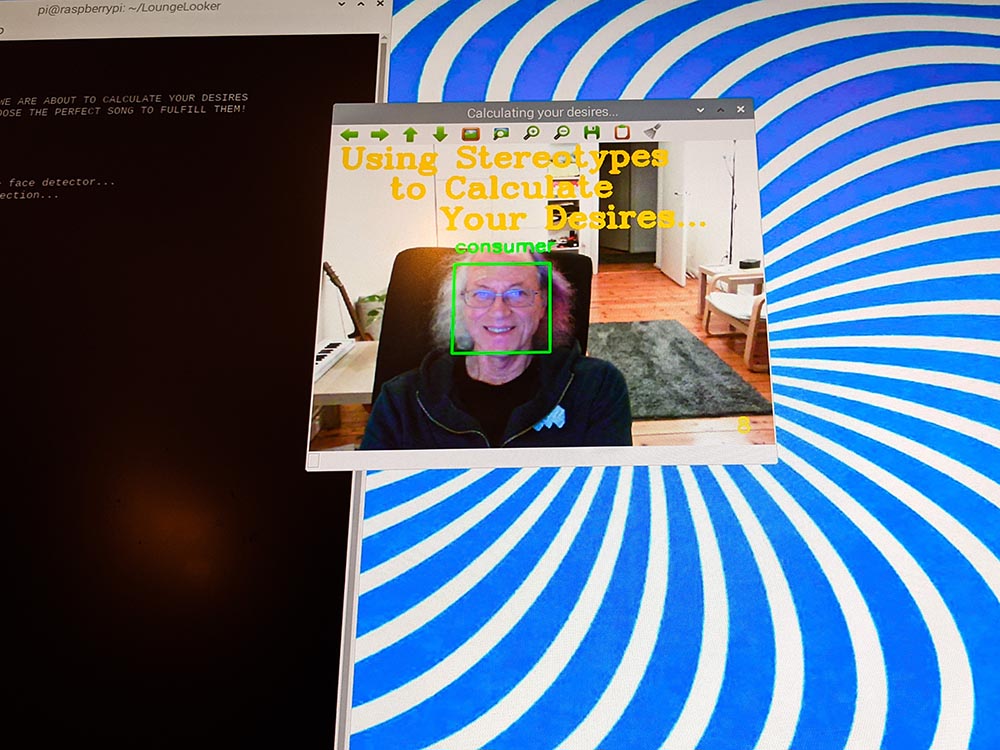

I developed this project as Artist-in-Residence with Monochrom/Q21 at MuseumsQuartier in Vienna in August and September 2021, and presented it at Roboexotica as part of Ars Electronica in September 2021.

This project uses:

- Raspberry Pi 4 Model B 8GB (with cooling fan added)

- 3 ArduTouch music synthesizer kits

- 3 FTDI-equivalent USB-Serial Cables

- 4-port USB hub

- OpenCV computer vision software

- eSpeak text-to-speech engine

- 4-channel stereo audio mixer, stereo audio amp/speakers
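As a rough illustration of how the text-to-speech part of this parts list might be driven from Python on the Raspberry Pi, here is a minimal sketch. It is not taken from the project's code: it assumes eSpeak is installed as the `espeak` command-line program and uses its `-v` (voice) and `-s` (speed in words per minute) options. The helper names `build_espeak_command` and `speak` are hypothetical.

```python
import shutil
import subprocess


def build_espeak_command(text, words_per_minute=150, voice="en"):
    """Build the argument list for one eSpeak call (hypothetical helper)."""
    # -v selects the voice, -s sets the speaking speed in words per minute.
    return ["espeak", "-v", voice, "-s", str(words_per_minute), text]


def speak(text, **options):
    """Speak `text` through eSpeak; return False if espeak is not installed."""
    if shutil.which("espeak") is None:
        return False  # assumption: the binary is called "espeak" and is on PATH
    subprocess.run(build_espeak_command(text, **options), check=False)
    return True
```

Calling `speak("Hello, I can see you.", words_per_minute=120)` would then send the phrase to the audio output, which in this setup feeds the mixer and speakers.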

Here is a 15-minute video describing the project, and giving a demo of it in action:

https://youtu.be/23EKJEPwm7c

I am thankful for the super tutorial on installing OpenCV from Q-engineering.

I am also super grateful for the great set of tutorials on using OpenCV for facial recognition on Raspberry Pi from Adrian Rosebrock.

And many thanks to the developers of the eSpeak text-to-speech engine.

Also, thanks for the great support from Monochrom and Q21 at the MuseumsQuartier in Vienna.

==================================================

==================================================

This project is Open Hardware

This work is licensed under the

Creative Commons Attribution-ShareAlike 4.0

CC BY-SA 4.0

To view a copy of this license, visit

https://creativecommons.org/licenses/by-sa/4.0/

==================================================

==================================================