This repository implements our ICRA 2022 paper:

Recursive Feasibility Guided Optimal Parameter Adaptation of Differential Convex Optimization Policies for Safety-Critical Systems

https://ieeexplore.ieee.org/abstract/document/9812398

Authors: Hardik Parwana and Dimitra Panagou, University of Michigan

An arXiv version can be found at https://arxiv.org/abs/2109.10949

Note: this repo is under development. While all the relevant code is present, we will work on making it more readable and customizable soon. Stay tuned!

We pose the question:

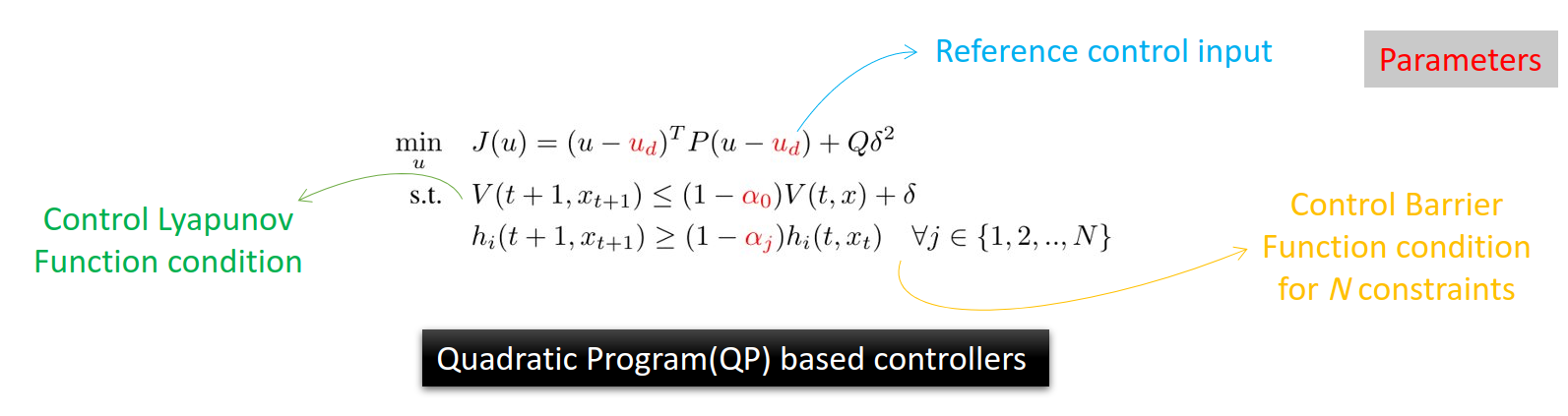

What is the best we can do with a given parametric controller? How should its parameters be adapted in the face of different external conditions? What if the controller is itself an optimization problem? Can we maintain feasibility over a horizon if we change the parameters? In other words, how do we relate (optimization-based) state-feedback controllers, which depend only on the current state, to their long-term performance?

We propose a novel combination of backpropagation through dynamical systems and FSQP (Feasible Sequential Quadratic Programming) algorithms to update the parameters of the QP controller so that:

- Performance is improved, with guarantees of a feasible trajectory over the same time horizon.

- The horizon over which the QP controller remains feasible is increased compared to its previous value.
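The first bullet (improve performance while keeping the trajectory feasible over the same horizon) can be illustrated on a toy problem. The block below is a minimal sketch under stated assumptions, not the repository's implementation: the 1D double-integrator system, the two class-K gains `p1`, `p2` of a higher-order CBF, the reward, and all function names are hypothetical. Central finite differences stand in for backpropagation through the dynamics, and a feasibility-preserving backtracking line search stands in for FSQP.

```python
# Toy sketch (hypothetical; not the repository's car model or its FSQP solver).
# System: 1D double integrator x'' = u that must stay behind a wall at x = d.
# Policy: the scalar CBF-QP
#     min_u (u - u_ref)^2  s.t.  u <= -(p1 + p2) v + p1 * p2 * (d - x),  |u| <= u_max
# whose parameters are the two (hypothetical) class-K gains p = [p1, p2].


def qp_control(x, v, p1, p2, u_ref=1.0, u_max=2.0, d=10.0):
    """Closed-form solution of the scalar CBF-QP; None if it is infeasible."""
    ub = -(p1 + p2) * v + p1 * p2 * (d - x)  # higher-order CBF bound on u
    hi = min(u_max, ub)
    if hi < -u_max:  # the feasible set [-u_max, hi] is empty
        return None
    return min(max(u_ref, -u_max), hi)  # project u_ref onto [-u_max, hi]


def rollout(p, x0=0.0, v0=0.0, dt=0.05, steps=200):
    """Euler-simulate the closed loop; return (reward, feasible over horizon)."""
    x, v, reward = x0, v0, 0.0
    for _ in range(steps):
        u = qp_control(x, v, p[0], p[1])
        if u is None:
            return reward, False
        x, v = x + v * dt, v + u * dt
        reward += x * dt  # performance: reward progress toward the wall
    return reward, True


def reward_gradient(p, eps=1e-4):
    """Central finite differences, standing in for backprop through the rollout."""
    g = []
    for i in range(len(p)):
        up, down = list(p), list(p)
        up[i] += eps
        down[i] -= eps
        g.append((rollout(up)[0] - rollout(down)[0]) / (2 * eps))
    return g


def feasible_step(p, lr=0.1, max_halvings=20):
    """One gradient-ascent step, accepted only if the new parameters keep the
    QP feasible over the same horizon and do not decrease the reward."""
    r0, ok = rollout(p)
    if not ok:
        raise ValueError("start from parameters that are feasible over the horizon")
    g = reward_gradient(p)
    step = lr
    for _ in range(max_halvings):
        cand = [pi + step * gi for pi, gi in zip(p, g)]
        r, feasible = rollout(cand)
        if feasible and r >= r0:
            return cand, r
        step /= 2  # backtrack toward the current (feasible) parameters
    return p, r0  # no acceptable step found: keep the current parameters


if __name__ == "__main__":
    p = [1.0, 1.0]
    for it in range(5):
        p, r = feasible_step(p)
        print(f"iter {it}: p = {[round(pi, 3) for pi in p]}, reward = {r:.3f}")
```

The acceptance test makes every iterate feasible over the full horizon by construction; a real FSQP method instead computes a search direction that keeps the constraints satisfied, rather than relying on step halving.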

The code was run on Ubuntu 20 with Python 3.6 and the following packages:

- cvxpy==1.1.14

- cvxpylayers==0.1.5

- torch==1.9.0

- matplotlib==3.3.4

- numpy==1.19.5

In addition to the above dependencies, run `source export_setup.sh` from the main folder to set the paths required to access the submodules.

To examine how the car behaves with different parameter values, see the example notebook `car_example.ipynb`.

To run our algorithm for increasing the horizon over which the QP controller remains feasible, run the following command. You should see the time at which the QP becomes infeasible increase.

`python QPpolicy/QPpolicyTorchCar.py`

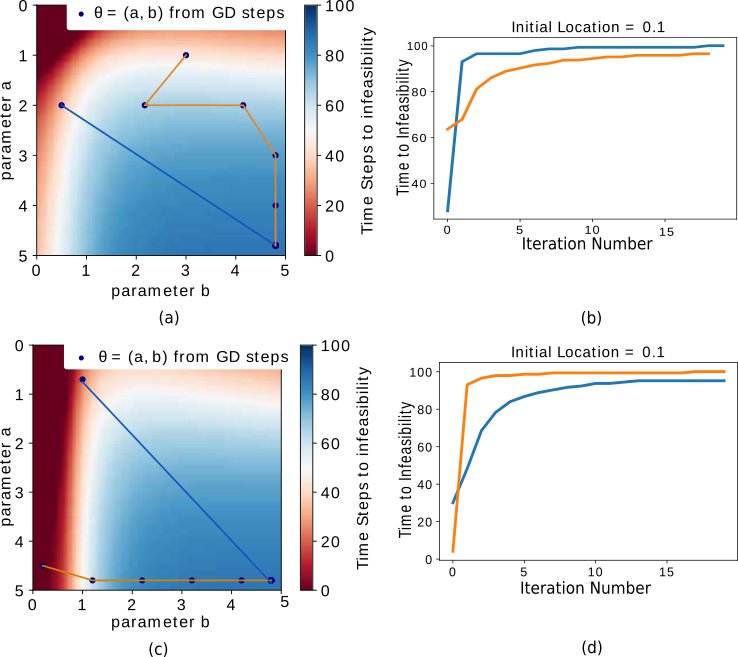
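To make "the time at which the QP fails" concrete, the sketch below measures the feasible horizon of a parametric QP controller, i.e. the first simulation step at which its feasible set becomes empty. It is a toy example under stated assumptions, not the repository's code: the double-integrator system, the wall position, the gains `p1`, `p2`, and the functions `qp_control` and `feasible_horizon` are all hypothetical.

```python
# Toy sketch (hypothetical system and names, not the repository's code):
# measure how many steps a parametric CBF-QP controller stays feasible.


def qp_control(x, v, p1, p2, u_ref=1.0, u_max=2.0, d=10.0):
    """Closed-form scalar CBF-QP for x'' = u with a wall at x = d; None if infeasible."""
    ub = -(p1 + p2) * v + p1 * p2 * (d - x)  # higher-order CBF bound on u
    hi = min(u_max, ub)
    if hi < -u_max:  # input bounds and CBF constraint cannot both hold
        return None
    return min(max(u_ref, -u_max), hi)  # project u_ref onto [-u_max, hi]


def feasible_horizon(p1, p2, x0=0.0, v0=0.0, dt=0.05, steps=400):
    """Return the first step at which the QP is infeasible (steps if never)."""
    x, v = x0, v0
    for k in range(steps):
        u = qp_control(x, v, p1, p2)
        if u is None:
            return k
        x, v = x + v * dt, v + u * dt  # explicit Euler step
    return steps


if __name__ == "__main__":
    for gains in [(1.0, 1.0), (2.0, 2.0), (5.0, 5.0)]:
        k = feasible_horizon(*gains)
        print(f"p = {gains}: QP feasible for {k} steps ({k * 0.05:.2f} s)")
```

In this toy setting, conservative gains such as (1, 1) start braking early and stay feasible for the whole 400-step horizon, while aggressive gains such as (5, 5) brake too late and the QP becomes infeasible before the horizon ends.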

The following figures show how the parameters change with each step of the proposed gradient descent (GD):

| a = 2.5, b = 4.5 | a = 1.0, b = 3.0 | a = 3.0, b = 1.0 |
|---|---|---|

To compare the proposed adaptive parameters against a constant parameter, run the following command:

`python QPpolicy/QPpolicy.py`

| Adaptive Parameters (proposed) | Constant Parameter | Reward Plot |
|---|---|---|