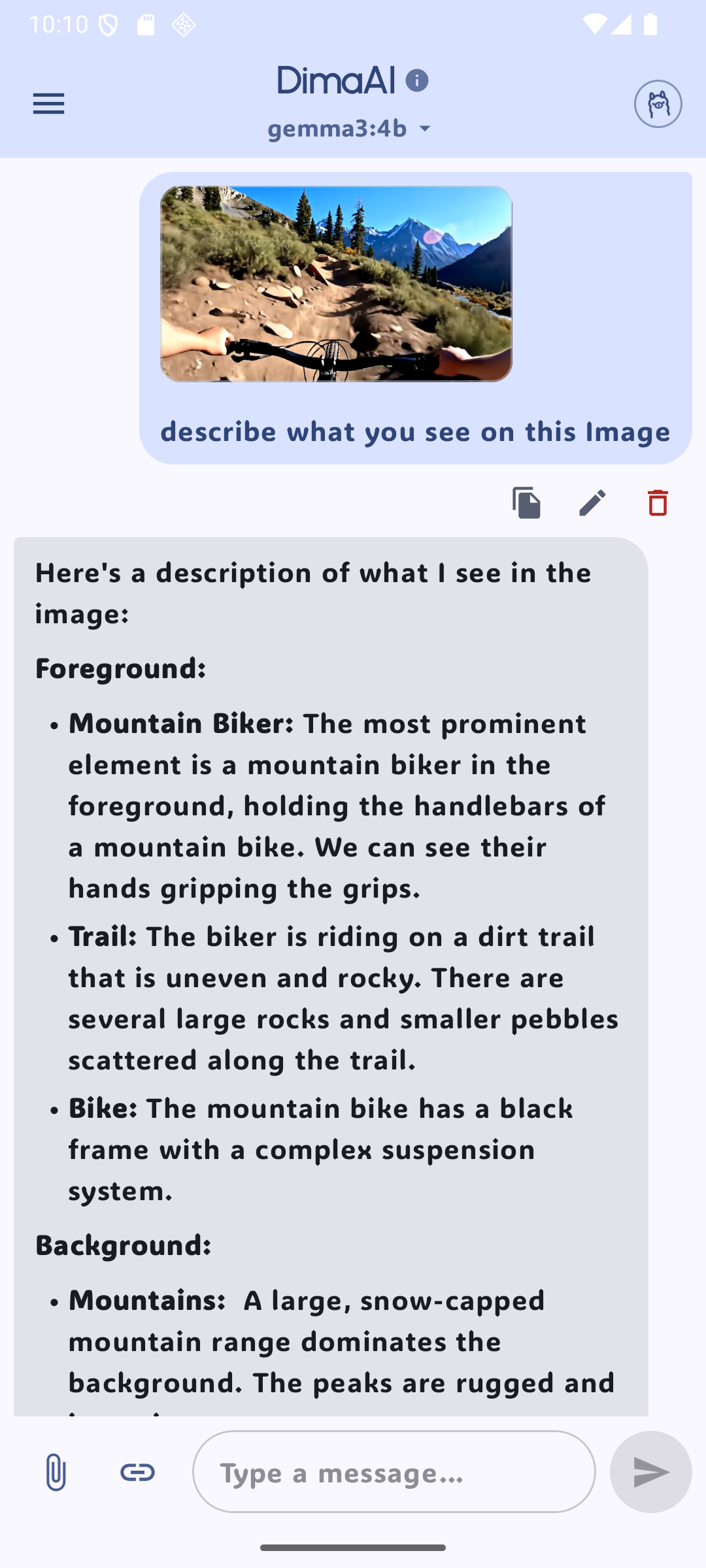

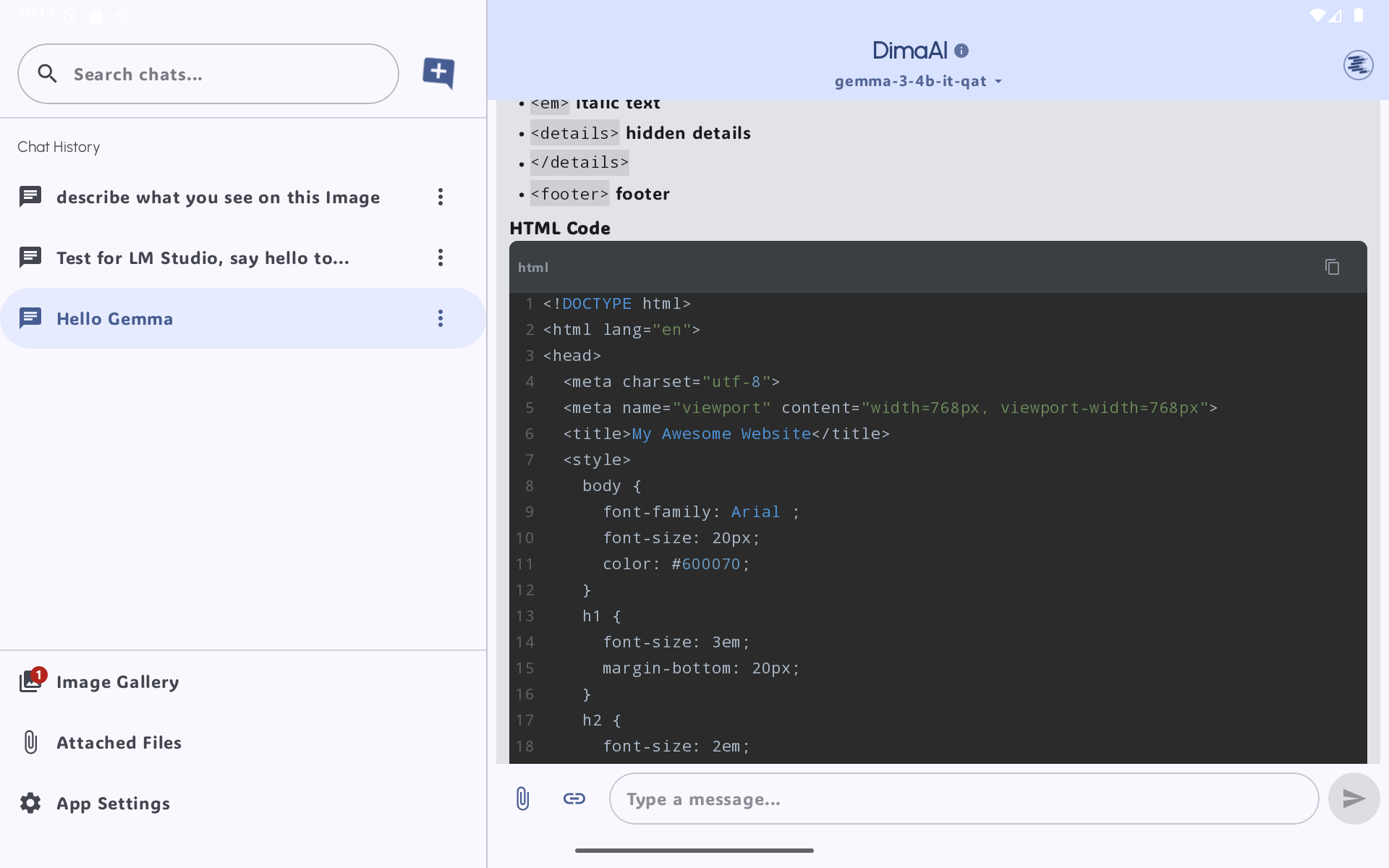

DimaAI is an offline-capable AI chat app built with Jetpack Compose.

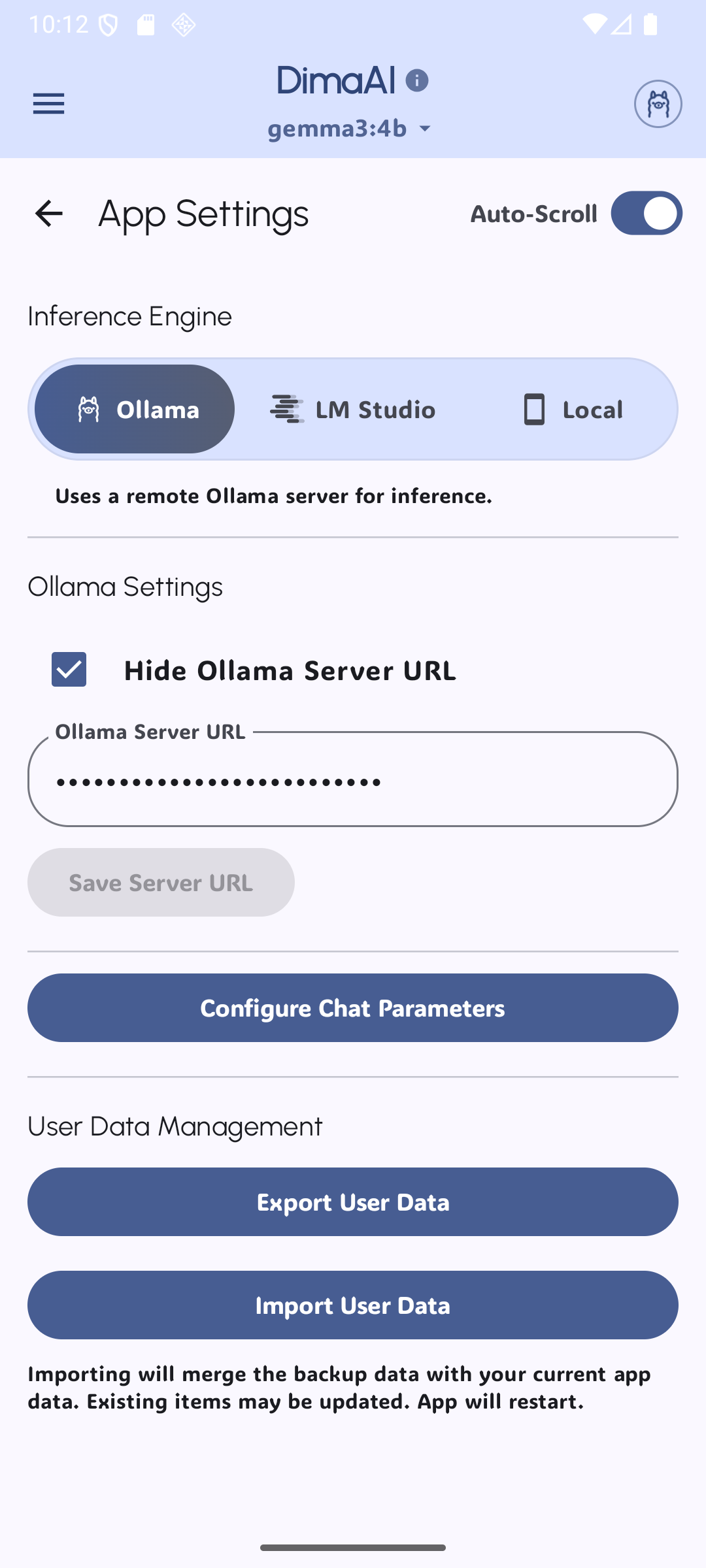

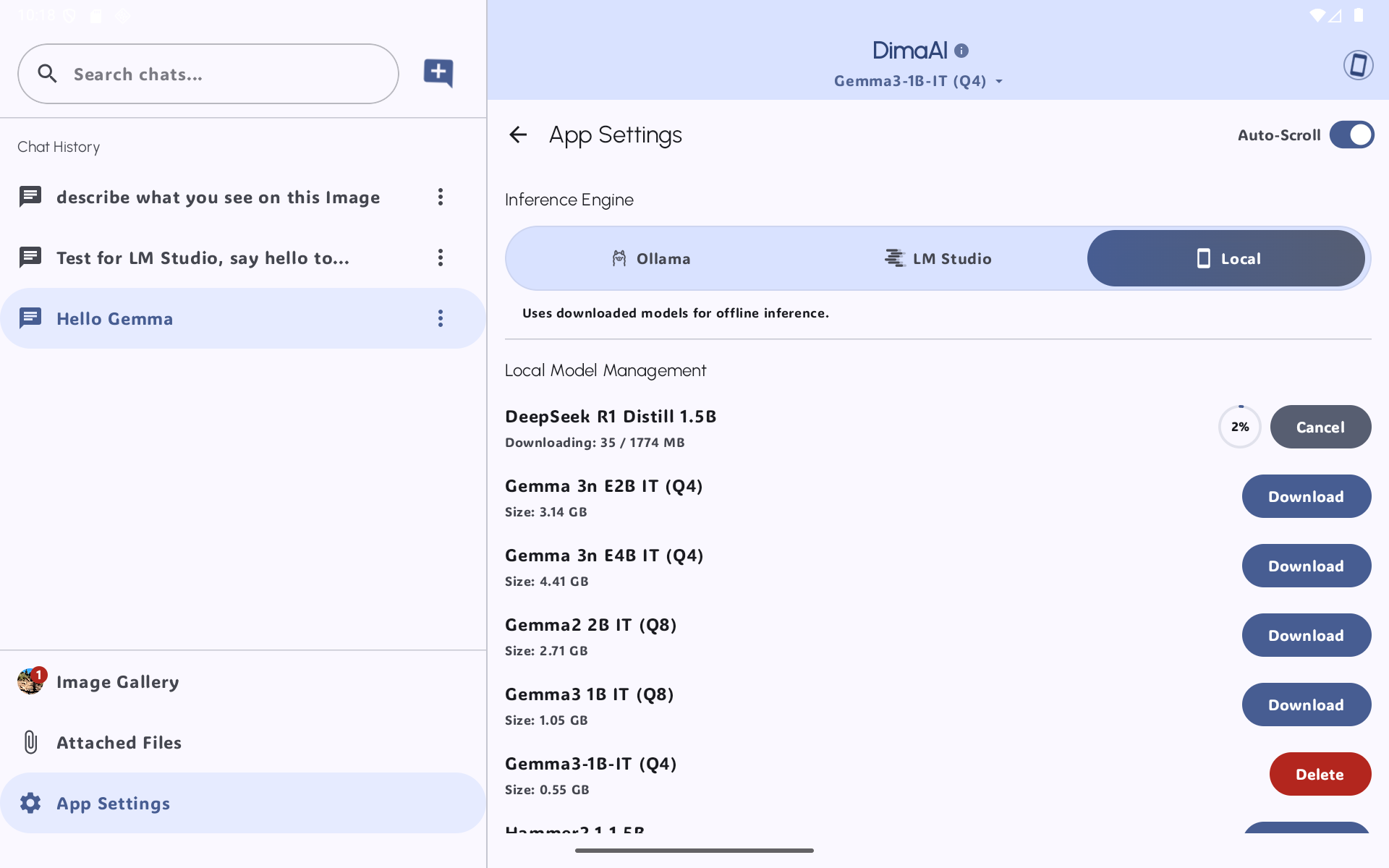

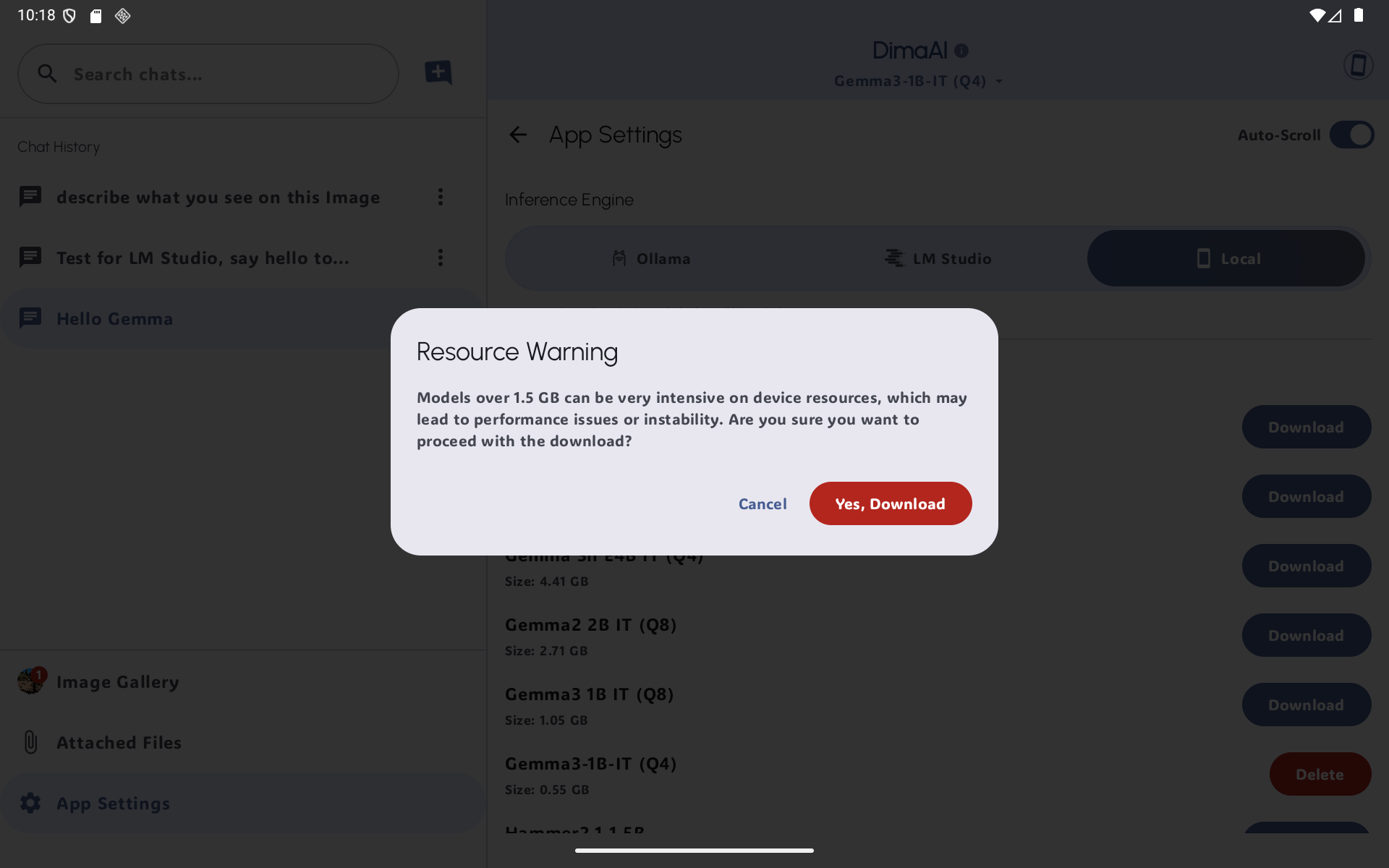

It supports local and self-hosted models via Ollama, LM Studio, or on-device MediaPipe LLMs via downloads, and features rich file support, encrypted access token storage, and advanced model management with WorkManager.

- ✅ Multi-backend LLM support (Ollama, LM Studio, Local)

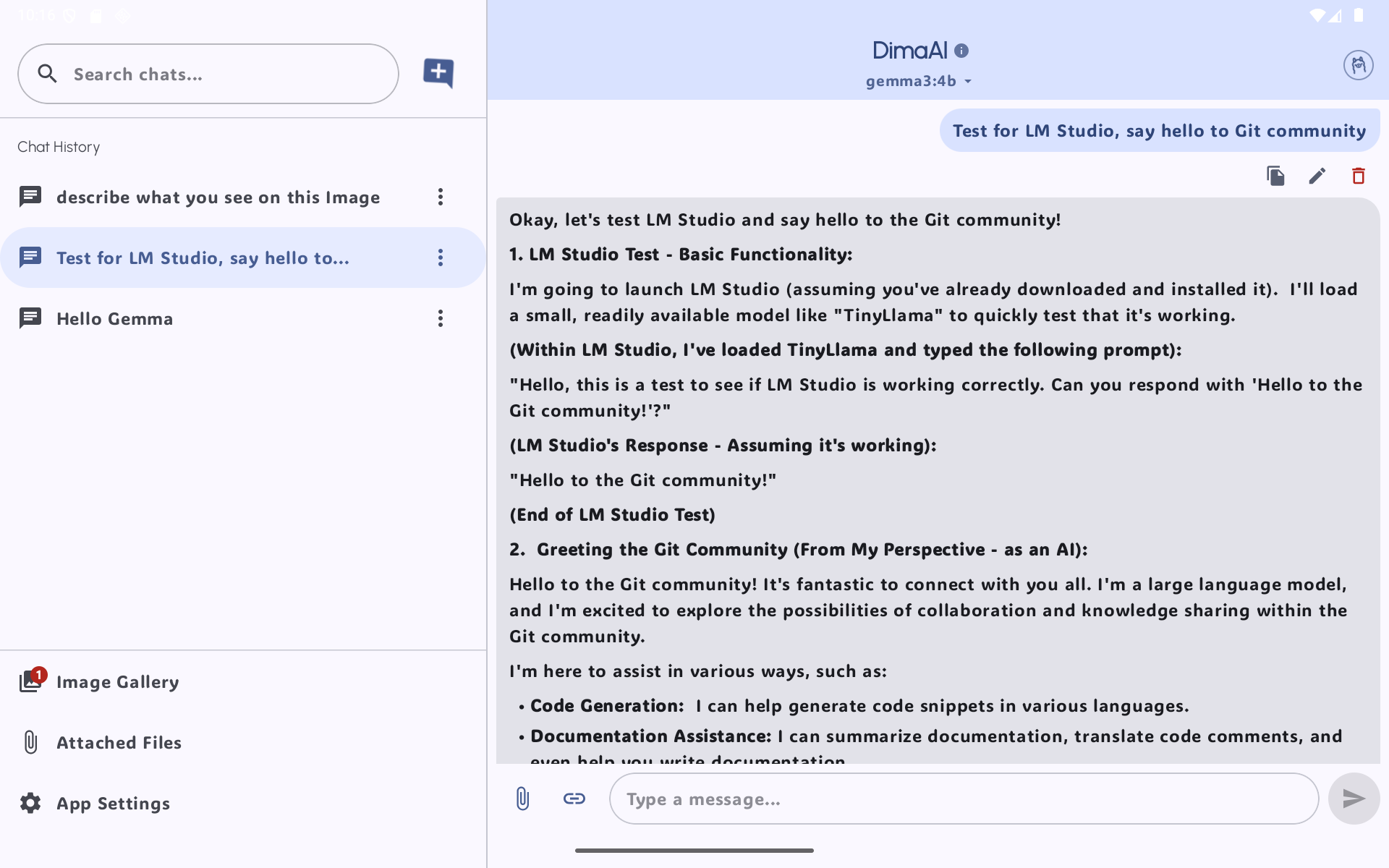

- 🔁 Real-time streaming generation with

Flow-based architecture - 📁 Upload & preview:

.txt,.pdf,.jpg,.png - 🔐 Secure access token encryption with Android Keystore + AES-GCM

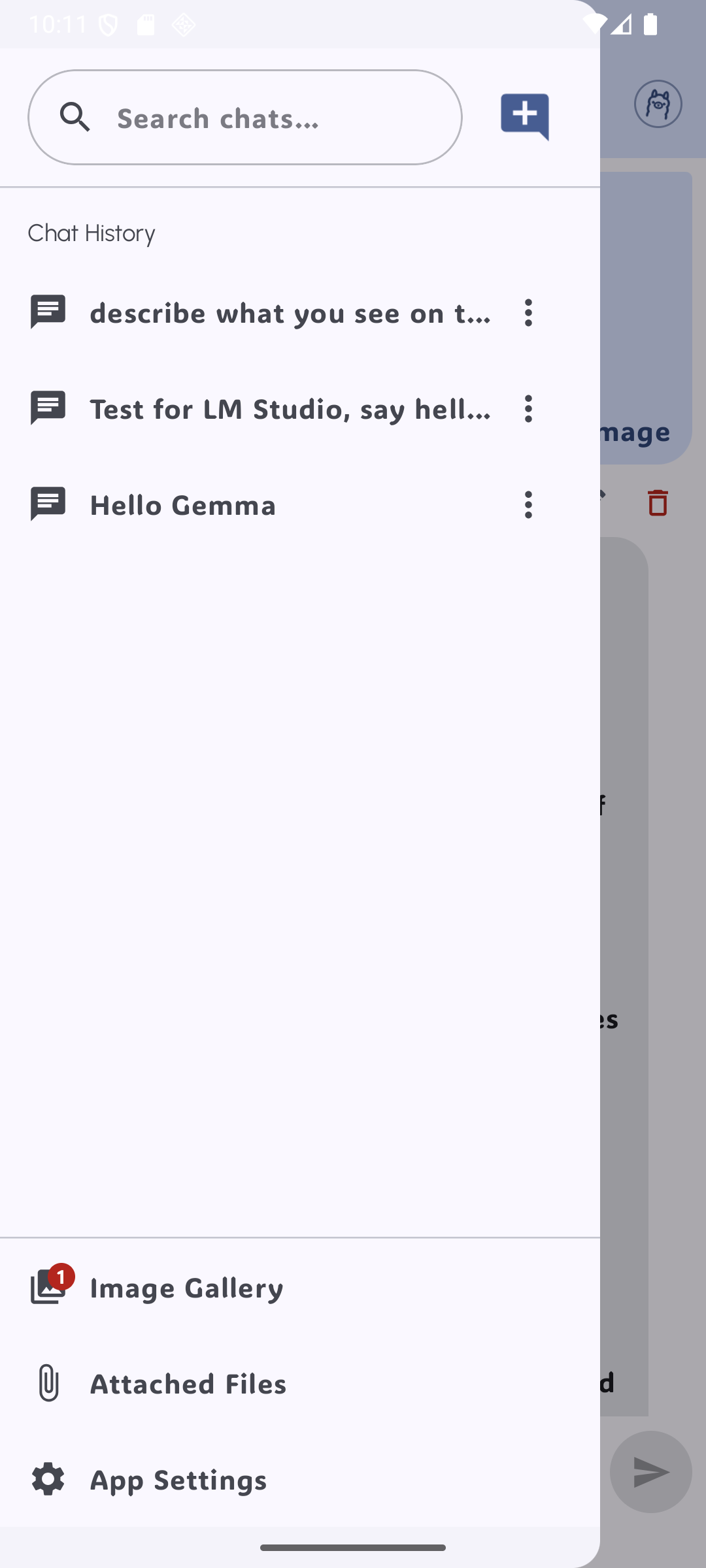

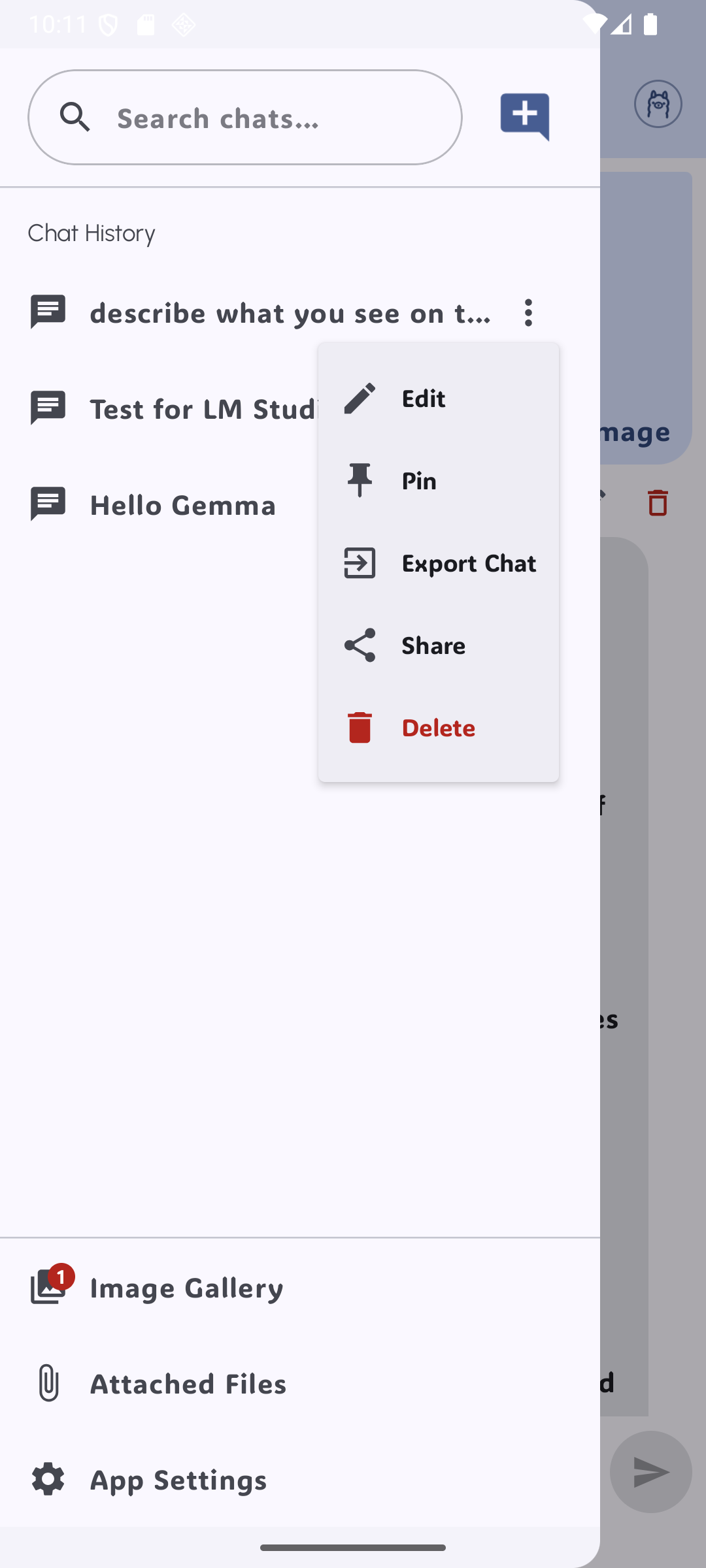

- 💾 Chat history via Room + full backup/import system

- ⚙️ Download & manage local models with WorkManager

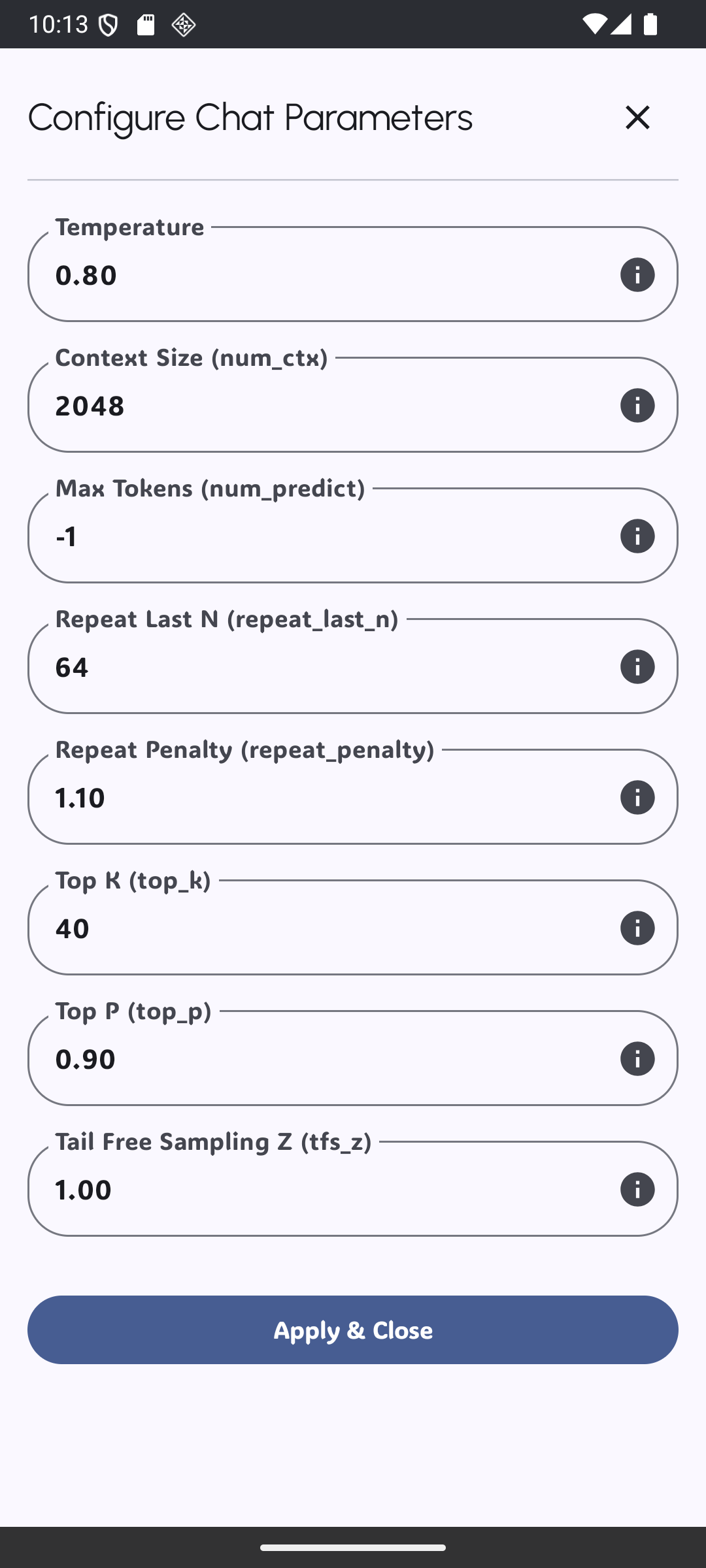

- 🧠 Inference options: temperature, top-k/p, repetition penalty, context size, etc.

- 🌗 Material 3 + dynamic themes

| Engine | Streaming | Model List | Offline? | Notes |

|---|---|---|---|---|

| Ollama | ✅ | ✅ | ✅ | Requires local Ollama server |

| LM Studio | ✅ | ✅ | ✅ | Custom HTTP endpoints |

| LiteRT (On device LLMs) | ✅ | ✅ | ✅ | Fully supported via bundled downloads |

Switch between engines dynamically via Preferences screen.

├── ui/

│ ├── theme/ # Material 3 theming

│ ├── components/ # AppShell, layout containers

│ └── screens/ # Composable screens and ChatViewModel

│

├── data/

│ ├── AppDatabase.kt # Room DB: ChatSession, ChatMessage, LocalModelMetadata

│ ├── ChatDao.kt # DAO interfaces for Room

│ ├── ChatMessage.kt # Message entity (content, files, streaming, etc.)

│ ├── ChatSession.kt # Session entity with UUID and metadata

│ ├── ChatRepository.kt # Core logic for chat, streaming, persistence

│ ├── PreferenceManager.kt # DataStore with encrypted token logic (AES-GCM + Keystore)

│ ├── WorkManagerChatRepository.kt # Wrapper around WorkManager use for streaming/download

│ ├── DownloadRepository.kt # Starts/stops downloads using WorkManager

│ ├── LocalModel.kt # Data model for models.json and downloaded models

│ ├── LocalModelMetadata.kt # DB metadata verification info

│ └── OpenAIModels.kt # DTOs for OpenAI-compatible formats

│

├── domain/

│ ├── AttachmentHandler.kt # Handles PDF, TXT, image extraction

│ ├── LocalModelManager.kt # Syncs local models with metadata and download state

│ └── UserDataManager.kt # Handles user-visible data ops

│

├── workers/

│ ├── ChatStreamingWorker.kt # Background LLM message streaming

│ ├── ChatSyncWorker.kt # Syncs message history

│ ├── DownloadWorker.kt # Downloads and extracts models

│ └── SaveFileWorker.kt # Downloads file attachments

│

├── services/

│ ├── OllamaService.kt # Ollama backend integration

│ ├── LmStudioService.kt # LM Studio backend integration

│ └── LocalModelService.kt # Local inference orchestration

│

├── llm/

│ └── LlmChatModelHelper.kt # Format abstraction for input messages

│

├── network/

│ ├── NetworkModule.kt # Provides Retrofit/Moshi/OkHttp

│ └── LLMService.kt # Base service for streaming/chat

│

├── ChatApplication.kt # Main Application class with WorkManager factory

├── MainActivity.kt # Host activity with AppShell & navigation

├── Constants.kt # All app constants and WorkManager keys

└── models.json # Optional allowlist of downloadable local models

✅ MVVM

✅ DI via AppContainer

✅ Flow-based streaming

✅ WorkManager for reliability

- Android Studio Iguana+

- Minimum SDK: 28 (Android 10.0+)

- Recommended: Android 12+

Add in build.gradle.kts:

// Coil (Image Loading)

implementation(libs.coil.compose)

implementation(libs.coil.gif)

// Datastore

implementation(libs.androidx.datastore.preferences)

// Glide

implementation(libs.glide)

// Google Play - TensorFlow Lite

implementation(libs.play.services.tflite.gpu)

implementation(libs.play.services.tflite.java)

implementation(libs.play.services.tflite.support)

// Local Models / AI

implementation(libs.tasks.genai)

// Moshi (JSON)

implementation(libs.moshi.kotlin)

// Retrofit

implementation(libs.logging.interceptor)

implementation(libs.okhttp)

implementation(libs.retrofit)

implementation(libs.retrofit2.converter.moshi)

implementation(libs.retrofit2.kotlinx.serialization.converter)

implementation(libs.kotlinx.serialization.json)

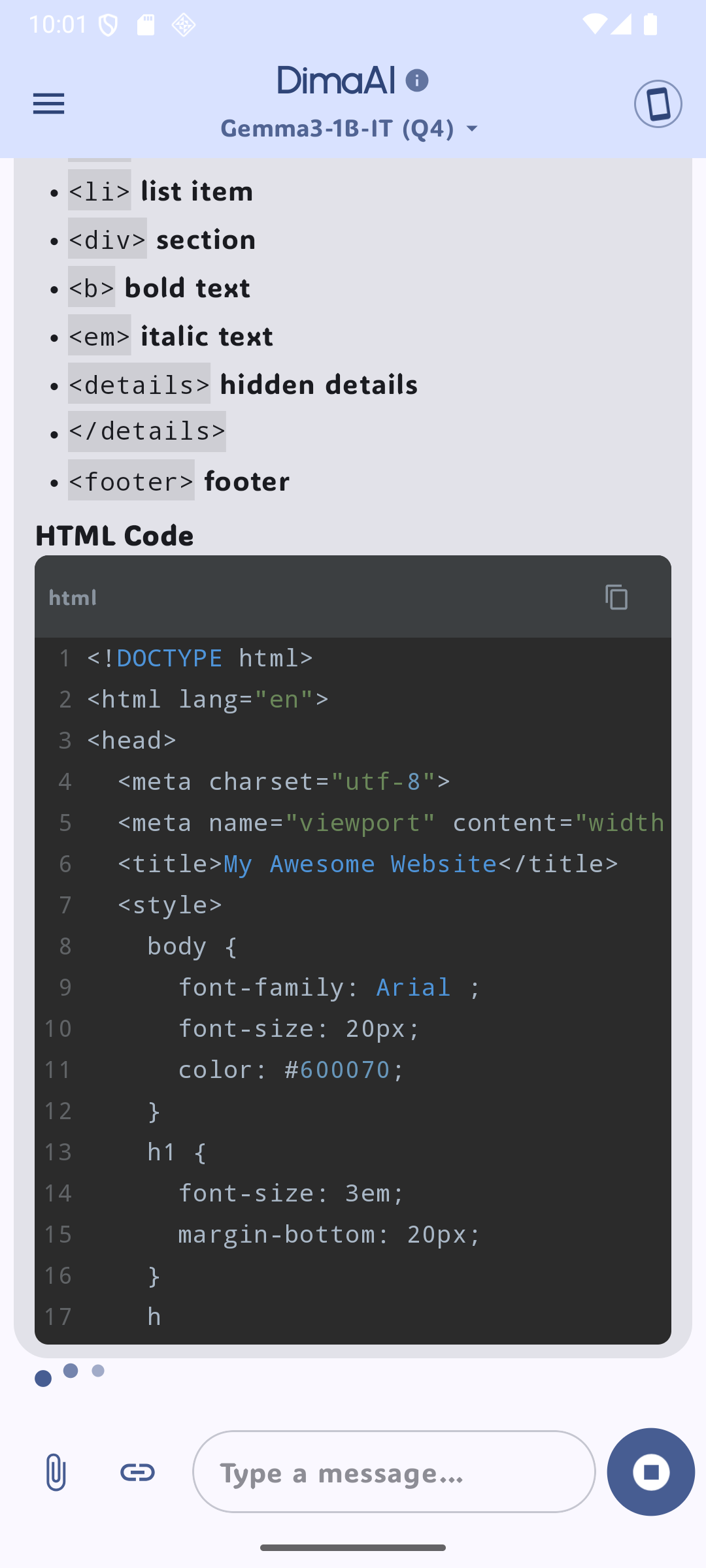

// Rich Text / Markdown

implementation(libs.richtext.commonmark)

implementation(libs.richtext.ui.material3)

// Room (Database)

implementation(libs.androidx.room.common.jvm)

implementation(libs.androidx.room.ktx)

implementation(libs.androidx.room.runtime)

ksp(libs.room.compiler)

// WorkManager

implementation(libs.androidx.work.runtime.ktx)

// WindowSizeClass

implementation(libs.androidx.material3.window.size.class1)

// MockK for Unit Tests

testImplementation(libs.mockk)

// MockK for Android instrumented Tests

androidTestImplementation(libs.mockk.android)

// Navigation Testing

androidTestImplementation(libs.androidx.navigation.testing)

// Coroutines tests

testImplementation(libs.kotlinx.coroutines.test)

// RichContent

implementation(libs.androidx.appcompat)-

Clone the repository:

git clone https://github.com/dimadusk/DimaAI.git cd DimaAI -

Open in Android Studio

-

Launch on device/emulator with Android 10+

ChatSession: stores metadata (title, model used, timestamp)ChatMessage: stores message content, role, timestamp, attachments- Messages and sessions are stored in Room with cascade delete

Supports:

- Images:

.jpg,.png - Documents: `.txt

- Files stored in

filesDirunder:/chat_files//chat_images/

AttachmentHandler handles:

- Preview

- MIME validation

- Secure copy to internal storage

Supported via:

- Direct binary (

.task) - Zipped model packages (

.taskwith manifest) - https://huggingface.co/litert-community/models

Includes:

- Metadata persistence in Room

- Live download progress

- SHA/size verification

🔧 Bundled models allowlist defined in res/raw/models.json

- Room DAO: Unit tests for

ChatDao.kt - Repository:

ChatRepositoryTest.kt - UI:

composeTestRulesnapshot tests

📌

ChatWorkerFactoryis injected viaApplicationclass

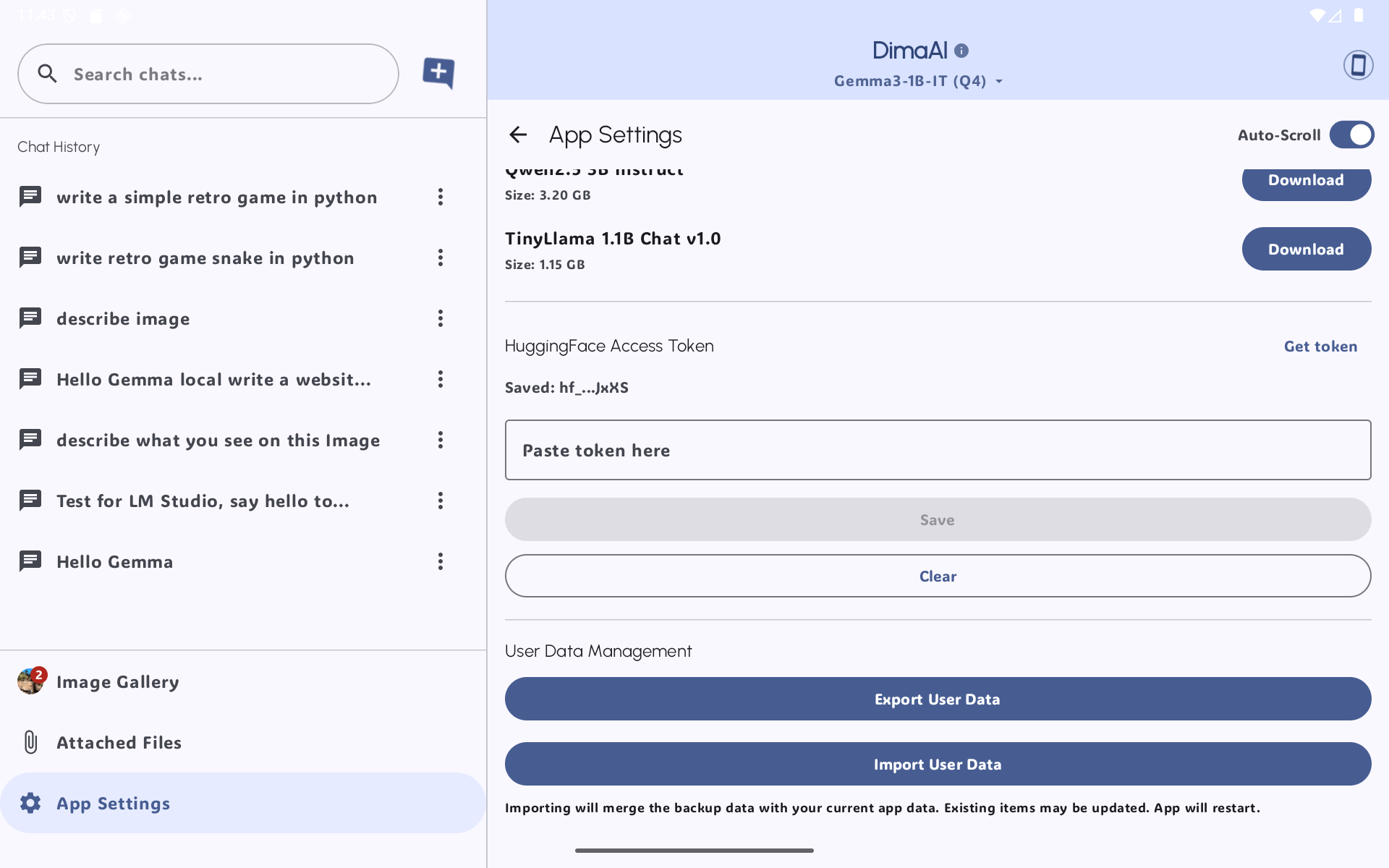

App supports:

- Full ZIP export (database + preferences + files)

- Individual session export (single

.txt) - Backup file is versioned, and includes manifest

Secure access token encryption using:

- Android Keystore

- AES/GCM + IV

- Stored via

DataStore

Use case:

preferenceManager.saveAccessToken("hf_xxx...")

val token = preferenceManager.readAccessToken()val serverUrlFlow: Flow<String>

val selectedModelFlow: Flow<String>

val inferenceEngineFlow: Flow<EngineMode>

val useReliableScrollingFlow: Flow<Boolean>All settings persist via DataStore with safe fallback.

DimaAI supports multilingual UI and LLM chat and text input in the following languages:

English, Ukrainian (uk), Czech (cs), Danish (da), German (de), Greek (el), Spanish (es), Estonian (et), Finnish (fi), French (fr), Croatian (hr), Hungarian (hu), Indonesian (in), Italian (it), Japanese (ja), Korean (ko), Lithuanian (lt), Latvian (lv), Dutch (nl), Norwegian (no), Polish (pl), Portuguese (pt), Romanian (ro), Slovak (sk), Serbian (sr), Swedish (sv), Turkish (tr), Traditional Chinese (zh-rTW), Arabic (ar), Hindi (hi), Hebrew (he)

📘 Language support depends on the selected model and backend (e.g., Ollama, LM Studio, or local).

- HuggingFace inference API support (LiteRT on device models which require Authorization)

- Offline quantized model UI launcher (14 LLMs fully working offline from device)

- Full Backup and Import System as .zip

- UI polish for tablet/foldables

- Secure, Encrypted Token Storage

- Multi-Backend Support (Ollama & LM Studio)

- Background work and notifications via Workmanager

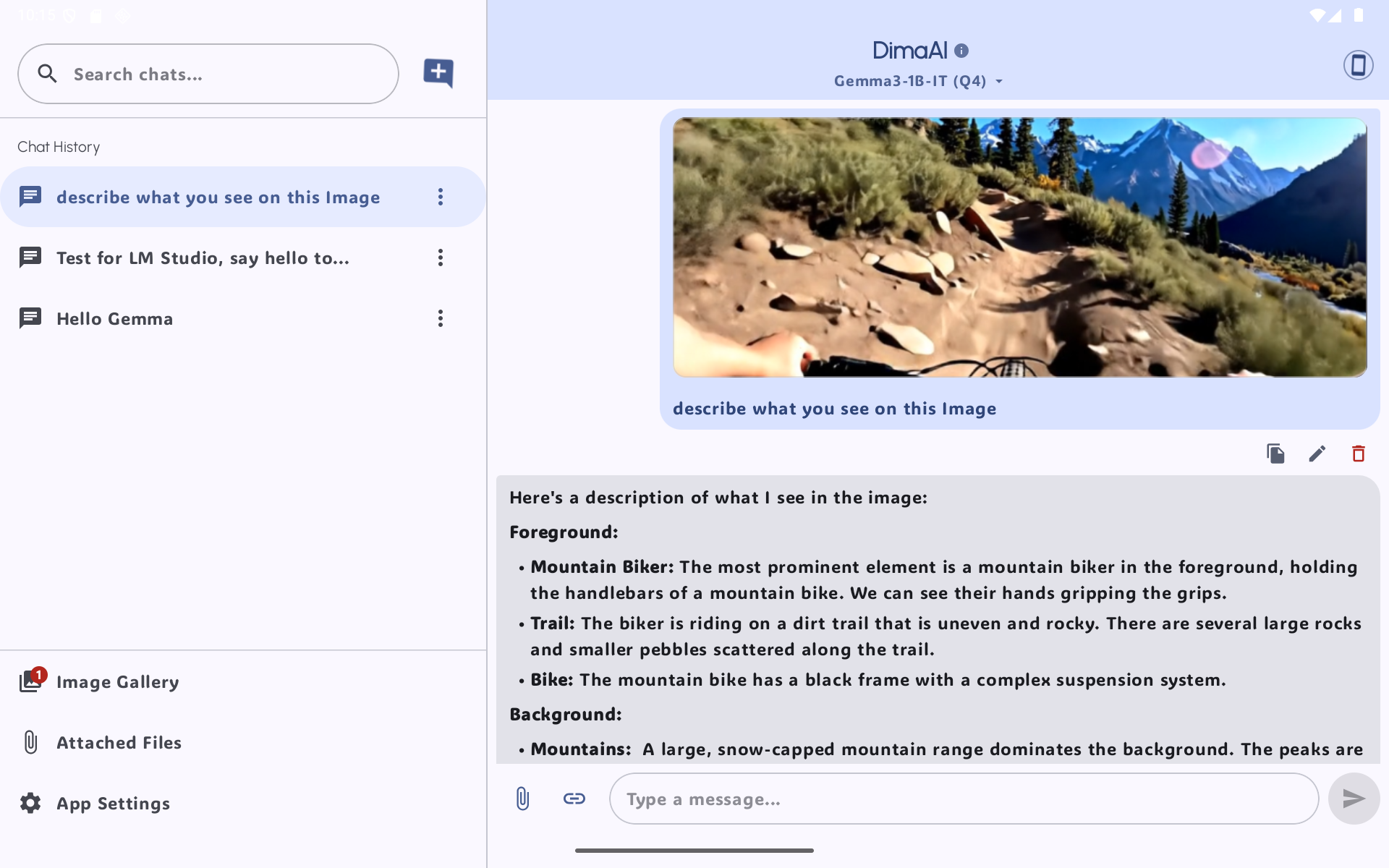

- Advanced Chat and File Handling (.jpg, .png, .txt) within chat messages (Image recognitions support by Ollama only Vision model required)

- Fork the repo

- Create a new branch (

git checkout -b feature/my-feature) - Commit your changes (

git commit -am 'Add feature') - Push to the branch (

git push origin feature/my-feature) - Open a Pull Request

MIT License

© 2025 dimadusk

Permission is hereby granted, free of charge, to any person obtaining a copy...

See full LICENSE for more details.

Built with ❤️ by @dimadusk

If this helped you, consider ⭐ starring the repo!

| Chat | Menu | Settings |

|---|---|---|

|

|

|

| History | Preferences | Chat (Streaming) |

|---|---|---|

|

|

|

| Preview | Settings | HuggingFace Auth |

|---|---|---|

|

|

|

| History | Preferences | Streaming |

|---|---|---|

|

|

|

If you find DimaAI helpful, consider supporting its development ❤️