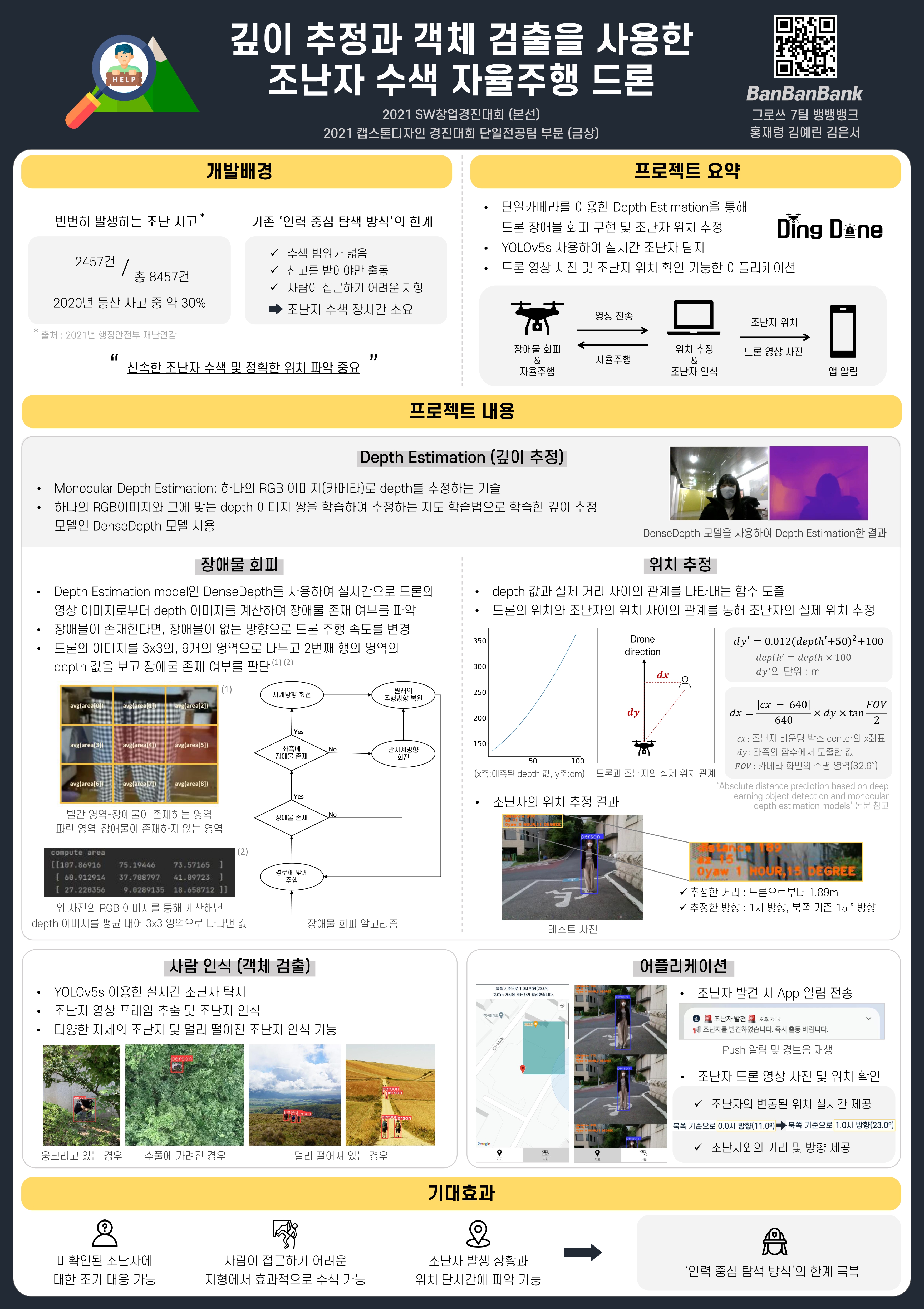

DingDone: Autonomous Flight Drone for Searching for Survivors with Monocular Depth Estimation and Object Detection

The repository consists of two modules: Avoidance and Localization.

- This code has been tested with Keras 2.2.4, TensorFlow 1.13, and CUDA 10.0 on a machine with an NVIDIA Titan V and 16 GB+ RAM running Windows 10 or Ubuntu 16.

- Other required packages: keras, pillow, matplotlib, scikit-learn, scikit-image, and opencv-python; pydot and GraphViz for model graph visualization; and PyGLM, PySide2, and pyopengl for the GUI demo.

- Minimum hardware tested for inference: NVIDIA GeForce 940MX (laptop) / NVIDIA GeForce GTX 950 (desktop).

- Training takes about 24 hours on a single NVIDIA TITAN RTX with a batch size of 8.
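Because the tested versions above are old (TensorFlow 1.13 predates TensorFlow 2), it can help to confirm what is installed before running the demos. The following is a minimal sketch, not part of the repository: the helper names check_versions and version_matches are hypothetical, and it assumes each package exposes a __version__ attribute (Keras and TensorFlow both do).

```python
import importlib


def version_matches(installed, tested):
    """True if `installed` equals `tested` or extends it (e.g. "1.13.1" vs "1.13")."""
    inst = installed.split(".")
    want = tested.split(".")
    return inst[:len(want)] == want


def check_versions(tested):
    """Map each module name to (installed_version_or_None, matches_tested_version)."""
    report = {}
    for name, want in tested.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            # Package is missing (or failed to import).
            report[name] = (None, False)
            continue
        installed = getattr(module, "__version__", None)
        ok = installed is not None and version_matches(installed, want)
        report[name] = (installed, ok)
    return report


if __name__ == "__main__":
    # Versions listed in this README; adjust if your setup differs.
    TESTED = {"keras": "2.2.4", "tensorflow": "1.13"}
    for name, (installed, ok) in check_versions(TESTED).items():
        status = "OK" if ok else "differs from tested version"
        print(f"{name}: {installed or 'not installed'} ({status})")
```

A mismatch is not necessarily fatal, but it is the first thing to check if the demos fail to load their models.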

- Avoiding obstacles with monocular depth estimation

- Detecting survivors with object detection

- Estimating the distance and direction of a survivor from the drone
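One simple way to turn a depth map into an avoidance decision is to compare how open the left, center, and right parts of the view are. The sketch below is a hypothetical illustration of that idea, not the logic in DingDone_path.py: it assumes a depth map given as rows of per-pixel depth values where larger means farther away, and a threshold min_clearance (a hypothetical parameter) in the same units.

```python
from statistics import mean


def choose_direction(depth, min_clearance):
    """Return 'forward', 'left', 'right' or 'stop' for a depth map.

    depth: list of rows, each a list of per-pixel depths (larger = farther).
    min_clearance: depth below which a strip is treated as blocked (same units).
    """
    if not depth or len(depth[0]) < 3:
        raise ValueError("depth map must have at least one row and 3 columns")
    width = len(depth[0])
    third = width // 3
    # Split the view into three vertical strips: left, center, right.
    strips = {
        "left": (0, third),
        "forward": (third, width - third),
        "right": (width - third, width),
    }
    # Mean depth of each strip as a rough measure of how open it is.
    clearance = {
        name: mean(d for row in depth for d in row[start:end])
        for name, (start, end) in strips.items()
    }
    # Keep going straight while the center is clear enough.
    if clearance["forward"] >= min_clearance:
        return "forward"
    # Otherwise turn toward the more open side, or stop if both are blocked.
    side = "left" if clearance["left"] >= clearance["right"] else "right"
    return side if clearance[side] >= min_clearance else "stop"


if __name__ == "__main__":
    # Obstacle in the center, open space on the left.
    print(choose_direction([[3.0, 3.0, 0.4, 0.4, 1.5, 1.5]], min_clearance=1.0))  # left
```

Averaging over each strip smooths out noisy pixels; a more conservative variant would use the minimum or a low percentile of each strip, at the cost of more unnecessary stops.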

- Clone this repository:

$ git clone https://github.com/Ewha-BanBanBank/DingDone_final.git

$ cd DingDone_final

- Connect the drone to your computer.

- Run the obstacle avoidance demo:

$ cd Avoidance

$ python3 DingDone_path.py

- Run the survivor localization demo, which detects survivors, estimates their position relative to the drone, and displays this information in the application:

$ cd ../Localization

$ python3 localization.py
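The localization step combines a detection with the depth map: the box center gives the horizontal direction, and the depth inside the box gives the distance. The following is a minimal sketch of that idea, not the code in localization.py. It assumes detections in the row layout YOLOv5 uses for results.xyxy[0] (x1, y1, x2, y2, confidence, class), pretrained COCO weights where class 0 is "person", a depth map already resized to the detector's input resolution, and a horizontal field of view hfov_deg, which is a hypothetical parameter to be taken from your drone camera's specifications.

```python
import math
from statistics import median

# Class index of "person" in the COCO labels used by the pretrained YOLOv5 weights.
PERSON_CLASS_ID = 0


def best_person(detections, min_conf=0.5):
    """Return the most confident person row [x1, y1, x2, y2, conf, cls], or None."""
    people = [d for d in detections if int(d[5]) == PERSON_CLASS_ID and d[4] >= min_conf]
    return max(people, key=lambda d: d[4]) if people else None


def bearing_deg(x_center, image_width, hfov_deg):
    """Horizontal angle of a pixel column from the optical axis (pinhole model).

    Negative values are left of center, positive values right of center.
    """
    focal_px = (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return math.degrees(math.atan((x_center - image_width / 2) / focal_px))


def locate_survivor(detections, depth, hfov_deg, min_conf=0.5):
    """Return (distance, bearing in degrees) of the most confident person, or None.

    depth must be a list of rows at the same resolution as the detector input;
    distance is returned in the depth map's own units.
    """
    box = best_person(detections, min_conf)
    if box is None:
        return None
    height, width = len(depth), len(depth[0])
    # Clip the box to the image and take the median depth inside it,
    # which is less sensitive to background pixels than the mean.
    x1, x2 = max(0, int(box[0])), min(width, int(math.ceil(box[2])))
    y1, y2 = max(0, int(box[1])), min(height, int(math.ceil(box[3])))
    region = [d for row in depth[y1:y2] for d in row[x1:x2]]
    if not region:
        return None
    return median(region), bearing_deg((box[0] + box[2]) / 2, width, hfov_deg)
```

With a YOLOv5 model loaded through torch.hub.load("ultralytics/yolov5", "yolov5s"), the detection rows could be obtained as model(frame).xyxy[0].tolist(). The distance is only as accurate as the depth map's scale, so it may need calibration against known distances.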

- Monocular depth estimation: https://github.com/ialhashim/DenseDepth

- Object detection: https://github.com/ultralytics/yolov5