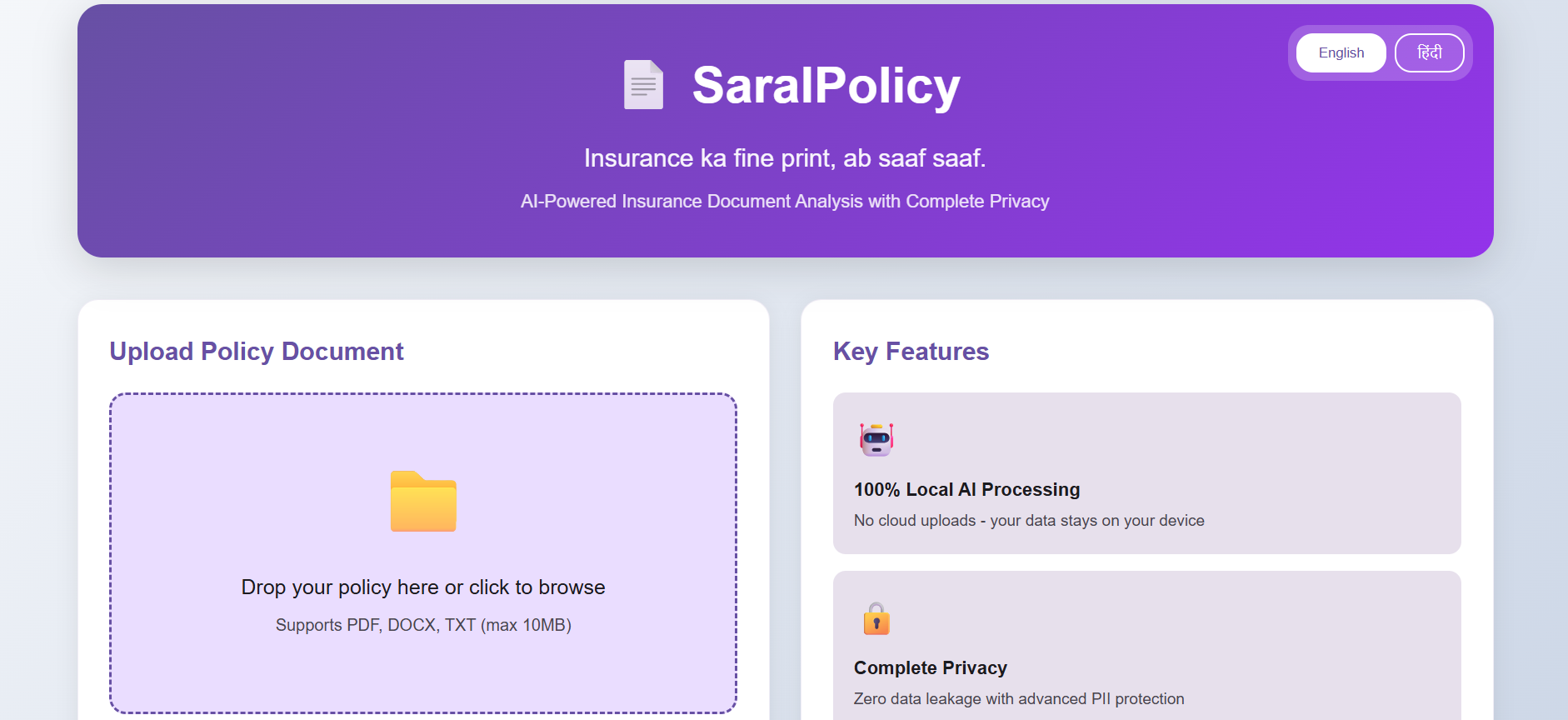

Tagline: "Insurance ka fine print, ab saaf saaf." (Insurance fine print, now crystal clear.)

Status: 🟢 POC/Demo (Remediation Complete) | Progress: 26/26 Issues Resolved (100%) | View Status | Implementation Report

SaralPolicy is an AI-powered insurance document analysis system (POC/Demo) that uses local AI models to produce clear, plain-language policy summaries in Hindi and English. It is backed by guardrails and human-in-the-loop (HITL) validation. All processing happens locally, so policy documents stay on your machine.

Status: 🟡 POC/Demo - See Status and Production Engineering Evaluation for details.

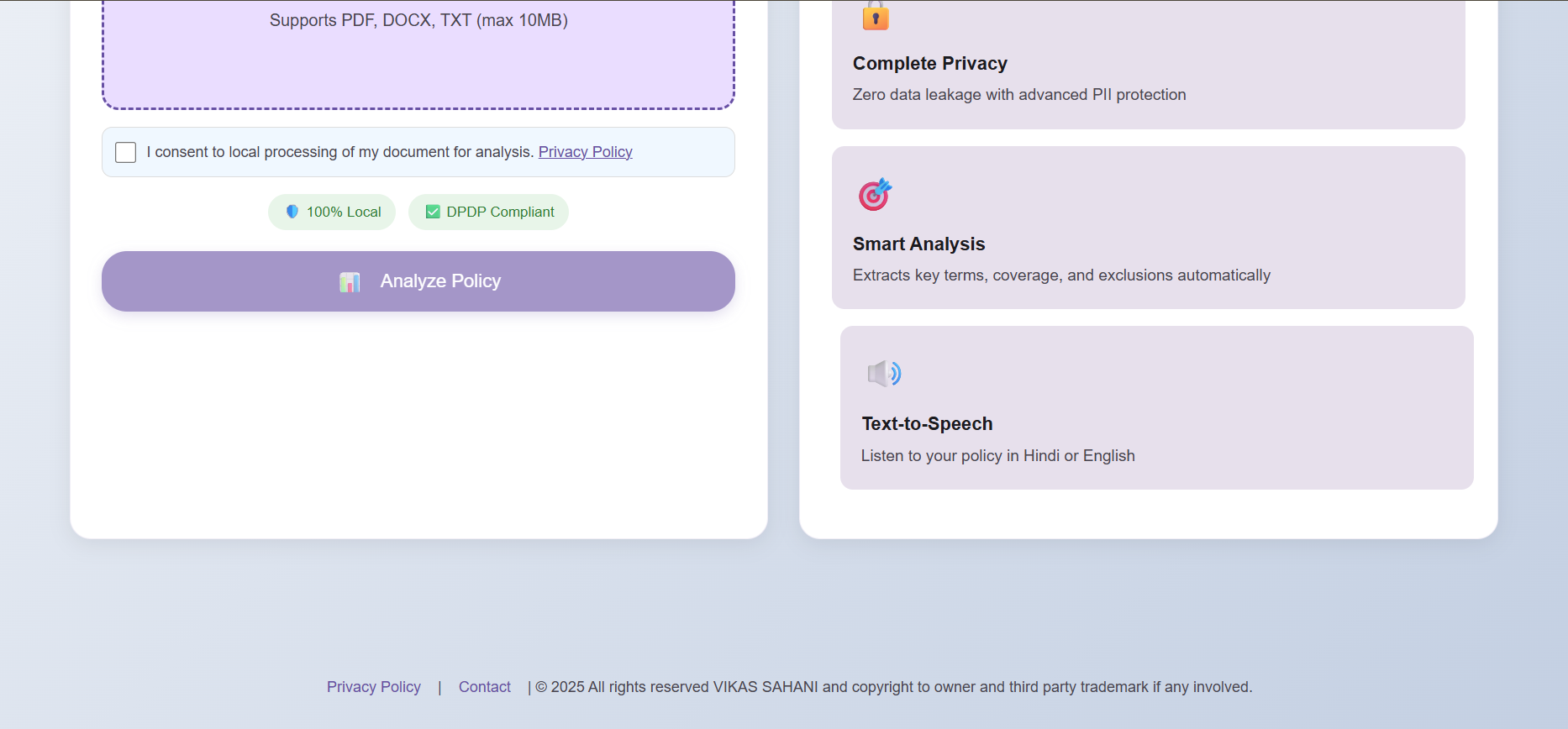

Beautiful Material 3 design with drag & drop document upload

Real-time policy analysis with AI-powered insights

IRDAI knowledge base indexing with ChromaDB

Hybrid search combining BM25 + Vector embeddings

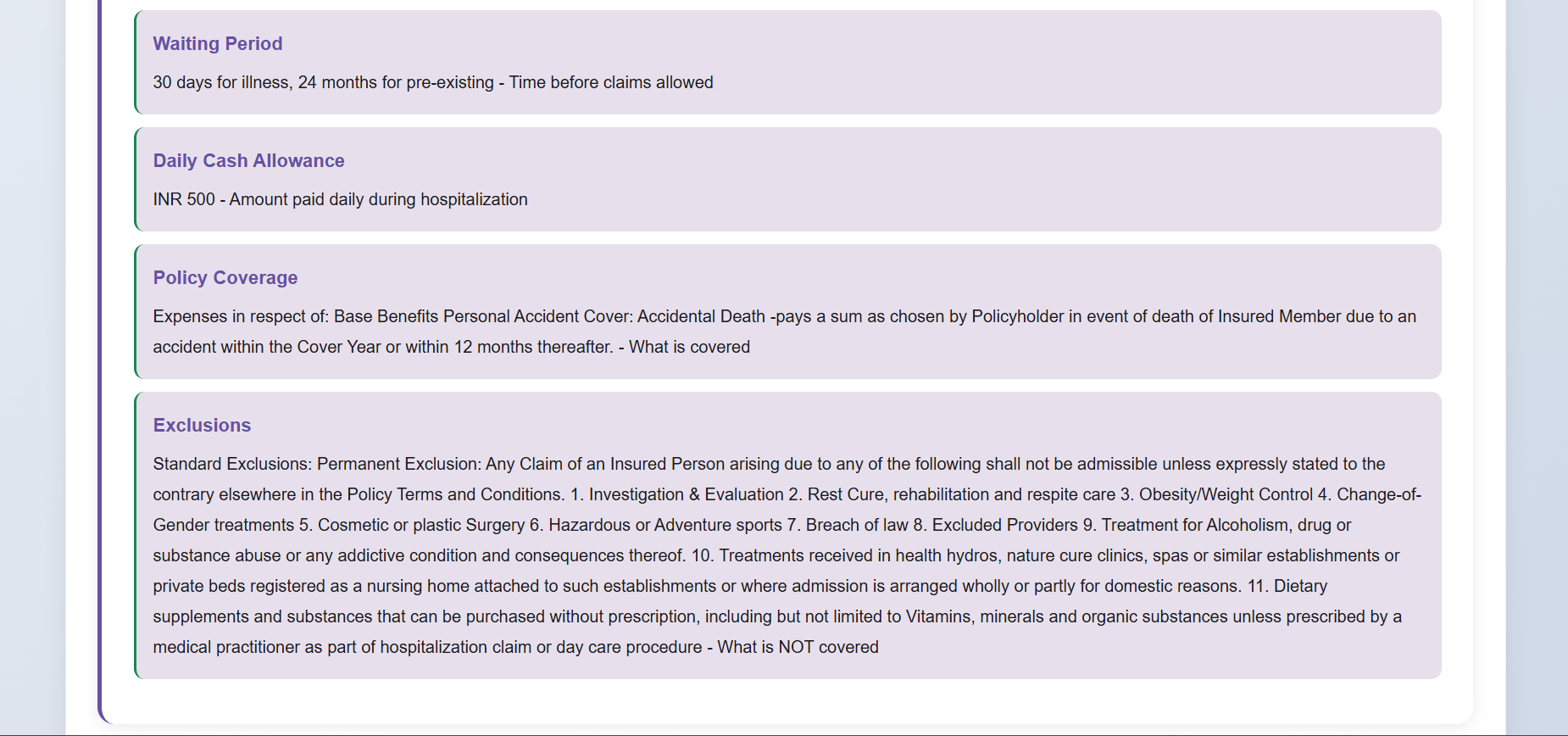

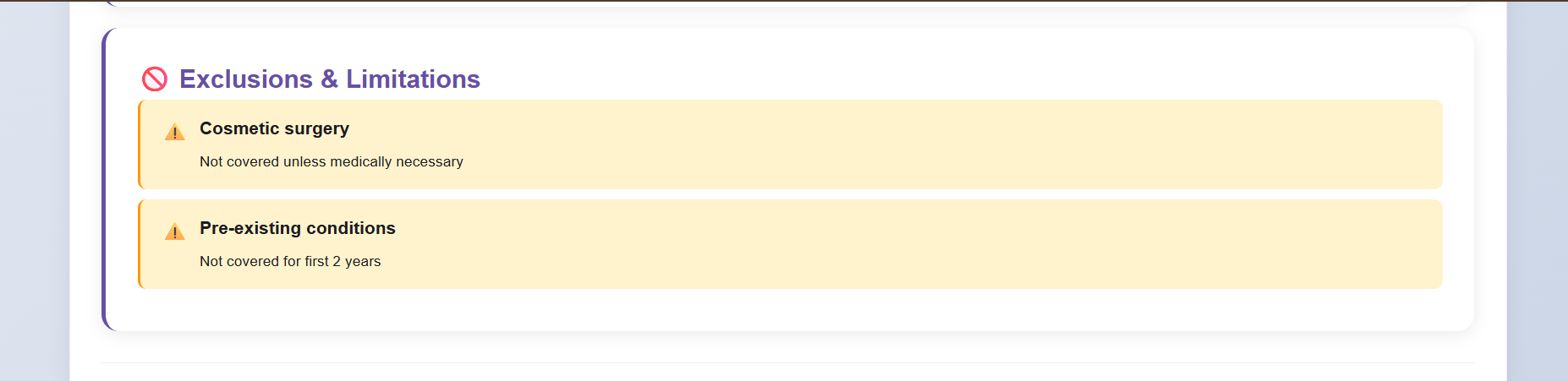

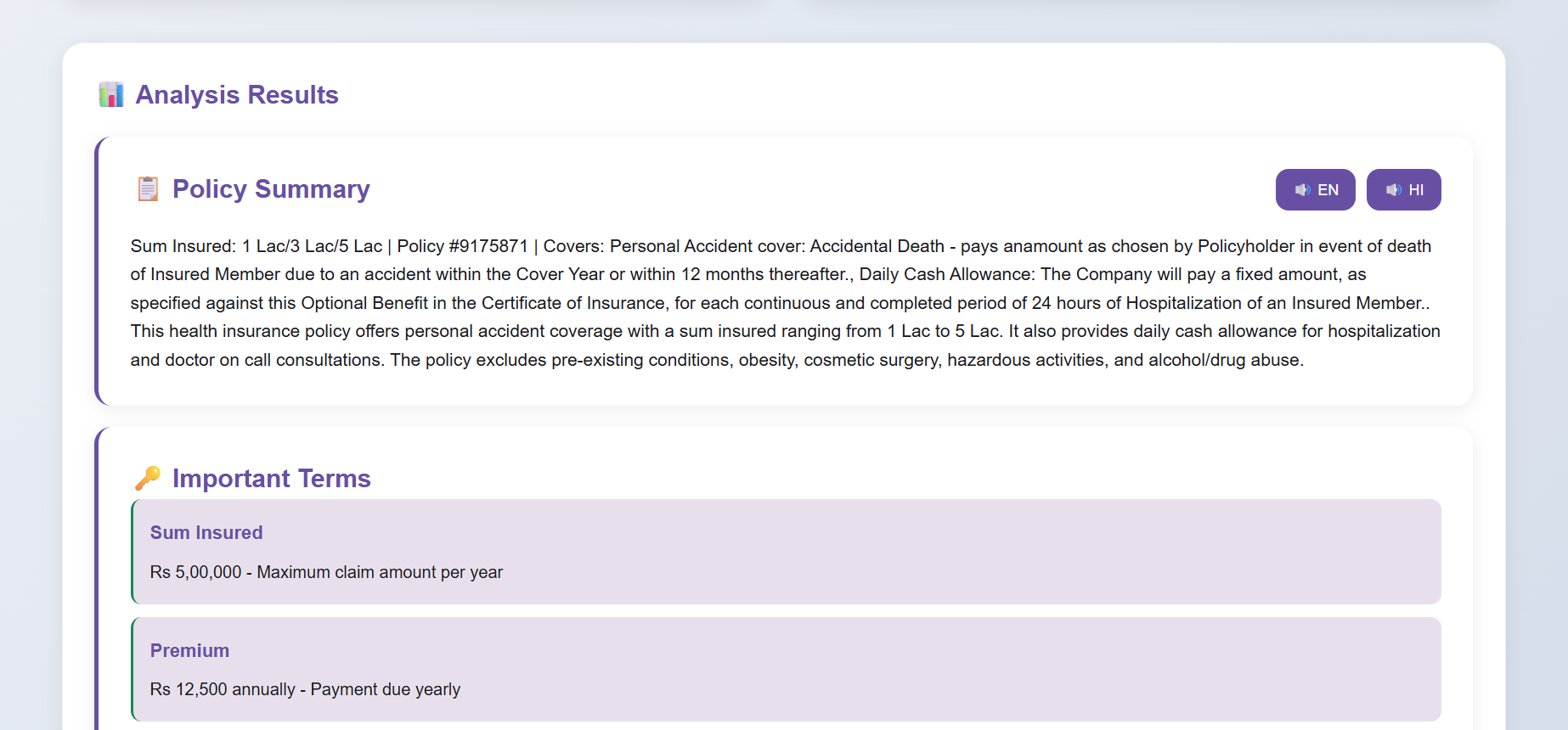

Comprehensive policy breakdown with key terms

- ⚡ Optimized Document Parsing: Parallel PDF processing with ThreadPoolExecutor (4 workers)

- 📦 Smart Caching: Document and embedding caching with MD5 hashing

- 🔄 Batch Operations: Parallel embedding generation for faster RAG indexing

- 📊 Performance Monitoring: Real-time metrics tracking for all operations

- 🤖 Local AI Analysis: Ollama + Gemma 2 2B model running locally for privacy

- 🧠 RAG-Enhanced: Retrieval-Augmented Generation with IRDAI knowledge base (39 chunks)

- 🔍 Hybrid Search: BM25 (keyword) + Vector (semantic) search with query caching

- 📤 Advanced Embeddings: nomic-embed-text (274MB) with connection pooling

- 🔒 Advanced Guardrails: Input validation, PII protection, and safety checks

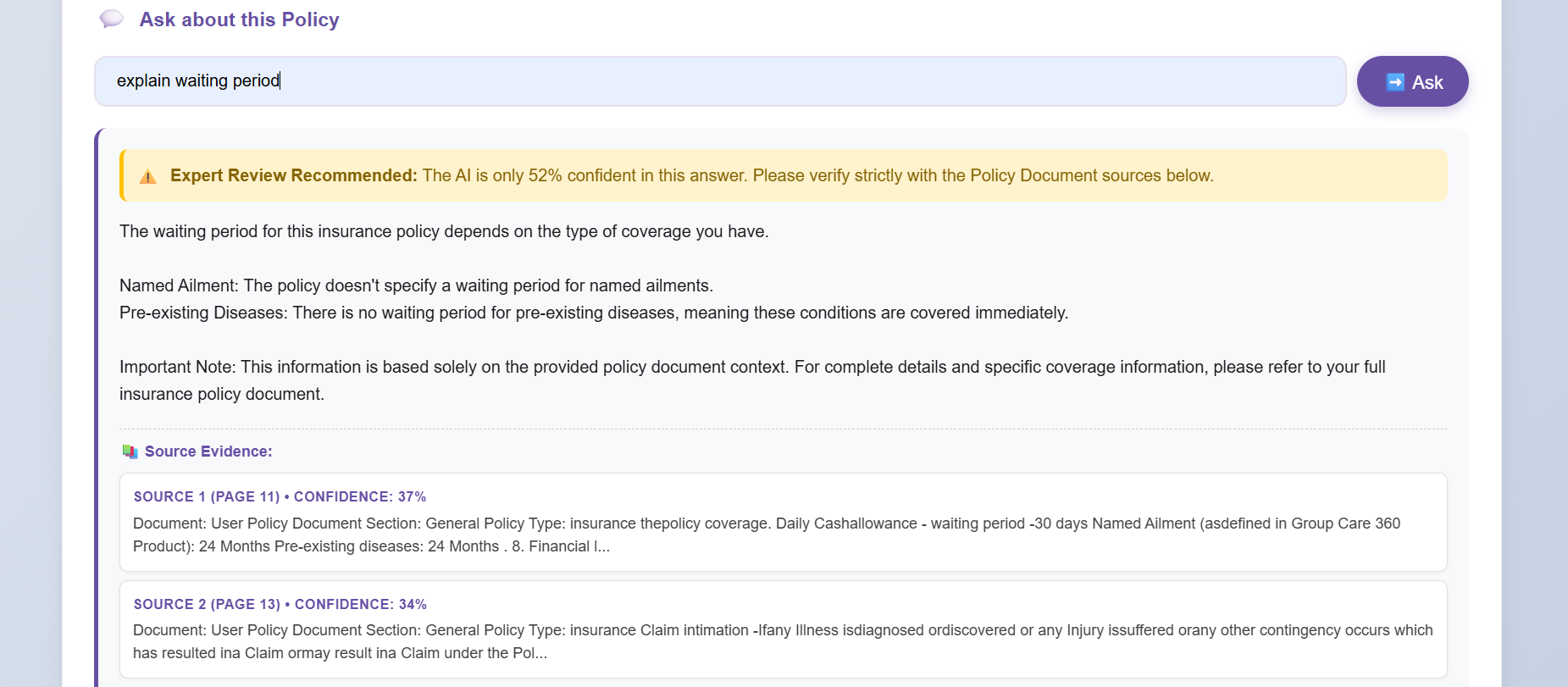

- 👥 Human-in-the-Loop: Expert review for low-confidence analyses

- 📊 RAGAS Evaluation: Faithfulness, relevancy, precision metrics (Apache 2.0)

- 📁 Multi-Format Support: PDF, DOCX, and TXT files with drag & drop

- 🔑 Key Insights: Coverage details, exclusions, and important terms

- ❓ Interactive Q&A: Document-specific Q&A with IRDAI augmentation

- 🎨 Modern UI: Beautiful Material 3 design with responsive layout

- 🌐 Bilingual: Results in both Hindi and English

- 🔊 Text-to-Speech: High-quality Hindi neural TTS (Indic Parler-TTS)

- 🔒 100% Privacy: Complete local processing, no data leaves your machine
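The hybrid search feature combines keyword (BM25) and semantic (vector) rankings. The README does not say how the two result lists are merged. The sketch below is illustrative only and makes several assumptions:

- The two rankings are merged with reciprocal rank fusion (RRF, k=60).
- BM25 is a small pure-Python implementation (k1=1.5, b=0.75).
- Toy 2-d vectors stand in for nomic-embed-text output.

None of the function names come from SaralPolicy's code.

```python
import math
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Lowercased whitespace split; real code would also strip punctuation.
    return text.lower().split()


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of the query against every document."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n
    # Document frequency: number of documents containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine(u: List[float], v: List[float]) -> float:
    dot = sum(x * y for x, y in zip(u, v))
    denom = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / denom if denom else 0.0


def rrf_fuse(rankings: List[List[int]], k: int = 60) -> List[int]:
    """Reciprocal rank fusion: a doc at rank r in a list adds 1 / (k + r)."""
    fused: Dict[int, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=fused.get, reverse=True)


def hybrid_search(query, docs, query_vec, doc_vecs, top_k=3):
    keyword = bm25_scores(query, docs)
    semantic = [cosine(query_vec, v) for v in doc_vecs]
    ids = range(len(docs))
    keyword_rank = sorted(ids, key=lambda i: keyword[i], reverse=True)
    vector_rank = sorted(ids, key=lambda i: semantic[i], reverse=True)
    return rrf_fuse([keyword_rank, vector_rank])[:top_k]


docs = [
    "waiting period of 30 days applies to illness claims",
    "pre-existing diseases are covered after 36 months",
    "free look period lets you cancel within 15 days",
]
# Toy 2-d vectors standing in for nomic-embed-text embeddings.
doc_vecs = [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]]
print(hybrid_search("waiting period", docs, [1.0, 0.0], doc_vecs))  # [0, 2, 1]
```

RRF merges by rank rather than raw score. That avoids having to normalize BM25 scores (unbounded) against cosine similarities (bounded) before combining them.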

- OS: Windows, Linux, or macOS

- Python: 3.9 or higher

- RAM: Minimum 8GB (16GB recommended)

- Disk Space: ~10GB (for models and virtual environment)

```bash
# Clone the repository
git clone https://github.com/VIKAS9793/SaralPolicy.git
cd SaralPolicy

# Create and activate a virtual environment
python -m venv venv
# Windows:
venv\Scripts\activate
# Linux/Mac:
# source venv/bin/activate

# Upgrade pip
python -m pip install --upgrade pip
```

```bash
cd backend
pip install -r requirements.txt
```

```bash
# Download and install Ollama from https://ollama.ai/download

# Pull required models
ollama pull gemma2:2b
ollama pull nomic-embed-text

# Start the Ollama service (keep it running in the background)
ollama serve
```

```bash
# Copy the environment template
# Windows:
copy .env.example .env
# Linux/Mac:
# cp .env.example .env

# Edit .env and add your HuggingFace token (optional, for Indic Parler-TTS)
# HF_TOKEN=hf_your_token_here
```

```bash
# Index the IRDAI knowledge base
python scripts/index_irdai_knowledge.py
```

```bash
# Start the server
python main.py
```

Visit http://localhost:8000 to access the Material 3 web interface.
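If the indexing script or the server cannot reach the model, a quick preflight check helps narrow things down. The sketch below is not part of the repository. It makes these assumptions:

- It queries Ollama's REST endpoint `GET /api/tags`, which lists locally pulled models.
- It reads `OLLAMA_HOST`, defaulting to the `localhost:11434` value from the `.env` example.
- The helper names are hypothetical.

```python
import json
import os
import urllib.request
from typing import Iterable, List

REQUIRED_MODELS = ("gemma2:2b", "nomic-embed-text")


def ollama_base_url() -> str:
    """Read OLLAMA_HOST (default localhost:11434) and ensure it has a scheme."""
    host = os.environ.get("OLLAMA_HOST", "localhost:11434")
    return host if host.startswith(("http://", "https://")) else f"http://{host}"


def missing_models(tags: dict, required: Iterable[str] = REQUIRED_MODELS) -> List[str]:
    """Return the required models that are absent from an /api/tags response."""
    names = {m.get("name", "") for m in tags.get("models", [])}
    # Models pulled without a tag are listed as "<name>:latest".
    names |= {n[: -len(":latest")] for n in names if n.endswith(":latest")}
    return [m for m in required if m not in names]


def check(timeout: float = 5.0) -> int:
    """Return 0 if Ollama answers and all required models are present, else 1."""
    url = f"{ollama_base_url()}/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            tags = json.load(resp)
    except OSError as exc:  # URLError and socket timeouts are OSError subclasses
        print(f"Ollama not reachable at {url}: {exc} (is `ollama serve` running?)")
        return 1
    missing = missing_models(tags)
    for model in missing:
        print(f"Missing model; run: ollama pull {model}")
    return 1 if missing else 0
```

To use it, save the code as a hypothetical `preflight.py` and run `python -c "import preflight; raise SystemExit(preflight.check())"` from the same directory.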

```
SaralPolicy/
├── backend/
│   ├── main.py                  # Main FastAPI application
│   ├── requirements.txt         # All dependencies (pinned versions)
│   ├── .env.example             # Environment template (safe to commit)
│   ├── .env                     # Your secrets (NEVER commit)
│   ├── app/
│   │   ├── config.py            # Validated configuration (Pydantic)
│   │   ├── dependencies.py      # Dependency Injection Container
│   │   ├── routes/              # API Endpoints
│   │   ├── services/            # Business Logic Services
│   │   │   ├── ollama_llm_service.py      # Local LLM via Ollama
│   │   │   ├── rag_service.py             # RAG with ChromaDB
│   │   │   ├── policy_service.py          # Policy analysis orchestration
│   │   │   ├── guardrails_service.py      # Input/output validation
│   │   │   ├── tts_service.py             # Text-to-speech orchestration
│   │   │   ├── indic_parler_engine.py     # Hindi neural TTS (Apache 2.0)
│   │   │   ├── rag_evaluation_service.py  # RAGAS evaluation
│   │   │   ├── observability_service.py   # OpenTelemetry metrics
│   │   │   └── task_queue_service.py      # Huey task queue
│   │   ├── middleware/          # Security middleware
│   │   ├── prompts/             # Versioned prompt registry
│   │   ├── models/              # Data models
│   │   └── db/                  # Database layer
│   ├── tests/                   # All tests (197 passing)
│   ├── data/
│   │   ├── chroma/              # ChromaDB persistent storage
│   │   └── irdai_knowledge/     # IRDAI regulatory documents
│   ├── scripts/                 # Utility scripts
│   ├── static/                  # Frontend assets (CSS, JS)
│   └── templates/               # HTML templates
├── docs/
│   ├── SETUP.md                 # Installation guide
│   ├── TROUBLESHOOTING.md       # Common issues & solutions
│   ├── SYSTEM_ARCHITECTURE.md   # Detailed architecture
│   ├── STATUS.md                # Current status
│   ├── setup/                   # Additional setup guides
│   ├── reports/                 # Technical reports
│   ├── adr/                     # Architectural decisions
│   └── product-research/        # Strategic documents (16 docs)
├── assets/                      # Screenshots and banners
├── .gitignore                   # Git ignore (includes .kiro/, .env)
├── README.md                    # This file
├── CONTRIBUTING.md              # Contribution guidelines
└── LICENSE                      # MIT License
```
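`app/dependencies.py` is described as a dependency injection container, but its interface is not documented here. The following is a hypothetical minimal sketch of the pattern. Services are registered as factories and created lazily as shared singletons, so a route asks the container for `policy_service` instead of constructing it. All class and service names are illustrative, not SaralPolicy's API.

```python
from typing import Any, Callable, Dict


class Container:
    """Minimal lazy-singleton DI container (hypothetical, not SaralPolicy's API)."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[["Container"], Any]] = {}
        self._instances: Dict[str, Any] = {}

    def register(self, name: str, factory: Callable[["Container"], Any]) -> None:
        self._factories[name] = factory
        self._instances.pop(name, None)  # re-registering drops any cached instance

    def get(self, name: str) -> Any:
        if name not in self._instances:
            if name not in self._factories:
                raise KeyError(f"No service registered under {name!r}")
            # Factories receive the container so they can resolve their own dependencies.
            self._instances[name] = self._factories[name](self)
        return self._instances[name]


# Illustrative stand-ins for the real LLM and policy services.
class FakeLLM:
    def generate(self, prompt: str) -> str:
        return f"summary of: {prompt}"


class PolicyService:
    def __init__(self, llm: FakeLLM) -> None:
        self.llm = llm

    def analyze(self, text: str) -> str:
        return self.llm.generate(text)


container = Container()
container.register("llm", lambda c: FakeLLM())
container.register("policy_service", lambda c: PolicyService(c.get("llm")))
```

Registering a fake LLM this way also lets tests exercise `PolicyService` without a running Ollama instance.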

| Component | Technology | Version / Notes |
|---|---|---|
| Backend | FastAPI | 0.115.6 |
| AI Model | Ollama + Gemma 2 2B | Local |
| Embeddings | nomic-embed-text | 274MB |
| Vector DB | ChromaDB | 0.5.23 |
| Validation | Pydantic | 2.10.3 |
| Database | SQLAlchemy + SQLite | 2.0.36 (SQLAlchemy) |
| UI | Material Design 3 | HTML/CSS/JS |

| Framework | Purpose | License | Fallback |
|---|---|---|---|
| RAGAS | RAG evaluation (faithfulness, relevancy) | Apache 2.0 | Heuristic scoring |
| OpenTelemetry | Metrics, tracing, observability | Apache 2.0 | Built-in metrics |
| Huey | Background task queue (SQLite backend) | MIT | Synchronous execution |
| Indic Parler-TTS | High-quality Hindi neural TTS (0.9B params) | Apache 2.0 | gTTS → pyttsx3 |
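The table lists "Heuristic scoring" as the RAGAS fallback without saying what the heuristic is. The sketch below is an assumption, not the project's code. It illustrates one plausible approach, lexical grounding: the share of answer sentences whose content words mostly appear in the retrieved context. The stopword list and the 0.6 threshold are arbitrary illustrative choices.

```python
import re
from typing import List, Set

# Tiny illustrative stopword list; a real implementation would use a fuller one.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "in", "for", "and", "or", "after", "your"}


def content_words(text: str) -> Set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def heuristic_faithfulness(answer: str, contexts: List[str], threshold: float = 0.6) -> float:
    """Share of answer sentences whose content words mostly occur in the contexts."""
    vocab = set().union(*(content_words(c) for c in contexts))
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = content_words(sentence)
        if words and len(words & vocab) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)


context = ["Pre-existing diseases are covered after a waiting period of 36 months."]
answer = "Pre-existing diseases are covered after 36 months. Cosmetic surgery is fully covered."
print(heuristic_faithfulness(answer, context))  # 0.5
```

A score like this is cheap to compute, but it only measures word overlap. It cannot catch a negation such as "not covered", which is why LLM-judged metrics like RAGAS faithfulness are the primary path.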

Approximate Indic Parler-TTS generation time by input length:

| Hardware | ~100 characters | ~500 characters |
|---|---|---|
| CPU (default) | 2-5 min | 5-10 min |
| GPU (CUDA) | 5-15 sec | 15-45 sec |
| GPU (Apple M1/M2) | 10-30 sec | 30-90 sec |

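Note: Neural TTS on CPU is slow but produces high-quality Hindi speech. For faster responses, the system automatically falls back to gTTS. gTTS uses Google's online text-to-speech service, so it needs an internet connection and sends the text being spoken off the machine. pyttsx3 is the offline last resort.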
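The fallback order in the table (Indic Parler-TTS, then gTTS, then pyttsx3) is an ordered chain: try each engine and move on when it fails. Below is a minimal sketch of that pattern. The engine functions are hypothetical stand-ins that simulate failures, not the real `tts_service.py` code. The sketch also models only failure-based fallback, not a timeout-based switch.

```python
import logging
from typing import Callable, Sequence, Tuple

logger = logging.getLogger("tts")

# An engine takes text and returns audio bytes, raising on failure.
Engine = Callable[[str], bytes]


def synthesize_with_fallback(text: str, engines: Sequence[Tuple[str, Engine]]) -> Tuple[str, bytes]:
    """Return (engine_name, audio) from the first engine that succeeds."""
    errors = []
    for name, engine in engines:
        try:
            return name, engine(text)
        except Exception as exc:  # any engine failure moves on to the next fallback
            logger.warning("TTS engine %s failed: %s", name, exc)
            errors.append(f"{name}: {exc}")
    raise RuntimeError("All TTS engines failed: " + "; ".join(errors))


# Hypothetical stand-ins for the three engines named in the table.
def indic_parler(text: str) -> bytes:
    raise RuntimeError("model not loaded")  # e.g. model weights unavailable


def gtts_engine(text: str) -> bytes:
    raise ConnectionError("offline")  # gTTS needs network access


def pyttsx3_engine(text: str) -> bytes:
    return b"RIFF...fake-wav"


ENGINES = [("indic-parler-tts", indic_parler), ("gtts", gtts_engine), ("pyttsx3", pyttsx3_engine)]
```

Returning the engine name alongside the audio lets the caller report which quality tier was actually used.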

```mermaid
graph TB
    subgraph FE["🎨 FRONTEND"]
        UI["Material 3 Web UI"]
    end

    subgraph API["⚡ API LAYER"]
        FAST["FastAPI Server"]
    end

    subgraph CORE["🔧 CORE SERVICES"]
        DOC["Document Service"]
        RAG["RAG Service"]
        LLM["Ollama LLM<br/>gemma2:2b"]
        POLICY["Policy Service"]
    end

    subgraph STORAGE["💾 STORAGE"]
        CHROMA["ChromaDB"]
        IRDAI["IRDAI Knowledge<br/>39 chunks"]
    end

    subgraph SAFETY["🛡️ SAFETY"]
        GUARD["Guardrails"]
        EVAL["RAGAS Evaluation"]
        HITL["Human-in-the-Loop"]
    end

    subgraph AUX["📊 AUXILIARY"]
        TTS["TTS Service<br/>Indic Parler-TTS"]
        TRANS["Translation<br/>Argos Translate"]
    end

    UI --> FAST
    FAST --> DOC
    FAST --> POLICY
    POLICY --> RAG
    POLICY --> LLM
    RAG --> CHROMA
    IRDAI --> CHROMA
    POLICY --> GUARD
    POLICY --> EVAL
    EVAL --> HITL
    FAST --> TTS
    FAST --> TRANS
```
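In the diagram, results flow from RAGAS Evaluation into Human-in-the-Loop. The sketch below assumes a simple routing rule: an analysis goes to expert review if any evaluation metric is missing or below a threshold. The metric names follow RAGAS naming for the faithfulness, relevancy, and precision metrics in the feature list. The 0.7 threshold and the data shapes are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# RAGAS-style metric names; the 0.7 threshold is an arbitrary illustrative choice.
METRICS = ("faithfulness", "answer_relevancy", "context_precision")


@dataclass
class ReviewDecision:
    needs_review: bool
    reasons: List[str] = field(default_factory=list)


def route_for_review(scores: Dict[str, float], threshold: float = 0.7) -> ReviewDecision:
    """Send an analysis to expert review if any metric is missing or below threshold."""
    reasons = []
    for metric in METRICS:
        value = scores.get(metric)
        if value is None:
            reasons.append(f"{metric} missing")  # fail closed: no score, no auto-approval
        elif value < threshold:
            reasons.append(f"{metric}={value:.2f} < {threshold}")
    return ReviewDecision(needs_review=bool(reasons), reasons=reasons)


decision = route_for_review({"faithfulness": 0.55, "answer_relevancy": 0.82, "context_precision": 0.9})
print(decision)  # ReviewDecision(needs_review=True, reasons=['faithfulness=0.55 < 0.7'])
```

Treating a missing score as a reason for review is a conservative choice. If evaluation itself fails, the analysis still reaches a human rather than being auto-approved.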

📖 Full Architecture: See SYSTEM_ARCHITECTURE.md

```bash
cd backend

# Run all tests (197 tests)
python -m pytest tests/ -v

# Run specific test categories
python -m pytest tests/test_security.py -v                 # Security tests
python -m pytest tests/test_oss_frameworks.py -v           # OSS framework tests
python -m pytest tests/test_hallucination_detection.py -v  # Hallucination tests

# Run with coverage
python -m pytest tests/ --cov=app --cov-report=html
```

| Category | Tests | Description |
|---|---|---|
| Configuration | 23 | Config validation, environment handling |
| Security | 15 | Input sanitization, PII protection, CORS |
| Error Paths | 23 | Graceful degradation, fallback handling |
| Hallucination | 15 | Detection accuracy, grounding validation |
| OSS Frameworks | 28 | RAGAS, OpenTelemetry, Huey integration |
| Integration | 20+ | End-to-end workflow tests |
| Total | 197 | All passing |

- 100% Local Processing: All AI analysis happens on your machine

- No Cloud APIs: Zero data sent to external services

- No API Keys Required: Core functionality works offline

- Advanced Guardrails: Input validation, PII protection, safety checks

- HITL Validation: Expert review for quality assurance

- Secure Secrets: the `.env` file is listed in `.gitignore` and never committed

```bash
# backend/.env (NEVER commit this file)
HF_TOKEN=hf_your_token_here    # Optional: for Indic Parler-TTS
OLLAMA_HOST=localhost:11434    # Default Ollama endpoint
```

- Changelog - Version history and release notes

- Setup Guide - Installation and configuration

- Troubleshooting - Common issues and solutions

- System Architecture - Technical deep-dive

- Status - Current project status

- Ollama Setup - Local AI model setup

| # | Document | Description |
|---|---|---|
| 1 | Executive Summary | Problem, solution, market opportunity (₹1,200 Cr) |
| 2 | Product Vision | Long-term vision and strategy |
| 3 | Business Case | Market sizing (515M policies), revenue models |
| 4 | Competitive Analysis | Competitor landscape, differentiation |
| 5 | Product Roadmap | Feature timeline, milestones |
| 6 | PRD | Functional requirements, user stories |
| 7 | Requirements | Technical specifications |
| 8 | Architecture | System design, data flow |
| 9 | Explainability | AI transparency, evaluation metrics |
| 10 | User Journey | UX flows, interaction design |
| 11 | Privacy & Compliance | IRDAI, DPDP Act 2023 |
| 12 | Testing Strategy | QA plans, coverage |
| 13 | Go-to-Market | Launch plan, pricing |
| 14 | Risk Register | Risk identification, mitigation |
| 15 | Ethical AI | AI ethics, bias mitigation |
| 16 | Metrics & KPIs | Success metrics, North Star |

| Endpoint | Method | Purpose |
|---|---|---|
| `/` | GET | Serve frontend UI |
| `/upload` | POST | Upload policy document |
| `/analyze` | POST | Analyze uploaded policy |
| `/rag/ask` | POST | Ask question via RAG |
| `/rag/stats` | GET | RAG service statistics |
| `/tts` | POST | Generate audio summary |
| `/translate` | POST | Hindi ↔ English translation |
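The table names the endpoints but not their request bodies. As a hypothetical sketch using only the standard library, a client for `/rag/ask` could look like the code below. The JSON field name `question` and the response shape are assumptions. Check them against the route handlers in `app/routes/` or FastAPI's interactive docs, which FastAPI serves at `/docs` by default. The long timeout is an arbitrary allowance for local LLM latency.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"


def build_rag_ask_request(question: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST /rag/ask request with a JSON body (field name is an assumption)."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/rag/ask",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def rag_ask(question: str, base_url: str = BASE_URL, timeout: float = 120.0) -> dict:
    """Send the request to a running server and decode the JSON response."""
    with urllib.request.urlopen(build_rag_ask_request(question, base_url), timeout=timeout) as resp:
        return json.load(resp)
```

With the server running, `rag_ask("What is the waiting period for pre-existing diseases?")` would return the decoded response body.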

- Validate Core Concept: Does local AI analysis provide value?

- Test Accuracy: Are summaries and insights accurate?

- User Experience: Is the Material 3 interface intuitive?

- Guardrails Effectiveness: Are safety checks working?

- HITL Integration: How often is expert review needed?

- Market Fit: Is there demand for privacy-first insurance analysis?

Vikas Sahani

Product Manager & Main Product Lead

- 📧 Email: vikassahani17@gmail.com

- 💼 LinkedIn: linkedin.com/in/vikas-sahani-727420358

- 🐙 GitHub: @VIKAS9793

Engineering Team:

- Kiro (AI Co-Engineering Assistant)

- Antigravity (AI Co-Assistant & Engineering Support)

Contributions are welcome! Please read CONTRIBUTING.md before submitting.

```bash
# Quick start for contributors
git checkout -b feature/AmazingFeature
git commit -m 'feat: add AmazingFeature'
git push origin feature/AmazingFeature
# Open a Pull Request
```

This project is licensed under the MIT License - see LICENSE for details.

- IRDAI for insurance regulatory guidelines

- Ollama for local LLM infrastructure

- Google for Gemma models

- ChromaDB for vector database

- FastAPI community for excellent framework

- AI4Bharat for Indic Parler-TTS Hindi speech synthesis

- HuggingFace for model hosting and transformers library

If you use SaralPolicy or its components in your research, please cite the following works behind its Indic Parler-TTS speech synthesis:

```bibtex
@inproceedings{sankar25_interspeech,
  title     = {{Rasmalai : Resources for Adaptive Speech Modeling in IndiAn Languages with Accents and Intonations}},
  author    = {Ashwin Sankar and Yoach Lacombe and Sherry Thomas and Praveen {Srinivasa Varadhan} and Sanchit Gandhi and Mitesh M. Khapra},
  year      = {2025},
  booktitle = {{Interspeech 2025}},
  pages     = {4128--4132},
  doi       = {10.21437/Interspeech.2025-2758},
}

@misc{lacombe-etal-2024-parler-tts,
  author       = {Yoach Lacombe and Vaibhav Srivastav and Sanchit Gandhi},
  title        = {Parler-TTS},
  year         = {2024},
  publisher    = {GitHub},
  howpublished = {\url{https://github.com/huggingface/parler-tts}}
}

@misc{lyth2024natural,
  title         = {Natural language guidance of high-fidelity text-to-speech with synthetic annotations},
  author        = {Dan Lyth and Simon King},
  year          = {2024},
  eprint        = {2402.01912},
  archivePrefix = {arXiv},
}
```

Model: ai4bharat/indic-parler-tts (Apache 2.0, 0.9B parameters)

- 📧 Email: vikassahani17@gmail.com

- 🐛 Issues: GitHub Issues

- 💼 LinkedIn: Vikas Sahani

Found a security issue? Please email vikassahani17@gmail.com instead of using the public issue tracker.

Made with ❤️ to make insurance understandable for everyone

"Because everyone deserves to understand what they're paying for."

This is a POC/demo system. For production deployment, see the Production Engineering Evaluation and Remediation Plan.