This project is a full-stack web application built with the MERN stack (MongoDB, Express.js, React.js, Node.js) that lets users upload lecture videos (MP4 format, typically around 60 minutes long). The application automatically transcribes each video, splits the transcript into 5-minute segments, and generates objective multiple-choice questions (MCQs) for each segment using a locally hosted Large Language Model (LLM). Because the models run locally, the application can work offline and lecture content is never sent to a third-party AI service.

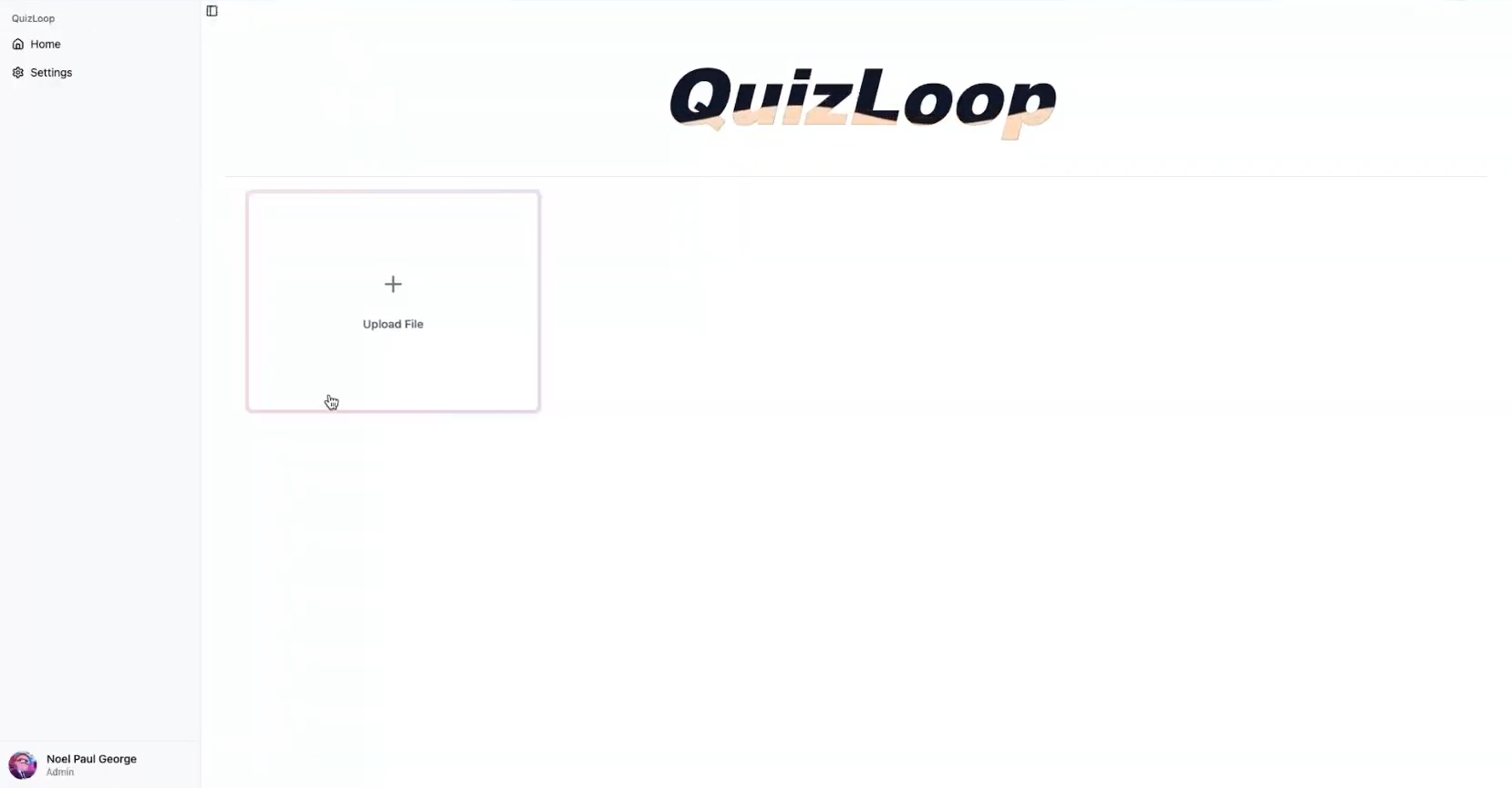

- Responsive web interface for uploading MP4 video files.

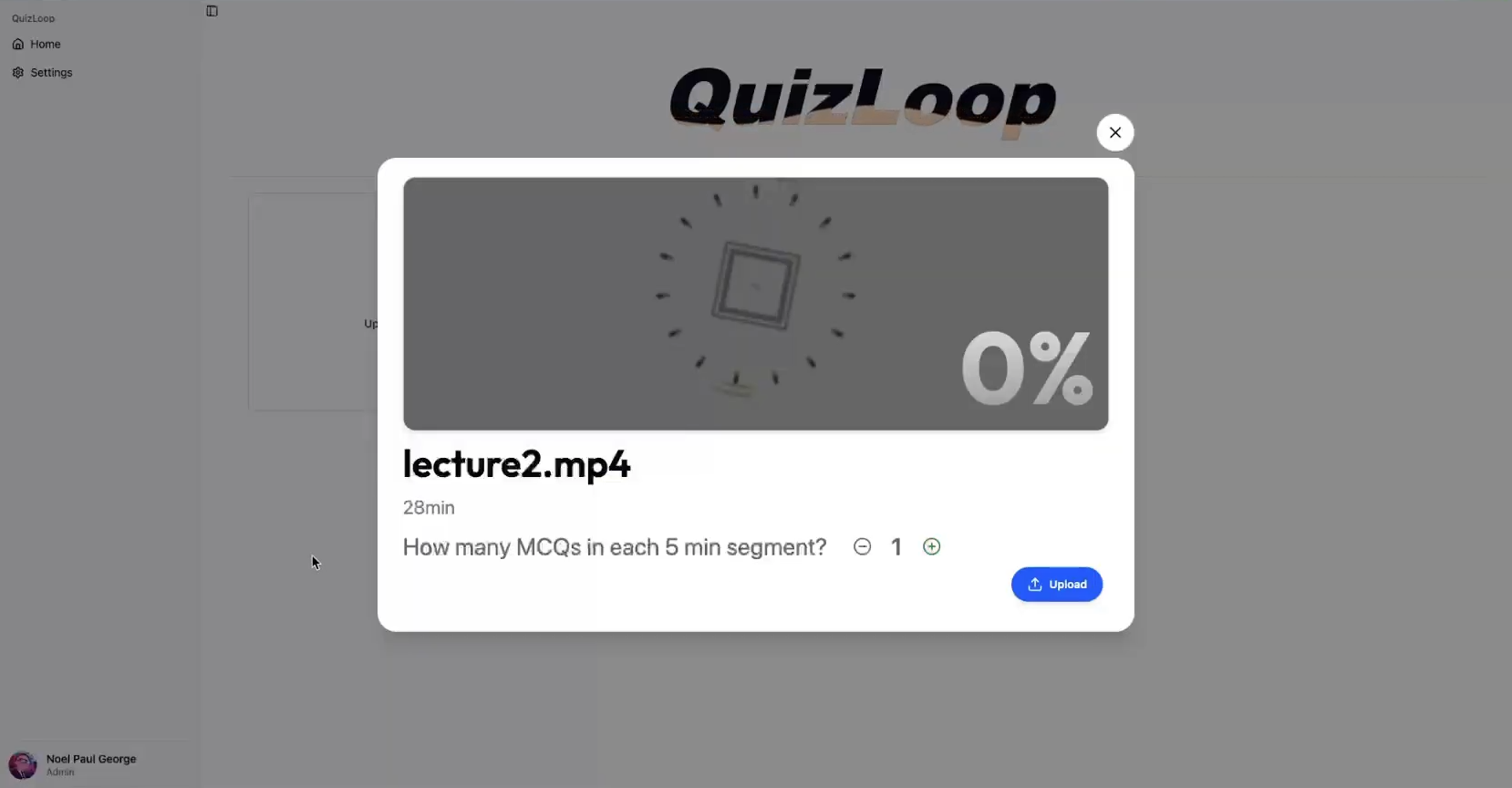

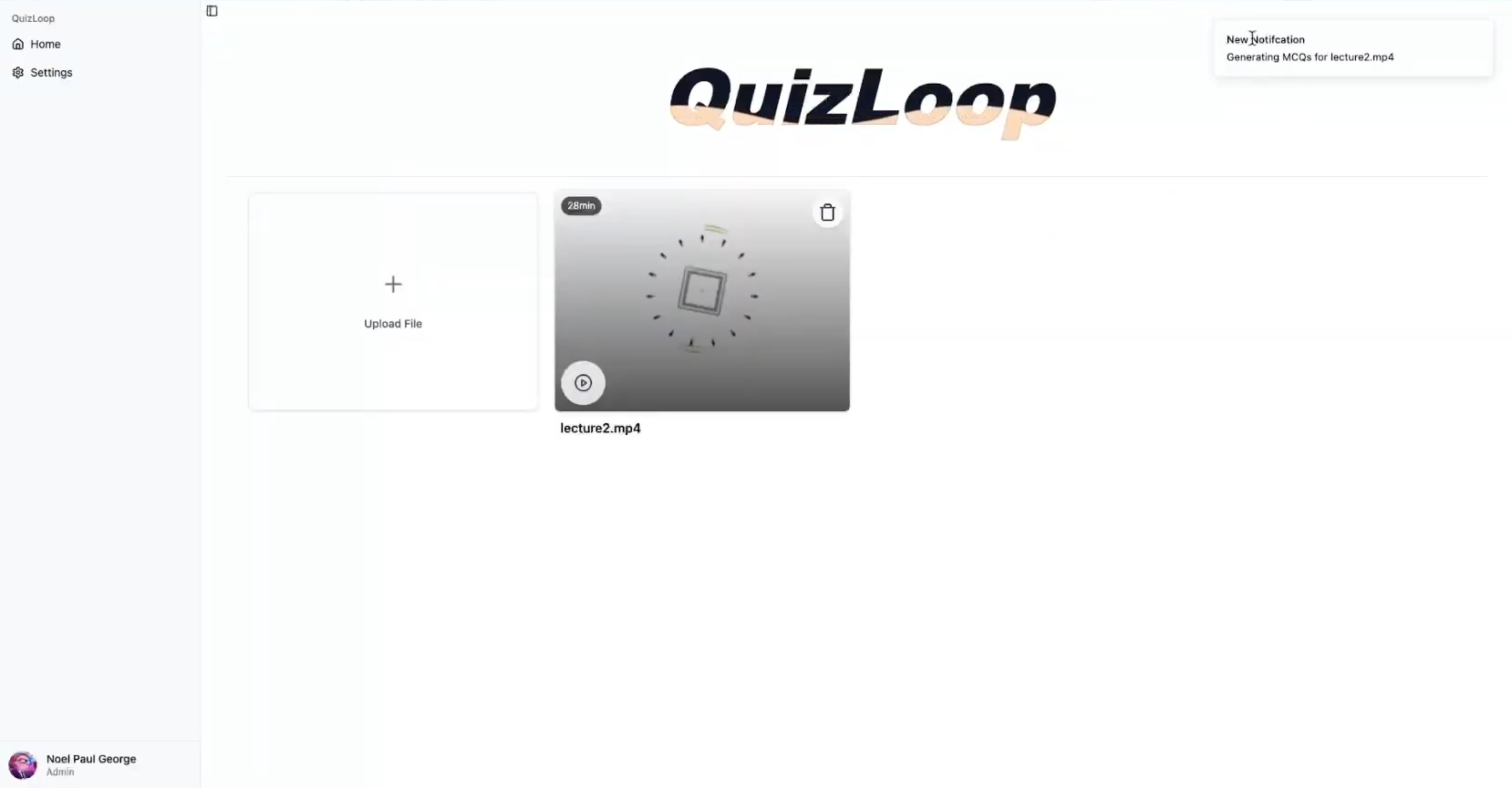

- Real-time progress bar and status updates for transcription and question generation.

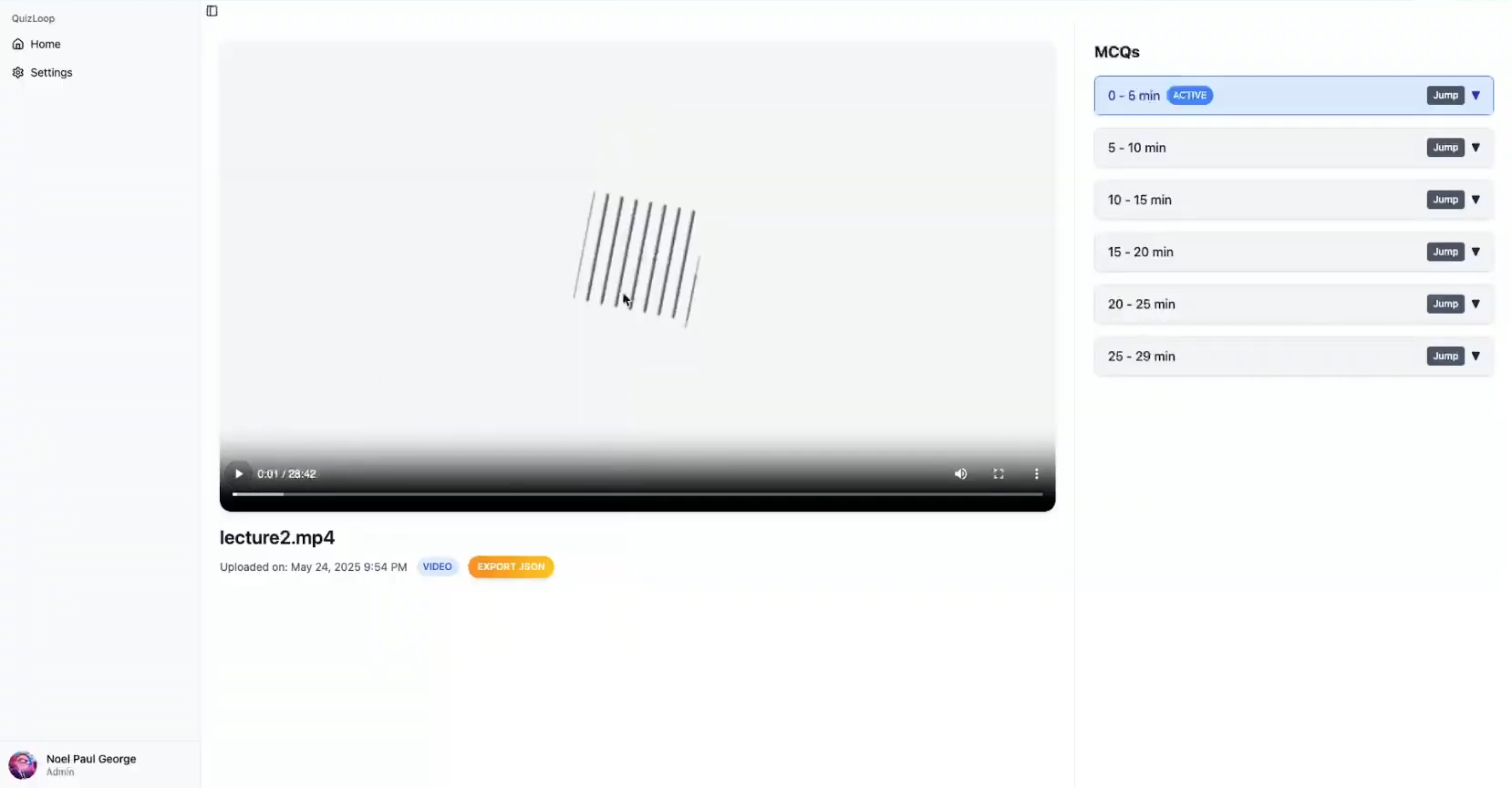

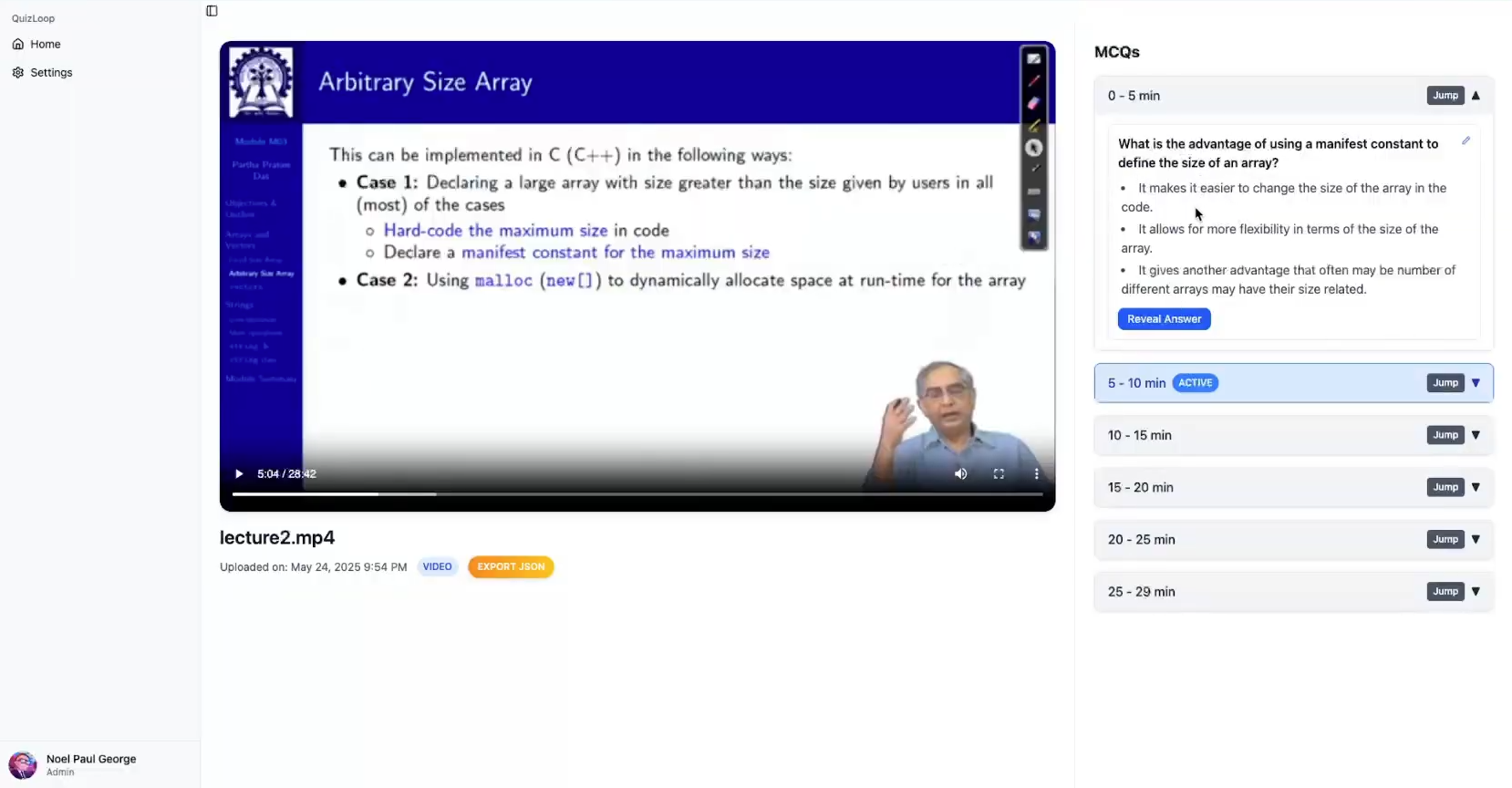

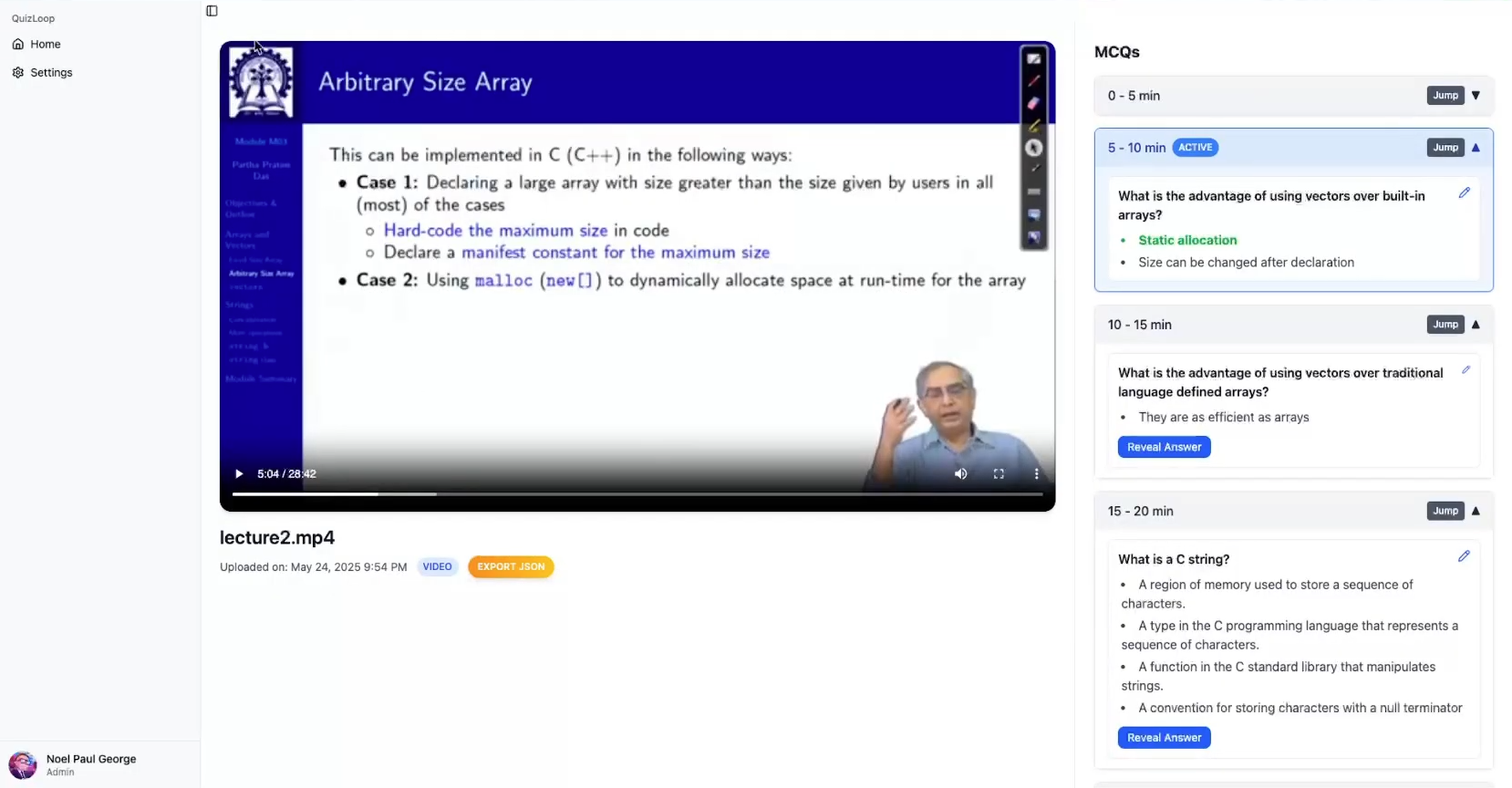

- Display of the transcript, segmented into 5-minute intervals.

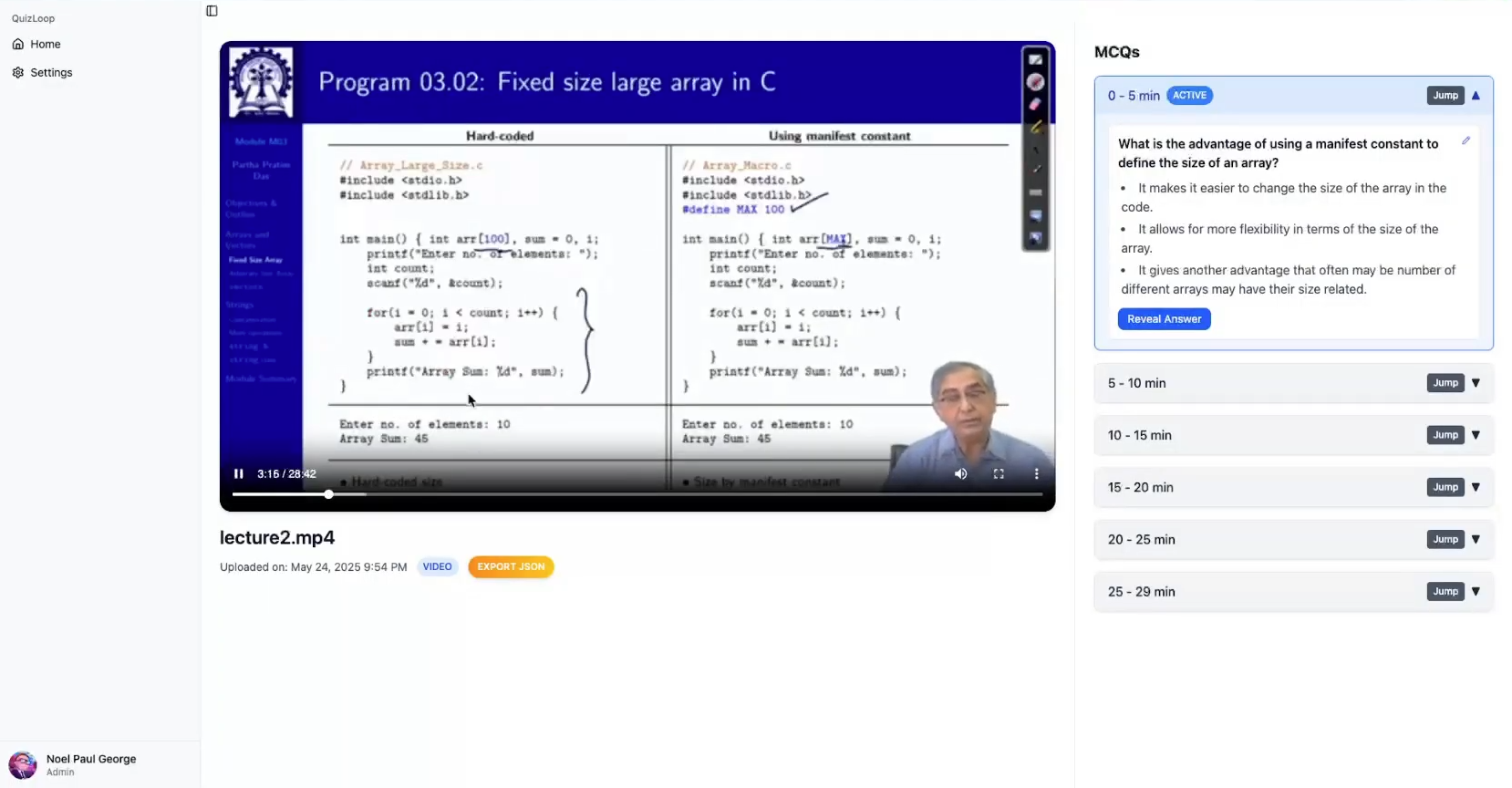

- Auto-generated MCQs for each transcript segment.

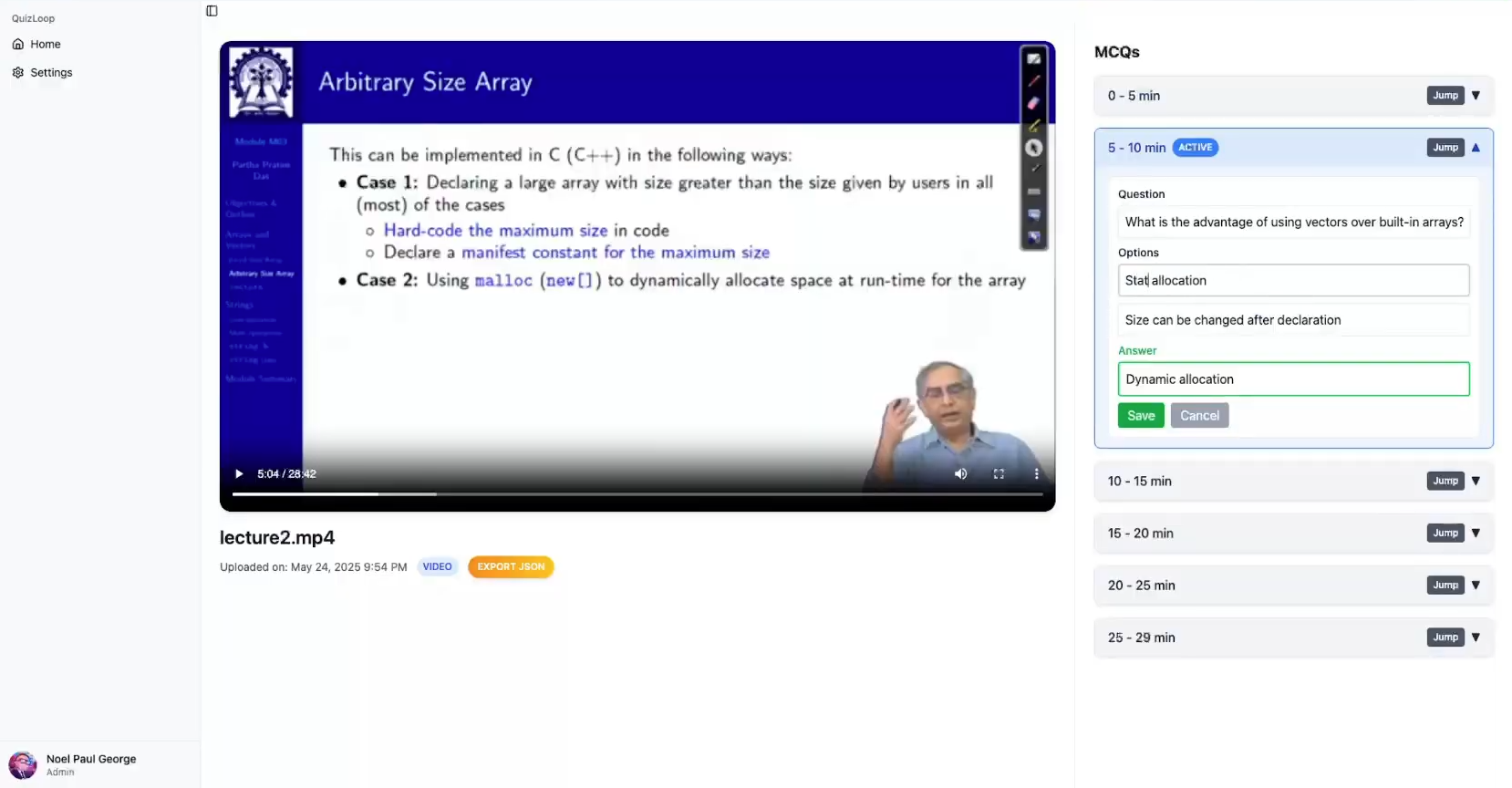

- Options to review, edit, and export generated questions.

- Secure handling of video uploads, with files stored temporarily on the local machine.

- Integration with a Python-based transcription service (Whisper).

- Transcript segmentation into fixed 5-minute chunks.

- Communication with a locally hosted LLM service for MCQ generation via REST API.

- Storage of questions in MongoDB.

- MongoDB stores metadata for each uploaded video.

- MongoDB stores the objective-type questions generated for each segment.

- Transcription using a locally running Whisper model.

- Question generation using a locally deployed LLM (e.g., LLaMA, Mistral, GPT4All).

- Python REST API backend (FastAPI) hosting the AI models.

- Prompt engineering to generate relevant, randomized MCQs for each transcript segment.
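The fixed 5-minute segmentation step can be sketched in TypeScript as follows. This is a minimal illustration, not the project's implementation: it assumes the transcription service returns timestamped segments shaped like the `segments` array produced by openai-whisper's `transcribe()` (`start` and `end` in seconds, plus `text`). The names `WhisperSegment`, `TranscriptChunk`, and `segmentTranscript` are hypothetical.

```typescript
// Minimal sketch: group Whisper-style timestamped segments into fixed
// 5-minute windows. Assumes segments look like the "segments" entries of
// openai-whisper's transcribe() result (start/end in seconds, plus text).
interface WhisperSegment {
  start: number; // seconds from the beginning of the video
  end: number;
  text: string;
}

interface TranscriptChunk {
  index: number;    // 0-based window number
  startSec: number; // window start (inclusive)
  endSec: number;   // window end (exclusive)
  text: string;     // text of every segment that starts inside the window
}

const CHUNK_SECONDS = 5 * 60;

function segmentTranscript(
  segments: WhisperSegment[],
  chunkSeconds: number = CHUNK_SECONDS,
): TranscriptChunk[] {
  // Process segments in time order without mutating the caller's array.
  const sorted = segments.slice().sort((a, b) => a.start - b.start);
  const chunks: TranscriptChunk[] = [];
  for (const seg of sorted) {
    // A segment belongs to the window containing its start time, so a
    // sentence that straddles a boundary stays whole.
    const index = Math.floor(seg.start / chunkSeconds);
    const text = seg.text.trim();
    const last = chunks[chunks.length - 1];
    if (last !== undefined && last.index === index) {
      last.text = last.text.length > 0 ? `${last.text} ${text}` : text;
    } else {
      chunks.push({
        index,
        startSec: index * chunkSeconds,
        endSec: (index + 1) * chunkSeconds,
        text,
      });
    }
  }
  return chunks;
}
```

Assigning each Whisper segment to the window that contains its start time avoids cutting a sentence in half at a 5-minute boundary, at the cost of a window's text occasionally running a few seconds past its nominal end. Windows with no speech at all produce no chunk.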

| Layer | Technology / Tools |
|---|---|
| Frontend | React.js, TypeScript, ShadCN, React Query |
| Backend | Node.js, Express.js, TypeScript, routing-controllers |
| Database | MongoDB (official Node.js driver) |
| AI/ML Services | Whisper (local transcription), LLM via Ollama, FastAPI (REST API) |
| Storage | Local file system (for videos) |
| Messaging | RabbitMQ |
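RabbitMQ handles background task processing, so long-running transcription and question generation can run outside the upload request. The README does not define a message format; the sketch below shows one hypothetical job message for such a work queue, with the `PipelineJob` fields, the stage names, and the `encodeJob`/`decodeJob` helpers all being assumptions.

```typescript
// Hypothetical message format for a RabbitMQ work queue; the README does not
// define one, so the field and stage names below are assumptions.
type JobStage = "transcribe" | "generate_mcqs";

interface PipelineJob {
  videoId: string;       // id of the video's metadata document in MongoDB
  stage: JobStage;       // which background step the worker should run
  segmentIndex?: number; // 0-based 5-minute segment; only for MCQ jobs
}

// Serialize a job to a JSON string (wrap in Buffer.from(...) before publishing).
function encodeJob(job: PipelineJob): string {
  return JSON.stringify(job);
}

// Parse and validate a message body; throws on malformed input so the
// consumer can reject the message instead of running a broken job.
function decodeJob(raw: string): PipelineJob {
  const data = JSON.parse(raw);
  if (typeof data !== "object" || data === null) {
    throw new Error("job must be a JSON object");
  }
  if (typeof data.videoId !== "string" || data.videoId.length === 0) {
    throw new Error("job.videoId must be a non-empty string");
  }
  if (data.stage === "transcribe") {
    return { videoId: data.videoId, stage: "transcribe" };
  }
  if (data.stage === "generate_mcqs") {
    const idx = data.segmentIndex;
    if (typeof idx !== "number" || idx < 0 || Math.floor(idx) !== idx) {
      throw new Error("job.segmentIndex must be a non-negative integer");
    }
    return { videoId: data.videoId, stage: "generate_mcqs", segmentIndex: idx };
  }
  throw new Error(`unknown job stage: ${String(data.stage)}`);
}
```

With the amqplib client, a producer could publish a job with `channel.sendToQueue(queueName, Buffer.from(encodeJob(job)), { persistent: true })`, and a consumer could call `decodeJob(msg.content.toString())` before dispatching to the transcription or MCQ step and acknowledging the message. The queue name is left to the deployment.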

- User uploads a lecture video via the React frontend.

- The backend receives the video and stores it locally.

- The video is sent to the Python transcription service (Whisper), which converts speech to text.

- The full transcript is segmented into 5-minute intervals.

- Each segment is sent to the locally hosted LLM service, which generates multiple-choice questions.

- Transcripts and generated MCQs are saved in MongoDB.

- The frontend displays the segmented transcripts and MCQs, letting users review, edit, and export the questions.
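The MCQ-generation step can be sketched as two pure helpers: one builds a request body for Ollama's `/api/generate` endpoint, and one validates the JSON the model sends back. The prompt wording, the `Mcq` shape, the default model name `mistral`, and the function names are assumptions for illustration; in this project the request may go through the FastAPI service rather than straight to Ollama.

```typescript
// Sketch only: build a request body for Ollama's /api/generate endpoint and
// validate the model's JSON reply. The prompt wording, the Mcq shape, and
// the default model name are assumptions, not the project's actual code.
interface Mcq {
  question: string;
  options: string[];   // exactly four answer choices
  answerIndex: number; // position of the correct choice in `options`
}

interface OllamaGenerateRequest {
  model: string;
  prompt: string;
  stream: boolean; // false: Ollama replies with one JSON object
  format: "json";  // ask Ollama to constrain the output to valid JSON
}

function buildMcqRequest(
  segmentText: string,
  count = 3,
  model = "mistral",
): OllamaGenerateRequest {
  const prompt = [
    `Write ${count} multiple-choice questions based only on the lecture excerpt below.`,
    'Reply with JSON: {"questions": [{"question": string, "options": [four strings], "answerIndex": 0-3}]}.',
    "Vary the position of the correct answer across questions.",
    "",
    "Excerpt:",
    segmentText,
  ].join("\n");
  return { model, prompt, stream: false, format: "json" };
}

// Type guard: keeps only well-formed questions with a valid answer index.
function isValidMcq(q: unknown): q is Mcq {
  if (typeof q !== "object" || q === null) return false;
  const c = q as Partial<Mcq>;
  return (
    typeof c.question === "string" &&
    c.question.trim().length > 0 &&
    Array.isArray(c.options) &&
    c.options.length === 4 &&
    c.options.every((o) => typeof o === "string") &&
    typeof c.answerIndex === "number" &&
    Math.floor(c.answerIndex) === c.answerIndex &&
    c.answerIndex >= 0 &&
    c.answerIndex < 4
  );
}

// Parses the text found in the "response" field of Ollama's reply.
function parseMcqs(responseText: string): Mcq[] {
  const parsed: unknown = JSON.parse(responseText);
  if (typeof parsed !== "object" || parsed === null) {
    throw new Error("model output is not a JSON object");
  }
  const questions = (parsed as { questions?: unknown }).questions;
  if (!Array.isArray(questions)) {
    throw new Error('model output has no "questions" array');
  }
  return questions.filter(isValidMcq);
}
```

To use it, POST the request body as JSON to a local Ollama server (by default `http://localhost:11434/api/generate`) and pass the `response` field of the reply to `parseMcqs`. Malformed questions are dropped individually rather than failing the whole segment, since local models occasionally return incomplete items.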

- Node.js (v16+ recommended)

- MongoDB (running locally or remotely)

- Python 3.8+ with the required AI/ML dependencies installed (Whisper, FastAPI), plus the LLM models served through Ollama

- RabbitMQ (for messaging and background task processing)

- FFmpeg (Whisper uses it to decode the audio track of the uploaded MP4 files)

With ❤️ Open-Source.