Home

Welcome to the Photo-SynthSeg wiki!

Project GitHub link: here. This repository is forked from the original SynthSeg.

Branches on the repo:

- master - Original SynthSeg

- lesion-SynthSeg - Same as above

- fixed-spacing - Branch for SynthSeg on Photos (with fixed spacing)

- variable-spacing - Branch for SynthSeg on Photos (with variable spacing)

NOTE: You will almost always work with the photo-{fixed|variable}-spacing branch.

Path on calico: /space/calico/1/users/Harsha/SynthSeg

Please refer to the setup script. TODO

Python Environment:

- Option 1. Build your own using the requirements file.

- Option 2. Source my environment:

source /space/calico_001/users/Harsha/venvs/synthseg-venv/bin/activate

NOTE (important): Before running this project, please add the project directory to the PYTHONPATH environment variable as follows:

export PYTHONPATH=/path/to/Photo-SynthSeg/in/your/space
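As a quick sanity check after the export, the minimal sketch below tests whether a directory is listed in PYTHONPATH. The helper name `is_on_pythonpath` is hypothetical and not part of the repository, and the path in the example is the same placeholder used above.

```python
import os


def is_on_pythonpath(project_dir, env=None):
    """Return True if project_dir is one of the PYTHONPATH entries.

    Hypothetical helper for illustration; not part of Photo-SynthSeg.
    """
    env = os.environ if env is None else env
    # PYTHONPATH is a list of directories joined by os.pathsep (":" on Linux).
    entries = [p for p in env.get("PYTHONPATH", "").split(os.pathsep) if p]
    target = os.path.realpath(project_dir)
    return any(os.path.realpath(p) == target for p in entries)


if __name__ == "__main__":
    # Placeholder path from the export line above; replace with your own clone.
    print(is_on_pythonpath("/path/to/Photo-SynthSeg/in/your/space"))
```

If this prints False in the shell where you plan to run the project, the export has not taken effect in that shell.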

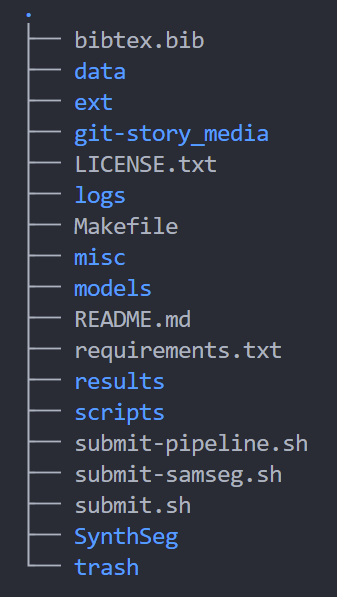

Directory Structure: (followed by a description of contents)

data - Data for Photo-SynthSeg. For more information, please refer to instructions.txt in the same folder.

- SynthSeg_label_maps_manual_auto_photos - Segmentation maps (whole brain) for training Photo-SynthSeg

- SynthSeg_label_maps_manual_auto_photos_noCerebellumOrBrainstem - Segmentation maps (whole brain) without cerebellum and brainstem

- SynthSeg_label_maps_manual_auto_photos_noCerebellumOrBrainstem_lh - Segmentation maps (hemi brain) without cerebellum and brainstem

- SynthSeg_param_files_manual_auto_photos - (see below)

- SynthSeg_param_files_manual_auto_photos_noCerebellumOrBrainstem

  - generation_charm_choroid_lesions_lh.npy - generation labels (i.e., the set of unique labels in the segmentation)

  - generation_classes_charm_choroid_lesions_gm_lh.npy - generation classes (which group some CSF classes, hippocampus with the amygdala, etc.)

  - segmentation_new_charm_choroid_lesions_lh.npy - segmentation labels (which ignore some labels, such as the CSF around the skull, which is label 24). Note that the number of neutral labels is 7.

  - topo_classes_lh.npy - For more information, please refer to `~/Photo-SynthSeg/scripts/tutorials/2-generation-explained.py`

- SynthSeg_param_files_manual_auto_photos_noCerebellumOrBrainstem_lh

- SynthSeg_test_data

- uw_photo - Please refer to the photo-reconstruction wiki
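To make the relationship between the parameter files more concrete, here is a toy sketch. It assumes the usual SynthSeg convention, as I understand it, that the generation-class and segmentation-label arrays are aligned entry by entry with the generation labels, and that an ignored label is written as 0 in the segmentation labels; please verify this against the tutorial script referenced above. The values are made up for illustration and are not the contents of the actual .npy files (which would normally be read with `numpy.load`), and `summarize_label_files` is a hypothetical helper.

```python
from collections import defaultdict

# Toy values, NOT the contents of the real .npy files.
# In the FreeSurfer lookup table, 24 is CSF and 17/18 are the left
# hippocampus and left amygdala.
generation_labels = [0, 24, 2, 3, 17, 18]    # unique labels in the label maps
generation_classes = [0, 1, 2, 3, 4, 4]      # 17 and 18 share one class
segmentation_labels = [0, 0, 2, 3, 17, 18]   # label 24 is ignored (set to 0)


def summarize_label_files(gen_labels, gen_classes, seg_labels):
    """Check that the arrays are aligned; report ignored and grouped labels."""
    if not (len(gen_labels) == len(gen_classes) == len(seg_labels)):
        raise ValueError("all three arrays must have the same length")

    # Non-background generation labels that are mapped to 0 for segmentation.
    ignored = [g for g, s in zip(gen_labels, seg_labels) if g != 0 and s == 0]

    # Generation labels that share a generation class.
    by_class = defaultdict(list)
    for label, cls in zip(gen_labels, gen_classes):
        by_class[cls].append(label)
    grouped = {c: ls for c, ls in by_class.items() if len(ls) > 1}

    return ignored, grouped


ignored, grouped = summarize_label_files(
    generation_labels, generation_classes, segmentation_labels
)
print("ignored:", ignored)   # ignored: [24]
print("grouped:", grouped)   # grouped: {4: [17, 18]}
```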

ext - External modules used in the project

logs - SLURM logs for different recipes/targets run on the cluster

misc - Miscellaneous scripts written for the project

models - Collection of all Photo-SynthSeg models

- jei-model - Eugenio trained a fixed-spacing (4 mm) model. I'd safely ignore it for now.

- SynthSeg.h5 - Model from the master branch (irrelevant to the photos project)

- models-2022 - Various models trained to segment 3D volumes reconstructed from photographs

  NOTE: `S{spacing}R{run_id}` means a model trained with a 'fixed-spacing' of `spacing` mm. `VS{run-id}` means 'variable-spacing'. The difference between `VS` and `VS-accordion` is that in `VS` the spacing within a volume is fixed but randomized between batches, whereas in `VS-accordion` the spacing is randomized both within a volume and between batches. If the model name ends with an `n`, it means that `n_neutral_labels` is set to some integer. `no-flip` means `flipping` has been set to False.

  NOTE (very important): Continuing the above description, please use models with an `n` and no `no-flip`.
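The naming convention above can be sketched as a small parser. This is only an illustration: the example names (`S04R01`, `VS01n-accordion`, and so on), the placement of the `-accordion` and `-no-flip` markers, and the reading of the digits after `S` as whole millimetres are all assumptions, and both helper functions are hypothetical rather than part of the repository.

```python
import re


def describe_model_name(name):
    """Best-effort reading of a model name under the convention above.

    The exact placement of the optional markers is an assumption.
    """
    info = {
        "spacing_mode": None,   # "fixed" or "variable"
        "spacing_mm": None,     # only encoded for fixed-spacing models
        "run_id": None,
        "accordion": "-accordion" in name,
        "no_flip": "-no-flip" in name,
        "neutral_labels_set": False,
    }
    # Remove the optional markers before looking for the trailing "n".
    core = name.replace("-accordion", "").replace("-no-flip", "")
    if core.endswith("n"):
        info["neutral_labels_set"] = True
        core = core[:-1]

    fixed = re.fullmatch(r"S(\d+)R(\d+)", core)
    variable = re.fullmatch(r"VS(\d+)", core)
    if fixed:
        info.update(spacing_mode="fixed",
                    spacing_mm=int(fixed.group(1)),
                    run_id=fixed.group(2))
    elif variable:
        info.update(spacing_mode="variable", run_id=variable.group(1))
    return info


def is_recommended(name):
    """True for names with an 'n' and without 'no-flip' (see the NOTE above)."""
    info = describe_model_name(name)
    return info["neutral_labels_set"] and not info["no_flip"]


if __name__ == "__main__":
    for example in ["S04R01", "VS01n", "VS01n-accordion", "VS01n-no-flip"]:
        print(example, is_recommended(example))
```

Names that do not match either pattern are returned with `spacing_mode` left as None, so it is worth checking the actual folder names in models-2022 before relying on this.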

results - Collection of all results

- 20220328 - Ignore

- 20220407 - Ignore

- 20220411 - This is the latest/final set of results used in the paper. Performed automated segmentations on UW reconstructions using the models mentioned above. The script used to generate this result is `~/scripts/hg_dice_scripts/new.py`.

- mgh_inference_20230411 - Performed automated segmentations on MGH reconstructions using the final selected models. The script used to generate this result is `~/scripts/mgh_inference.py` (in the `fixed-spacing` branch).

- new-recons - Reconstructions on UW data using updated reconstruction code (aka with interleaved training)

- old-recons - Same as above but before finalizing the reconstruction script. This folder can be safely ignored.

scripts - Collection of Python scripts used in the project

- commands - Training, prediction and a few other scripts written by Benjamin and modified by @hvgazula.

- hg_dice_scripts - Scripts written by @hvgazula for post-processing on reconstructions and segmentations

  1. dice_avg_plots.ipynb - Notebook to generate the boxplots of Dice scores in the paper

  2. dice_avg_plots.py - Python script to generate boxplots of Dice scores across all models

  3. dice_calculations.py - script to calculate and store Dice scores

  4. dice_config.py - configuration file for postprocessing

  5. dice_gather.py - collects all required files (reconstructions and segmentations) as needed for postprocessing

  6. dice_mri_utils.py - enables registration or conversion of the 'space' of one volume to another for Dice calculations

  7. dice_plots.py - plotting (as the name suggests)

  8. dice_stats.py

  9. dice_utils.py - common utilities across all other scripts in the current directory

  10. dice_volumes.py - calculate/store volumes

  11. new.py - postprocessing script (important)

  12. old.py - same as above but can be safely ignored

  13. uw_config.py - some configuration parameters for postprocessing

- tutorials - Written by Benjamin. This could be a starting point for understanding the modeling approach behind SynthSeg

submit-pipeline.sh - Please refer to ~/scripts/hg_dice_scripts/new.py or the Makefile for more information

submit-samseg.sh - Shell script to run SAMSEG. This was necessary because SAMSEG was run through FreeSurfer (but in a very roundabout way)

submit.sh - SLURM submission script to run Make recipes as needed
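For readers unfamiliar with the metric behind the hg_dice_scripts folder: the Dice score for a label is twice the number of voxels where both segmentations carry that label, divided by the total number of voxels carrying it in either one. The following is a minimal sketch on flattened label lists. It is not the code in dice_calculations.py, which operates on volumes and may differ in many details; the function name and the toy data are hypothetical.

```python
def dice_score(reference, prediction, label):
    """Dice overlap for one label between two equally sized label sequences."""
    if len(reference) != len(prediction):
        raise ValueError("reference and prediction must have the same length")

    ref = [v == label for v in reference]
    pred = [v == label for v in prediction]
    intersection = sum(r and p for r, p in zip(ref, pred))
    total = sum(ref) + sum(pred)
    if total == 0:
        # The label is absent from both inputs, so the score is undefined.
        return float("nan")
    return 2.0 * intersection / total


if __name__ == "__main__":
    # Toy flattened "volumes" with made-up labels.
    manual = [0, 2, 2, 3, 3, 3]
    automated = [0, 2, 3, 3, 3, 0]
    for lab in (2, 3):
        print(lab, round(dice_score(manual, automated, lab), 3))
```

Returning NaN for a label missing from both inputs is a design choice made here so that such labels can be excluded from averages; other conventions (for example, returning 1.0) are also in use.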