

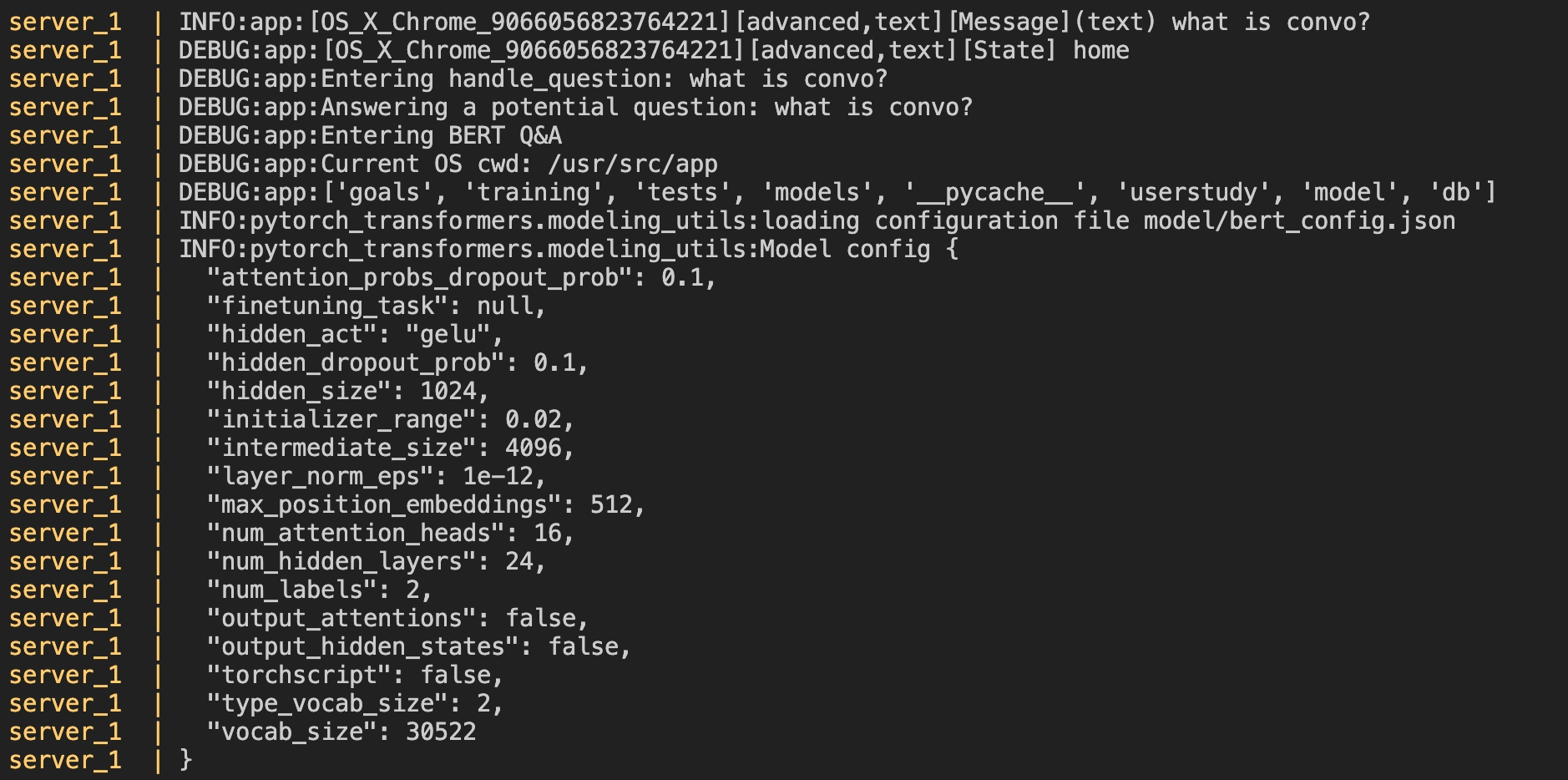

When I integrate code identical to my local Q&A scripts into the answer function of the QuestionAnswer class in question.py, I keep running into this issue after I type in a question (e.g. "What is Convo?"):

server_1 | INFO:pytorch_transformers.tokenization_utils:Didn't find file model/added_tokens.json. We won't load it.

server_1 | INFO:pytorch_transformers.tokenization_utils:Didn't find file model/special_tokens_map.json. We won't load it.

I have a folder called model inside backend/server, where I place the large training files needed by BERT and Transformers. This path setup is the same as the one I use locally in my standalone scripts, so I can't figure out why these messages appear once I integrate the code into Convo (set up locally in Docker). Furthermore, I have confirmed that I am in the right path and can access the model subdirectory (you can see that the file model/bert_config.json is accessible):

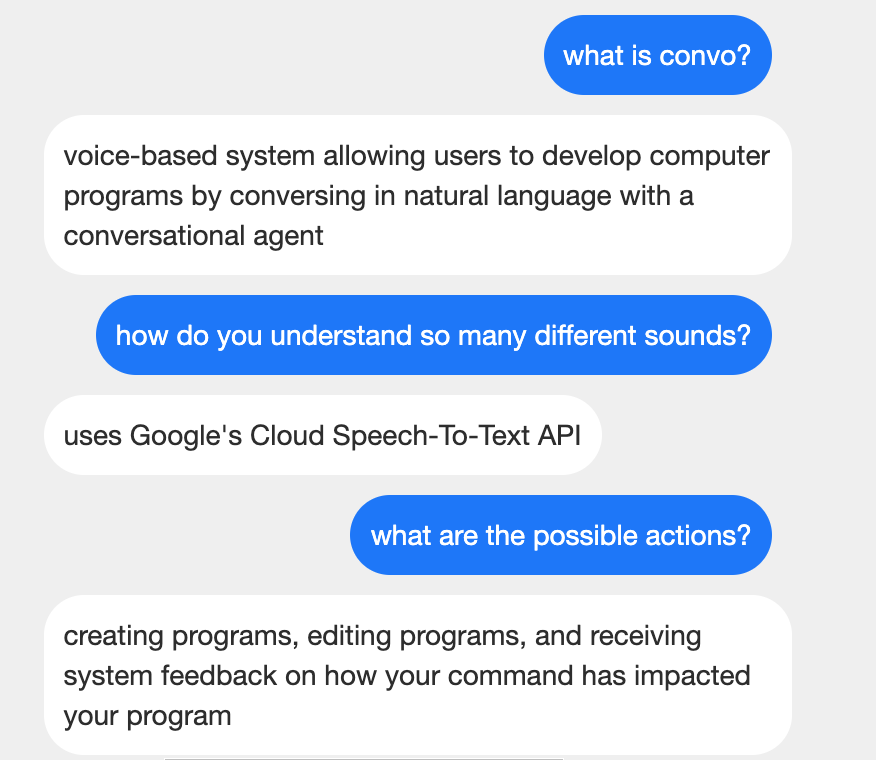
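For anyone trying to reproduce this, here is a minimal sketch of the kind of check I mean. It only reports which files exist under the model folder as seen from inside the container. The helper name `report_model_dir` and the file list are my own illustration (taken from the log lines above), not part of Convo or pytorch_transformers, and the list is not the full set of files the library looks for.

```python
from pathlib import Path

# Files named in the log output above, plus the config file that loads fine.
# Illustrative list only; the library may look for other files as well.
EXPECTED_FILES = [
    "bert_config.json",
    "added_tokens.json",
    "special_tokens_map.json",
]


def report_model_dir(model_dir):
    """Return {filename: True/False} for each expected file under model_dir."""
    base = Path(model_dir).resolve()
    return {name: (base / name).is_file() for name in EXPECTED_FILES}


if __name__ == "__main__":
    # A relative path such as "model" is resolved against the current working
    # directory, which may differ between a local run and the container.
    print("cwd:", Path.cwd())
    for name, exists in report_model_dir("model").items():
        print(f"{name}: {'found' if exists else 'missing'}")
```

Running this both locally and inside the server container should show whether the two environments see the same set of files.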

To confirm that my code logic works locally, I tried an experiment: I ran my separate standalone Q&A Flask server, exposed it publicly through an ngrok URL, and sent POST requests to that URL from Convo. This worked.
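Roughly, the request from Convo to the standalone server can be sketched as below. The `/answer` route, the `question` JSON key, and the example ngrok URL are placeholders I made up for illustration; the real route and payload depend on how the Flask server is written.

```python
import json
import urllib.request


def build_question_request(base_url, question):
    """Build a JSON POST request for a hypothetical /answer endpoint."""
    payload = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/answer",  # hypothetical route
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder URL; substitute the address that ngrok prints on startup.
    req = build_question_request("https://example.ngrok.io", "What is Convo?")
    with urllib.request.urlopen(req, timeout=30) as resp:
        print(resp.read().decode("utf-8"))
```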

So I am guessing the issue might be related to Docker, even though my path and directory setup looks correct. Any ideas for how to resolve it?