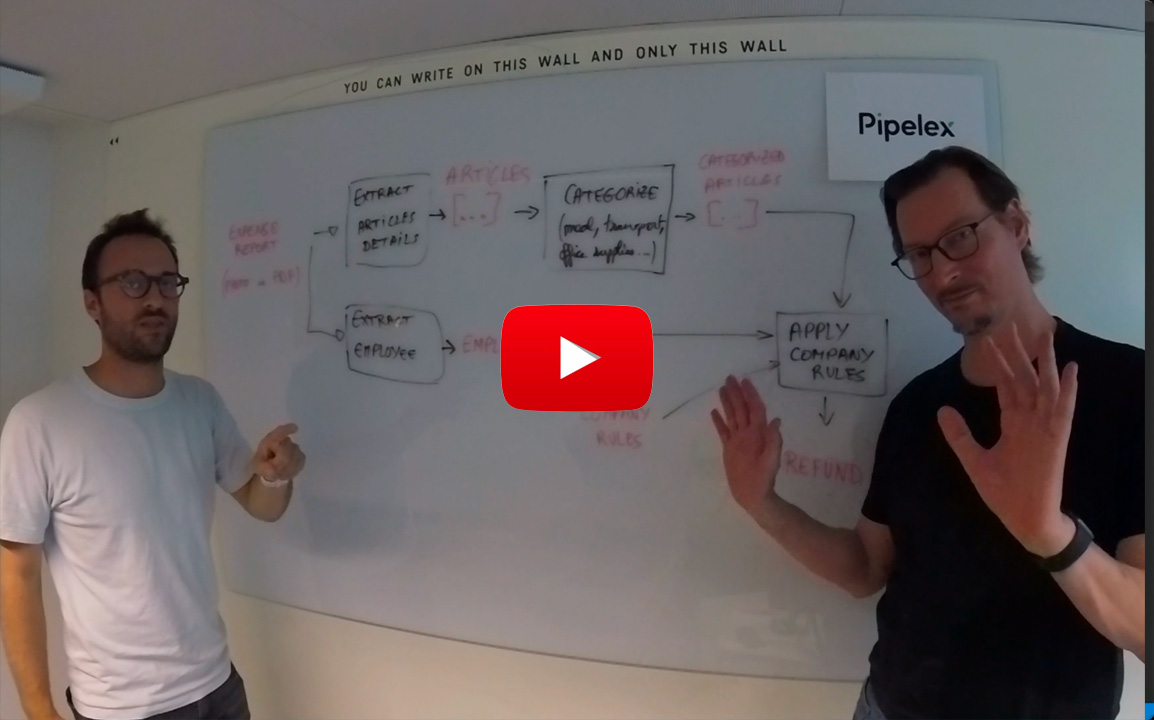

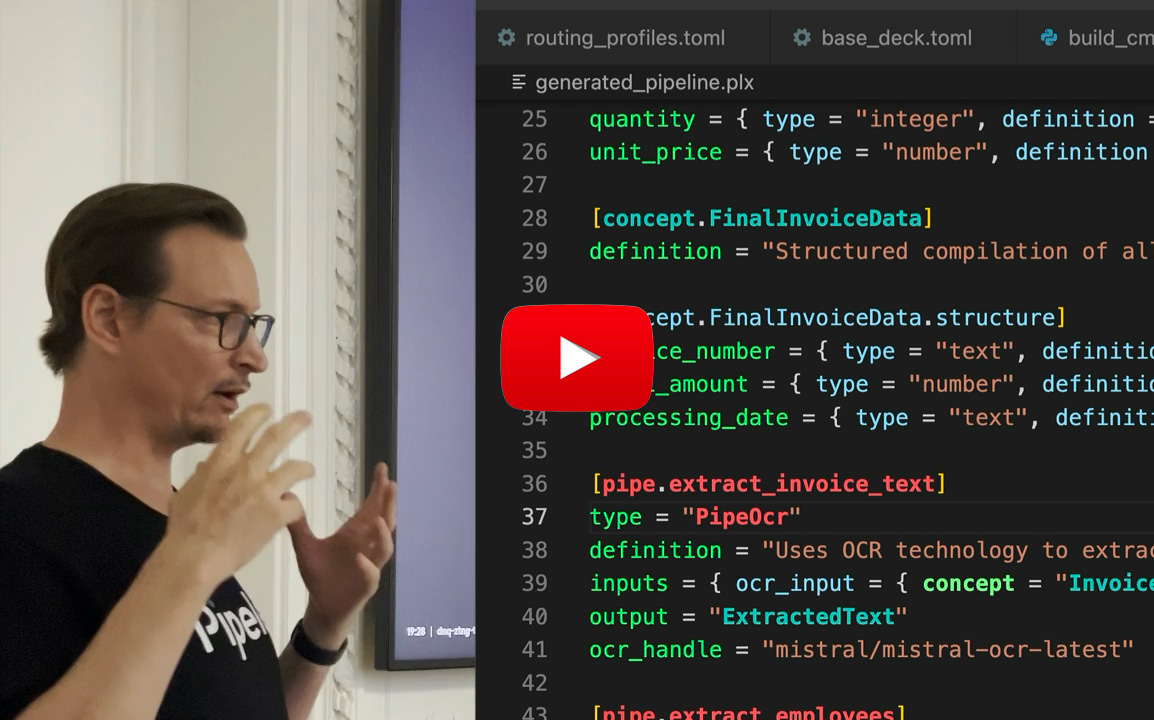

- From Whiteboard to AI Workflow in less than 5 minutes with no hands (2025-07)

+ From Whiteboard to AI Method in less than 5 minutes with no hands (2025-07)

|

- The AI workflow that writes an AI workflow in 64 seconds (2025-09)

+ The AI method that writes an AI method in 64 seconds (2025-09)

@@ -323,21 +325,21 @@ asyncio.run(run_pipeline())

## 💡 What is Pipelex?

-Pipelex is an open-source language that enables you to build and run **repeatable AI workflows**. Instead of cramming everything into one complex prompt, you break tasks into focused steps, each pipe handling one clear transformation.

+Pipelex is an open-source language that enables you to build and run **repeatable AI methods**. Instead of cramming everything into one complex prompt, you break tasks into focused steps, each pipe handling one clear transformation.

-Each pipe processes information using **Concepts** (typing with meaning) to ensure your pipelines make sense. The Pipelex language (`.plx` files) is simple and human-readable, even for non-technical users. Each step can be structured and validated, giving you the reliability of software with the intelligence of AI.

+Each pipe processes information using **Concepts** (typing with meaning) to ensure your pipelines make sense. The Pipelex language (`.mthds` files) is simple and human-readable, even for non-technical users. Each step can be structured and validated, giving you the reliability of software with the intelligence of AI.

## 📖 Next Steps

**Learn More:**

-- [Design and Run Pipelines](https://docs.pipelex.com/pre-release/home/6-build-reliable-ai-workflows/pipes/) - Complete guide with examples

-- [Kick off a Pipeline Project](https://docs.pipelex.com/pre-release/home/6-build-reliable-ai-workflows/kick-off-a-pipelex-workflow-project/) - Deep dive into Pipelex

+- [Design and Run Methods](https://docs.pipelex.com/pre-release/home/6-build-reliable-ai-workflows/pipes/) - Complete guide with examples

+- [Kick off a Method Project](https://docs.pipelex.com/pre-release/home/6-build-reliable-ai-workflows/kick-off-a-methods-project/) - Deep dive into Pipelex

- [Configure AI Providers](https://docs.pipelex.com/pre-release/home/5-setup/configure-ai-providers/) - Set up AI providers and models

## 🔧 IDE Extension

-We **highly** recommend installing our extension for `.plx` files into your IDE. You can find it in the [Open VSX Registry](https://open-vsx.org/extension/Pipelex/pipelex). It's coming soon to VS Code marketplace too. If you're using Cursor, Windsurf or another VS Code fork, you can search for it directly in your extensions tab.

+We **highly** recommend installing our extension for `.mthds` files into your IDE. You can find it in the [Open VSX Registry](https://open-vsx.org/extension/Pipelex/pipelex). It's coming soon to the VS Code marketplace too. If you're using Cursor, Windsurf or another VS Code fork, you can search for it directly in your extensions tab.

## 📚 Examples & Cookbook

diff --git a/docs/home/1-releases/chicago.md b/docs/home/1-releases/chicago.md

index 82e11df81..f9a8128d6 100644

--- a/docs/home/1-releases/chicago.md

+++ b/docs/home/1-releases/chicago.md

@@ -4,7 +4,7 @@ title: "Chicago Release"

# Pipelex v0.18.0 "Chicago"

-**The AI workflow framework that just works.**

+**The AI method framework that just works.**

## Why Pipelex

@@ -12,19 +12,19 @@ Pipelex eliminates the complexity of building AI-powered applications. Instead o

- **One framework** for prompts, pipelines, and structured outputs

- **One API key** for dozens of AI models

-- **One workflow** from prototype to production

+- **One method** from prototype to production

---

## A Major Milestone

-Three months after our first public launch in San Francisco, Pipelex reaches a new level of maturity with the "Chicago" release (currently in beta-test). This version delivers on our core promise: **enabling every developer to build AI workflows that are reliable, flexible, and production-ready**.

+Three months after our first public launch in San Francisco, Pipelex reaches a new level of maturity with the "Chicago" release (currently in beta-test). This version delivers on our core promise: **enabling every developer to build AI methods that are reliable, flexible, and production-ready**.

Version 0.18.0 represents our most significant release to date, addressing the three priorities that emerged from real-world usage:

- **Universal model access** — one API key for all leading AI models

- **State-of-the-art document extraction** — deployable anywhere

-- **Visual pipeline inspection** — full transparency into your workflows

+- **Visual pipeline inspection** — full transparency into your methods

---

@@ -91,7 +91,7 @@ Broad support for open-source AI:

### Developer Experience

-- **Pure PLX Workflows** — Inline concept structures now support nested concepts, making Pipelex fully usable with just `.plx` files and the CLI—no Python code required

+- **Pure MTHDS Methods** — Inline concept structures now support nested concepts, making Pipelex fully usable with just `.mthds` files and the CLI—no Python code required

- **Deep Integration Options** — Generate Pydantic BaseModels from your declarative concepts for full IDE autocomplete, type checking, and validation (TypeScript Zod structures coming soon)

- **PipeCompose Construct Mode** — Build `StructuredContent` objects deterministically without an LLM, composing outputs from working memory variables, fixed values, templates, and nested structures

- **Cloud Storage for Artifacts** — Store generated images and extracted pages on AWS S3 or Google Cloud Storage with public or signed URLs

@@ -112,7 +112,7 @@ Then run `pipelex init` to configure your environment and obtain your Gateway AP

---

-*Ready to build AI workflows that just work?*

+*Ready to build AI methods that just work?*

[Join the Waitlist](https://go.pipelex.com/waitlist){ .md-button .md-button--primary }

[Documentation](https://docs.pipelex.com/pre-release){ .md-button }

diff --git a/docs/home/10-advanced-customizations/observer-provider-injection.md b/docs/home/10-advanced-customizations/observer-provider-injection.md

index f277ef067..eaeb4b21b 100644

--- a/docs/home/10-advanced-customizations/observer-provider-injection.md

+++ b/docs/home/10-advanced-customizations/observer-provider-injection.md

@@ -216,4 +216,4 @@ def setup_pipelex():

return pipelex_instance

```

-The observer system provides powerful insights into your pipeline execution patterns and is essential for monitoring, debugging, and optimizing your Pipelex workflows.

\ No newline at end of file

+The observer system provides powerful insights into your pipeline execution patterns and is essential for monitoring, debugging, and optimizing your Pipelex methods.

\ No newline at end of file

diff --git a/docs/home/2-get-started/pipe-builder.md b/docs/home/2-get-started/pipe-builder.md

index 48e81d3b8..8fa377486 100644

--- a/docs/home/2-get-started/pipe-builder.md

+++ b/docs/home/2-get-started/pipe-builder.md

@@ -1,5 +1,5 @@

---

-title: "Generate Workflows with Pipe Builder"

+title: "Generate Methods with Pipe Builder"

---

@@ -18,9 +18,9 @@ During the second step of the initialization, we recommand, for a quick start, t

If you want to bring your own API keys, see [Configure AI Providers](../../home/5-setup/configure-ai-providers.md) for details.

-# Generate workflows with Pipe Builder

+# Generate methods with Pipe Builder

-The fastest way to create production-ready AI workflows is with the Pipe Builder. Just describe what you want, and Pipelex generates complete, validated pipelines.

+The fastest way to create production-ready AI methods is with the Pipe Builder. Just describe what you want, and Pipelex generates complete, validated pipelines.

```bash

pipelex build pipe "Take a CV and Job offer in PDF, analyze if they match and generate 5 questions for the interview"

@@ -28,12 +28,12 @@ pipelex build pipe "Take a CV and Job offer in PDF, analyze if they match and ge

The pipe builder generates three files in a numbered directory (e.g., `results/pipeline_01/`):

-1. **`bundle.plx`** - Complete production-ready script in our Pipelex language with domain definition, concepts, and pipe steps

+1. **`bundle.mthds`** - Complete production-ready script in our Pipelex language with domain definition, concepts, and pipe steps

2. **`inputs.json`** - Template describing the **mandatory** inputs for running the pipe

3. **`run_{pipe_code}.py`** - Ready-to-run Python script that you can customize and execute

!!! tip "Pipe Builder Requirements"

- For now, the pipe builder requires access to **Claude 4.5 Sonnet**, either through Pipelex Inference, or using your own key through Anthropic, Amazon Bedrock or BlackboxAI. Don't hesitate to join our [Discord](https://go.pipelex.com/discord) to get a key, otherwise, you can also create the workflows yourself, following our [documentation guide](./write-workflows-manually.md).

+ For now, the pipe builder requires access to **Claude 4.5 Sonnet**, either through Pipelex Inference, or using your own key through Anthropic, Amazon Bedrock or BlackboxAI. Don't hesitate to join our [Discord](https://go.pipelex.com/discord) to get a key; otherwise, you can also write the methods yourself by following our [documentation guide](./write-methods-manually.md).

!!! info "Learn More"

Want to understand how the Pipe Builder works under the hood? See [Pipe Builder Deep Dive](../9-tools/pipe-builder.md) for the full explanation of its multi-step generation process.

@@ -43,18 +43,18 @@ The pipe builder generates three files in a numbered directory (e.g., `results/p

**Option 1: CLI**

```bash

-pipelex run results/cv_match.plx --inputs inputs.json

+pipelex run results/cv_match.mthds --inputs inputs.json

```

The `--inputs` file should be a JSON dictionary where keys are input variable names and values are the input data. Learn more on how to provide the inputs of a pipe: [Providing Inputs to Pipelines](../../home/6-build-reliable-ai-workflows/pipes/provide-inputs.md)
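A minimal sketch of producing and checking such a file with Python's standard `json` module follows. The input names `cv` and `job_offer` and their string values are hypothetical placeholders; use the variable names from the `inputs.json` template that the Pipe Builder generates, and whatever value format your pipe expects.

```python
import json
from pathlib import Path


def write_inputs(path: Path, inputs: dict) -> None:
    """Write a pipe's inputs as a JSON dictionary keyed by input variable name."""
    if not all(isinstance(key, str) for key in inputs):
        raise TypeError("input variable names must be strings")
    path.write_text(json.dumps(inputs, indent=2), encoding="utf-8")


def read_inputs(path: Path) -> dict:
    """Load the inputs file and check that it is a JSON object, not a list or scalar."""
    data = json.loads(path.read_text(encoding="utf-8"))
    if not isinstance(data, dict):
        raise ValueError("the --inputs file must contain a JSON dictionary")
    return data


# Hypothetical input names and values, for illustration only.
example_inputs = {
    "cv": "path/or/url/to/cv.pdf",
    "job_offer": "path/or/url/to/job_offer.pdf",
}
```

Checking the top-level type early gives a clearer error than a failure deep inside the pipe run.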

**Option 2: Python**

-This requires having the `.plx` file or your pipe inside the directory where the Python file is located.

+This requires the `.mthds` file that defines your pipe to be inside the directory where the Python file is located.

```python

import json

-from pipelex.pipeline.execute import execute_pipeline

+from pipelex.pipeline.runner import PipelexRunner

from pipelex.pipelex import Pipelex

# Initialize Pipelex

@@ -65,10 +65,12 @@ with open("inputs.json", "r", encoding="utf-8") as json_file:

inputs = json.load(json_file)

# Execute the pipeline

-pipe_output = await execute_pipeline(

+runner = PipelexRunner()

+response = await runner.execute_pipeline(

pipe_code="analyze_cv_and_prepare_interview",

inputs=inputs

)

+pipe_output = response.pipe_output

print(pipe_output.main_stuff)

@@ -76,7 +78,7 @@ print(pipe_output.main_stuff)

## IDE Support

-We **highly** recommend installing our own extension for PLX files into your IDE of choice. You can find it in the [Open VSX Registry](https://open-vsx.org/extension/Pipelex/pipelex) and download it directly using [this link](https://open-vsx.org/api/Pipelex/pipelex/0.2.1/file/Pipelex.pipelex-0.2.1.vsix). It's coming soon to the VS Code marketplace too and if you are using Cursor, Windsurf or another VS Code fork, you can search for it directly in your extensions tab.

+We **highly** recommend installing our own extension for MTHDS files into your IDE of choice. You can find it in the [Open VSX Registry](https://open-vsx.org/extension/Pipelex/pipelex) and download it directly using [this link](https://open-vsx.org/api/Pipelex/pipelex/0.2.1/file/Pipelex.pipelex-0.2.1.vsix). It's coming soon to the VS Code marketplace too. If you are using Cursor, Windsurf or another VS Code fork, you can search for it directly in your extensions tab.

## Examples

@@ -86,12 +88,12 @@ We **highly** recommend installing our own extension for PLX files into your IDE

## Next Steps

-Now that you know how to generate workflows with the Pipe Builder, explore these resources:

+Now that you know how to generate methods with the Pipe Builder, explore these resources:

-**Learn how to Write Workflows yourself**

+**Learn how to Write Methods yourself**

-- [:material-pencil: Write Workflows Manually](./write-workflows-manually.md){ .md-button .md-button--primary }

-- [:material-book-open-variant: Build Reliable AI Workflows](../6-build-reliable-ai-workflows/kick-off-a-pipelex-workflow-project.md){ .md-button .md-button--primary }

+- [:material-pencil: Write Methods Manually](./write-methods-manually.md){ .md-button .md-button--primary }

+- [:material-book-open-variant: Build Reliable AI Methods](../6-build-reliable-ai-workflows/kick-off-a-methods-project.md){ .md-button .md-button--primary }

**Explore Examples:**

diff --git a/docs/home/2-get-started/write-workflows-manually.md b/docs/home/2-get-started/write-methods-manually.md

similarity index 90%

rename from docs/home/2-get-started/write-workflows-manually.md

rename to docs/home/2-get-started/write-methods-manually.md

index 478983b92..525cbe0e8 100644

--- a/docs/home/2-get-started/write-workflows-manually.md

+++ b/docs/home/2-get-started/write-methods-manually.md

@@ -1,16 +1,16 @@

-# Writing Workflows

+# Writing Methods

-Ready to dive deeper? This section shows you how to manually create pipelines and understand the `.plx` language.

+Ready to dive deeper? This section shows you how to manually create pipelines and understand the `.mthds` language.

-!!! tip "Prefer Automated Workflow Generation?"

- If you have access to **Claude 4.5 Sonnet** (via Pipelex Inference, Anthropic, Amazon Bedrock, or BlackBox AI), you can use our **pipe builder** to generate workflows from natural language descriptions. See the [Pipe Builder guide](./pipe-builder.md) to learn how to use `pipelex build pipe` commands. This tutorial is for those who want to write workflows manually or understand the `.plx` language in depth.

+!!! tip "Prefer Automated Method Generation?"

+ If you have access to **Claude 4.5 Sonnet** (via Pipelex Inference, Anthropic, Amazon Bedrock, or BlackBox AI), you can use our **pipe builder** to generate methods from natural language descriptions. See the [Pipe Builder guide](./pipe-builder.md) to learn how to use `pipelex build pipe` commands. This tutorial is for those who want to write methods manually or understand the `.mthds` language in depth.

## Write Your First Pipeline

Let's build a **character generator** to understand the basics.

-Create a `.plx` file anywhere in your project (we recommend a `pipelines` directory):

+Create a `.mthds` file anywhere in your project (we recommend a `pipelines` directory):

-`character.plx`

+`character.mthds`

```toml

domain = "characters" # domain of existence of your pipe

@@ -41,15 +41,17 @@ Create a Python file to execute the pipeline:

`character.py`

```python

-from pipelex.pipeline.execute import execute_pipeline

+from pipelex.pipeline.runner import PipelexRunner

from pipelex.pipelex import Pipelex

# Initialize pipelex to load your pipeline libraries

Pipelex.make()

-pipe_output = await execute_pipeline(

+runner = PipelexRunner()

+response = await runner.execute_pipeline(

pipe_code="create_character",

)

+pipe_output = response.pipe_output

print(pipe_output.main_stuff_as_str) # `main_stuff_as_str` is allowed here because the output is a `TextContent`

```
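The snippets on this page use top-level `await`, which works in Jupyter or in the `python -m asyncio` REPL but is a syntax error in a plain `.py` script. A minimal sketch of the usual fix is to move the calls into an `async def` and start it with `asyncio.run()`. To keep the sketch runnable without Pipelex installed, `FakeRunner` and its helper classes are hypothetical stand-ins that only mimic the call shape shown above (`execute_pipeline(pipe_code=...)` returning an object with `.pipe_output`); in a real script, call `Pipelex.make()` first and pass a `PipelexRunner()` instead.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class FakePipeOutput:
    """Hypothetical stand-in for a pipe output; only carries a text result."""
    main_stuff_as_str: str


@dataclass
class FakeResponse:
    """Hypothetical stand-in for the response object; exposes `.pipe_output`."""
    pipe_output: FakePipeOutput


class FakeRunner:
    """Hypothetical stand-in for PipelexRunner so this sketch runs without Pipelex."""

    async def execute_pipeline(self, pipe_code: str, inputs: Optional[dict] = None) -> FakeResponse:
        await asyncio.sleep(0)  # simulate an awaitable call
        count = len(inputs or {})
        return FakeResponse(FakePipeOutput(f"ran {pipe_code} with {count} inputs"))


async def main(runner: Any) -> str:
    # Every `await` lives inside an async function instead of at module level.
    response = await runner.execute_pipeline(pipe_code="create_character")
    pipe_output = response.pipe_output
    return pipe_output.main_stuff_as_str


if __name__ == "__main__":
    # In a real script: Pipelex.make(), then asyncio.run(main(PipelexRunner())).
    print(asyncio.run(main(FakeRunner())))
```

Passing the runner into `main` is only a convenience for this sketch: the same entry point can run against the stand-in or the real runner.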

@@ -70,9 +72,9 @@ As you might notice, this is plain text, and nothing is structured. Now we are g

Let's create a rigorously structured `Character` object instead of plain text. We need to create the concept `Character`. The concept names MUST be in PascalCase. [Learn more about defining concepts](../6-build-reliable-ai-workflows/concepts/define_your_concepts.md)

-### Option 1: Define the Structure in your `.plx` file

+### Option 1: Define the Structure in your `.mthds` file

-Define structures directly in your `.plx` file:

+Define structures directly in your `.mthds` file:

```toml

[concept.Character] # Declare the concept by giving it a name.

@@ -89,7 +91,7 @@ description = "A description of the character" # Fourth attribute: "descrip

Specify that the output of your PipeLLM is a `Character` object:

-`characters.plx`

+`characters.mthds`

```toml

domain = "characters"

@@ -146,7 +148,7 @@ Learn more in [Inline Structures](../6-build-reliable-ai-workflows/concepts/inli

Specify that the output of your PipeLLM is a `Character` object:

-`characters.plx`

+`characters.mthds`

```toml

domain = "characters"

@@ -229,7 +231,7 @@ Learn more about Jinja in the [PipeLLM documentation](../../home/6-build-reliabl

`run_pipe.py`

```python

-from pipelex.pipeline.execute import execute_pipeline

+from pipelex.pipeline.runner import PipelexRunner

from pipelex.pipelex import Pipelex

from character_model import CharacterMetadata

@@ -250,10 +252,12 @@ inputs = {

}

# Run the pipe with loaded inputs

-pipe_output = await execute_pipeline(

+runner = PipelexRunner()

+response = await runner.execute_pipeline(

pipe_code="extract_character_advances",

inputs=inputs,

)

+pipe_output = response.pipe_output

# Get the result as a properly typed instance

print(pipe_output)

@@ -284,7 +288,7 @@ class CharacterMetadata(StructuredContent):

`run_pipe.py`

```python

-from pipelex.pipeline.execute import execute_pipeline

+from pipelex.pipeline.runner import PipelexRunner

from pipelex.pipelex import Pipelex

from character_model import CharacterMetadata

@@ -305,10 +309,12 @@ inputs = {

}

# Run the pipe with loaded inputs

-pipe_output = await execute_pipeline(

+runner = PipelexRunner()

+response = await runner.execute_pipeline(

pipe_code="extract_character_advances",

inputs=inputs,

)

+pipe_output = response.pipe_output

# Get the result as a properly typed instance

extracted_metadata = pipe_output.main_stuff_as(content_type=CharacterMetadata)

@@ -325,12 +331,12 @@ Now that you understand the basics, explore more:

**Learn More about the PipeLLM:**

-- [LLM Configuration: play with the models](../../home/6-build-reliable-ai-workflows/configure-ai-llm-to-optimize-workflows.md) - Optimize cost and quality

+- [LLM Configuration: play with the models](../../home/6-build-reliable-ai-workflows/configure-ai-llm-to-optimize-methods.md) - Optimize cost and quality

- [Full configuration of the PipeLLM](../../home/6-build-reliable-ai-workflows/pipes/pipe-operators/PipeLLM.md)

**Learn more about Pipelex (domains, project structure, best practices...)**

-- [Build Reliable AI Workflows](../../home/6-build-reliable-ai-workflows/kick-off-a-pipelex-workflow-project.md) - Deep dive into pipeline design

+- [Build Reliable AI Methods](../../home/6-build-reliable-ai-workflows/kick-off-a-methods-project.md) - Deep dive into pipeline design

- [Cookbook Examples](../../home/4-cookbook-examples/index.md) - Real-world examples and patterns

**Learn More about the other pipes**

diff --git a/docs/home/3-understand-pipelex/language-spec-v0-1-0.md b/docs/home/3-understand-pipelex/language-spec-v0-1-0.md

index 7f6b319aa..f26e523ba 100644

--- a/docs/home/3-understand-pipelex/language-spec-v0-1-0.md

+++ b/docs/home/3-understand-pipelex/language-spec-v0-1-0.md

@@ -1,28 +1,28 @@

-# Pipelex (PLX) – Declarative AI Workflow Spec (v0.1.0)

+# Pipelex (MTHDS) – Declarative AI Method Spec (v0.1.0)

-**Build deterministic, repeatable AI workflows using declarative TOML syntax.**

+**Build deterministic, repeatable AI methods using declarative TOML syntax.**

-The Pipelex Language (PLX) uses a TOML-based syntax to define deterministic, repeatable AI workflows. This specification documents version 0.1.0 of the language and establishes the canonical way to declare domains, concepts, and pipes inside `.plx` bundles.

+The Pipelex Language (MTHDS) uses a TOML-based syntax to define deterministic, repeatable AI methods. This specification documents version 0.1.0 of the language and establishes the canonical way to declare domains, concepts, and pipes inside `.mthds` bundles.

---

## Core Idea

-Pipelex is a workflow declaration language that gets interpreted at runtime, we already have a Python runtime (see [github.com/pipelex/pipelex](https://github.com/pipelex/pipelex)).

+Pipelex is a method declaration language that is interpreted at runtime; a Python runtime already exists (see [github.com/pipelex/pipelex](https://github.com/pipelex/pipelex)).

-Pipelex lets you declare **what** your AI workflow should accomplish and **how** to execute it step by step. Each `.plx` file represents a bundle where you define:

+Pipelex lets you declare **what** your AI method should accomplish and **how** to execute it step by step. Each `.mthds` file represents a bundle where you define:

- **Concepts** (PascalCase): the structured or unstructured data flowing through your system

-- **Pipes** (snake_case): operations or orchestrators that define your workflow

+- **Pipes** (snake_case): operations or orchestrators that define your method

- **Domain** (named in snake_case): the topic or field of work this bundle is about

-Write once in `.plx` files. Run anywhere. Get the same results every time.

+Write once in `.mthds` files. Run anywhere. Get the same results every time.

---

## Semantics

-Pipelex workflows are **declarative and deterministic**:

+Pipelex methods are **declarative and deterministic**:

- Pipes are evaluated based on their dependencies, not declaration order

- Controllers explicitly define execution flow (sequential, parallel, or conditional)

@@ -35,7 +35,7 @@ All concepts are strongly typed. All pipes declare their inputs and outputs. The

**Guarantees:**

-- Deterministic workflow execution and outputs

+- Deterministic method execution and outputs

- Strong typing with validation before runtime

**Not supported in v0.1.0:**

@@ -48,9 +48,9 @@ All concepts are strongly typed. All pipes declare their inputs and outputs. The

---

-## Complete Example: CV Job Matching Workflow

+## Complete Example: CV Job Matching Method

-This workflow analyses candidate CVs against job offer requirements to determine match quality.

+This method analyses candidate CVs against job offer requirements to determine match quality.

```toml

domain = "cv_job_matching"

@@ -180,5 +180,5 @@ Evaluate how well this candidate matches the job requirements.

- Processes all candidate CVs in parallel (batch processing)

- Each CV is extracted and analyzed against the structured job requirements using an LLM

- Produces a scored match analysis for each candidate with strengths, weaknesses, and hiring recommendations

-- Demonstrates sequential orchestration, parallel processing, nested workflows, and strong typing

+- Demonstrates sequential orchestration, parallel processing, nested methods, and strong typing
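The Semantics section states that pipes are evaluated according to their dependencies, not their declaration order. The sketch below illustrates that general idea with Python's `graphlib` (standard library since Python 3.9): pipes declared in an arbitrary order are sorted so each one runs after the pipes it depends on, and pipes with no mutual dependency are grouped into stages that could run in parallel. The pipe names are hypothetical, loosely modeled on the CV matching example; this is not the Pipelex runtime's scheduler, where controllers define the flow explicitly.

```python
from graphlib import TopologicalSorter

# Hypothetical pipes, declared in an arbitrary order, each mapped to the pipes it depends on.
DECLARED = {
    "generate_questions": {"analyze_match"},
    "analyze_match": {"extract_cv", "extract_job_offer"},
    "extract_job_offer": set(),
    "extract_cv": set(),
}


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Order pipes so every pipe comes after the pipes it depends on."""
    return list(TopologicalSorter(dependencies).static_order())


def parallel_stages(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group pipes into stages; pipes in the same stage do not depend on each other."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # also raises CycleError if the dependencies contain a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages
```

With `DECLARED`, the two extraction pipes form the first stage, followed by `analyze_match` and then `generate_questions`, regardless of the order in which they were written.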

diff --git a/docs/home/3-understand-pipelex/pipelex-paradigm/index.md b/docs/home/3-understand-pipelex/pipelex-paradigm/index.md

index 80ca7b913..0754ec490 100644

--- a/docs/home/3-understand-pipelex/pipelex-paradigm/index.md

+++ b/docs/home/3-understand-pipelex/pipelex-paradigm/index.md

@@ -1,12 +1,12 @@

# The Pipelex Paradigm

-Pipelex is an **open-source Python framework** for defining and running **repeatable AI workflows**.

+Pipelex is an **open-source Python framework** for defining and running **repeatable AI methods**.

Here's what we've learned: LLMs are powerful, but asking them to do everything in one prompt is like asking a brilliant colleague to solve ten problems while juggling. The more complexity you pack into a single prompt, the more reliability drops. You've seen it: the perfect prompt that works 90% of the time until it doesn't.

The solution is straightforward: break complex tasks into focused steps. But without proper tooling, you end up with spaghetti code and prompts scattered across your codebase.

-Pipelex introduces **knowledge pipelines**: a way to capture these workflow steps as **composable pipes**. Each pipe follows one rule: **knowledge in, knowledge out**. Unlike rigid templates, each pipe uses AI's full intelligence to handle variation while guaranteeing consistent output structure. You get **deterministic structure with adaptive intelligence**, the reliability of software with the flexibility of AI.

+Pipelex introduces **knowledge pipelines**: a way to capture these method steps as **composable pipes**. Each pipe follows one rule: **knowledge in, knowledge out**. Unlike rigid templates, each pipe uses AI's full intelligence to handle variation while guaranteeing consistent output structure. You get **deterministic structure with adaptive intelligence**, the reliability of software with the flexibility of AI.

## Working with Knowledge and Using Concepts to Make Sense

diff --git a/docs/home/3-understand-pipelex/viewpoint.md b/docs/home/3-understand-pipelex/viewpoint.md

index 1690ef0fe..78aed111d 100644

--- a/docs/home/3-understand-pipelex/viewpoint.md

+++ b/docs/home/3-understand-pipelex/viewpoint.md

@@ -5,13 +5,13 @@ Web version: https://knowhowgraph.com/

---

# Viewpoint: The Know-How Graph

-Declarative, Repeatable AI Workflows as Shared Infrastructure

+Declarative, Repeatable AI Methods as Shared Infrastructure

**TL;DR**

Agents are great at solving new problems, terrible at doing the same thing twice.

-We argue that repeatable AI workflows should complement agents: written in a declarative language that both humans and agents can understand, reuse, and compose. These workflows become tools that agents can build, invoke, and share to turn repeatable cognitive work into reliable infrastructure.

+We argue that repeatable AI methods should complement agents: written in a declarative language that both humans and agents can understand, reuse, and compose. These methods become tools that agents can build, invoke, and share to turn repeatable cognitive work into reliable infrastructure.

At scale, this forms a **Know-How Graph:** a network of reusable methods that become shared infrastructure.

@@ -25,13 +25,13 @@ This is **the repeatability paradox**. Agents excel at understanding requirement

### We Need a Standard for Reusable Methods

-The solution is to capture these methods as AI workflows so agents can reuse them.

+The solution is to capture this know-how as AI methods so agents can reuse them.

-By "AI workflows" we mean the actual intellectual work that wasn't automatable before LLMs: extracting structured data from unstructured documents, applying complex analyses and business rules, generating reports with reasoning. **This isn’t about API plumbing or app connectors, it’s about the actual intellectual work.**

+By "AI methods" we mean the actual intellectual work that wasn't automatable before LLMs: extracting structured data from unstructured documents, applying complex analyses and business rules, generating reports with reasoning. **This isn’t about API plumbing or app connectors, it’s about the actual intellectual work.**

-Yet look at what's happening today: teams everywhere are hand-crafting the same workflows from scratch. To extract data points from contracts and RFPs, to process expense reports, to classify documents, to screen resumes: identical problems solved in isolation, burning engineering hours.

+Yet look at what's happening today: teams everywhere are hand-crafting the same methods from scratch. To extract data points from contracts and RFPs, to process expense reports, to classify documents, to screen resumes: identical problems solved in isolation, burning engineering hours.

-## AI workflows must be formalized

+## AI methods must be formalized

OpenAPI and MCP enable interoperability for software and agents. The remaining problem is formalizing the **methods that assemble the cognitive steps themselves:** extraction, analysis, synthesis, creativity, and decision-making, the part where understanding matters. These formalized methods must be:

@@ -39,29 +39,29 @@ OpenAPI and MCP enable interoperability for software and agents. The remaining p

- **Efficient:** use the right AI model for each step, large or small.

- **Transparent:** no black boxes. Domain experts can audit the logic, spot issues, suggest improvements.

-The workflow becomes a shared artifact that humans and AI collaborate on, optimize together, and trust to run at scale.

+The method becomes a shared artifact that humans and AI collaborate on, optimize together, and trust to run at scale.

### Current solutions are inadequate

-Engineers building AI workflows today are stuck with bad options.

+Engineers building AI methods today are stuck with bad options.

-Code frameworks like LangChain require **maintaining custom software for every workflow,** with business logic buried in implementation details and technical debt accumulating with each new use case.

+Code frameworks like LangChain require **maintaining custom software for every method,** with business logic buried in implementation details and technical debt accumulating with each new use case.

-Visual builders like Zapier, Make, or n8n excel at what they're designed for: connecting APIs and automating data flow between services. **But automation platforms are not cognitive workflow systems.** AI was bolted on as a feature after the fact. They weren't built for intellectual work. When you need actual understanding and multi-step reasoning, these tools quickly become unwieldy.

+Visual builders like Zapier, Make, or n8n excel at what they're designed for: connecting APIs and automating data flow between services. **But automation platforms are not cognitive method systems.** AI was bolted on as a feature after the fact. They weren't built for intellectual work. When you need actual understanding and multi-step reasoning, these tools quickly become unwieldy.

-None of these solutions speak the language of the domain expert. None of them were built for agents to understand, modify, or generate workflows from requirements. They express technical plumbing, not business logic.

+None of these solutions speak the language of the domain expert. None of them were built for agents to understand, modify, or generate methods from requirements. They express technical plumbing, not business logic.

At the opposite, agent SDKs and multi-agent frameworks give you flexibility but sacrifice the repeatability you need for production. **You want agents for exploration and problem-solving, but when you've found a solution that works, you need to lock it down.**

-> We need a universal workflow language that expresses business logic, not technical plumbing.

-This workflow language must run across platforms, models, and agent frameworks, where the method outlives any vendor or model version.

+> We need a universal method language that expresses business logic, not technical plumbing.

+This method language must run across platforms, models, and agent frameworks, so that the method outlives any vendor or model version.

>

## We Need a Declarative Language

-AI workflows should be first-class citizens of our technical infrastructure: not buried in code or trapped in platforms, but expressed in a language built for the job. The method should be an artifact you can version, diff, test, and optimize.

+AI methods should be first-class citizens of our technical infrastructure: not buried in code or trapped in platforms, but expressed in a language built for the job. The method should be an artifact you can version, diff, test, and optimize.

-**We need a declarative language that states what you want, not how to compute it.** As SQL separated intent from implementation for data, we need the same for AI workflows — so we can build a Know-How Graph: a reusable graph of methods that agents and humans both understand.

+**We need a declarative language that states what you want, not how to compute it.** As SQL separated intent from implementation for data, we need the same for AI methods — so we can build a Know-How Graph: a reusable graph of methods that agents and humans both understand.

### The language shouldn’t need documentation: it is the documentation

@@ -71,22 +71,22 @@ Traditional programs are instructions a machine blindly executes. The machine do

### Language fosters collaboration: users and agents building together

-The language must be readable by everyone who matters: domain experts who know the business logic, engineers who optimize and deploy it, and crucially, AI agents that can build and refine workflows autonomously.

+The language must be readable by everyone who matters: domain experts who know the business logic, engineers who optimize and deploy it, and crucially, AI agents that can build and refine methods autonomously.

-Imagine agents that transform natural language requirements into working workflows. They design each transformation step (or reuse existing ones), test against real or synthetic data, incorporate expert feedback, and iterate to improve quality while reducing costs. Once a workflow is built, agents can invoke it as a reliable tool whenever they need structured, predictable outputs.

+Imagine agents that transform natural language requirements into working methods. They design each transformation step (or reuse existing ones), test against real or synthetic data, incorporate expert feedback, and iterate to improve quality while reducing costs. Once a method is built, agents can invoke it as a reliable tool whenever they need structured, predictable outputs.

-> This is how agents finally remember know-how: by encoding methods into reusable workflows they can build, share, and execute on demand.

+> This is how agents finally remember know-how: by encoding it into reusable methods they can build, share, and execute on demand.

>

## The Know-How Graph: a Network of Composable Methods

-**Breaking complex work into smaller tasks is a recursive, core pattern.** Each workflow should stand on the shoulders of others, composing like LEGO bricks to build increasingly sophisticated cognitive systems.

+**Breaking complex work into smaller tasks is a recursive, core pattern.** Each method should stand on the shoulders of others, composing like LEGO bricks to build increasingly sophisticated cognitive systems.

What emerges is a **Know-How Graph**: not just static knowledge, but executable methods that connect and build upon one another. **Unlike a knowledge graph mapping facts, this maps procedures: the actual know-how of getting cognitive work done.**

**Example:**

-A recruitment workflow doesn't start from scratch. It composes existing workflows:

+A recruitment method doesn't start from scratch. It composes existing methods:

- ExtractCandidateProfile (experience, education, skills…)

- ExtractJobOffer (skills, years of experience…).

@@ -95,23 +95,23 @@ These feed into your custom ScoreCard logic to produce a MatchAnalysis, which tr

Each component can be assigned to different team members and validated independently by the relevant stakeholders.

-> Think of a workflow as a proven route through the work, and the Know-How Graph as the network of all such routes.

+> Think of a method as a proven route through the work, and the Know-How Graph as the network of all such routes.

>

### Know-how is as shareable as knowledge

-Think about the explosion of prompt sharing since 2023. All those people trading their best ChatGPT prompts on Twitter, GitHub, Reddit, LinkedIn. Now imagine that same viral knowledge sharing, but with complete, tested, composable workflows instead of fragile prompts.

+Think about the explosion of prompt sharing since 2023. All those people trading their best ChatGPT prompts on Twitter, GitHub, Reddit, LinkedIn. Now imagine that same viral knowledge sharing, but with complete, tested, composable methods instead of fragile prompts.

-We’ve seen this movie: software package managers, SQL views, Docker, dbt packages. Composable standards create ecosystems where everyone’s work makes everyone else more productive. Generic workflows for common tasks will spread rapidly, while companies keep their differentiating workflows as competitive advantage. That's how we stop reinventing the wheel while preserving secret sauce.

+We’ve seen this movie: software package managers, SQL views, Docker, dbt packages. Composable standards create ecosystems where everyone’s work makes everyone else more productive. Generic methods for common tasks will spread rapidly, while companies keep their differentiating methods as a competitive advantage. That's how we stop reinventing the wheel while each company preserves its secret sauce.

-The same principle applies to AI workflows through the Know-How Graph: durable infrastructure that compounds value over time.

+The same principle applies to AI methods through the Know-How Graph: durable infrastructure that compounds value over time.

-> The Know-How Graph will thrive on the open web because workflows are just files: easy to publish, fork, improve, and compose.

+> The Know-How Graph will thrive on the open web because methods are just files: easy to publish, fork, improve, and compose.

>

### What this unlocks

-- Faster time to production (reuse existing workflows + AI writes them for you)

+- Faster time to production (reuse existing methods + AI writes them for you)

- Lower run costs (optimize price / performance for each task)

- Better collaboration between tech and business

- Better auditability / compliance

@@ -121,26 +121,26 @@ The same principle applies to AI workflows through the Know-How Graph: durable i

[**Pipelex**](https://github.com/Pipelex/pipelex) is our take on this language: open-source (MIT), designed for the Know-How Graph.

-Each workflow is built from pipes: modular transformations that guarantee their output structure while applying intelligence to the content. A pipe is a knowledge transformer with a simple contract: knowledge in → knowledge out., each defined conceptually and with explicit structure and validation. The method is readable and editable by humans and agents.

+Each method is built from pipes: modular transformations that guarantee their output structure while applying intelligence to the content. A pipe is a knowledge transformer with a simple contract: knowledge in → knowledge out, each side defined conceptually, with explicit structure and validation. The method is readable and editable by humans and agents.

-Our Pipelex workflow builder is itself a Pipelex workflow. The tooling builds itself.

+Our Pipelex method builder is itself a Pipelex method. The tooling builds itself.

## Why This Can Become a Standard

-Pipelex is MIT-licensed and designed for portability. Workflows are files, based on TOML syntax (itself well standardized), and the outputs are validated JSON.

+Pipelex is MIT-licensed and designed for portability. Methods are files, based on TOML syntax (itself well standardized), and the outputs are validated JSON.

-Early adopters are contributing to the [cookbook repo](https://github.com/Pipelex/pipelex-cookbook/tree/feature/Chicago), building integrations, and running workflows in production. The pieces for ecosystem growth are in place: declarative spec, reference implementation, composable architecture.

+Early adopters are contributing to the [cookbook repo](https://github.com/Pipelex/pipelex-cookbook/tree/feature/Chicago), building integrations, and running methods in production. The pieces for ecosystem growth are in place: declarative spec, reference implementation, composable architecture.

Building a standard is hard. We're at v0.1.0, with versioning and backward compatibility coming next. The spec will evolve with your feedback.

## Join Us

-The most valuable standards are boring infrastructure everyone relies on: SQL, HTTP, JSON. Pipelex aims to be that for AI workflows.

+The most valuable standards are boring infrastructure everyone relies on: SQL, HTTP, JSON. Pipelex aims to be that for AI methods.

-Start with one workflow: extract invoice data, process applications, analyze reports… Share what works. Build on what others share.

+Start with one method: extract invoice data, process applications, analyze reports… Share what works. Build on what others share.

-**The future of AI needs both:** smarter agents that explore and adapt, AND reliable workflows that execute proven methods at scale. One workflow at a time, let's build the cognitive infrastructure every organization needs.

+**The future of AI needs both:** smarter agents that explore and adapt, AND reliable methods that execute proven know-how at scale. One method at a time, let's build the cognitive infrastructure every organization needs.

---

diff --git a/docs/home/4-cookbook-examples/extract-dpe.md b/docs/home/4-cookbook-examples/extract-dpe.md

index 7df181d3e..edc91c142 100644

--- a/docs/home/4-cookbook-examples/extract-dpe.md

+++ b/docs/home/4-cookbook-examples/extract-dpe.md

@@ -52,7 +52,7 @@ class Dpe(StructuredContent):

yearly_energy_costs: Optional[float] = None

```

-## The Pipeline Definition: `extract_dpe.plx`

+## The Pipeline Definition: `extract_dpe.mthds`

The pipeline uses a `PipeLLM` with a very specific prompt to extract the information from the document. The combination of the image and the OCR text allows the LLM to accurately capture all the details.

diff --git a/docs/home/4-cookbook-examples/extract-gantt.md b/docs/home/4-cookbook-examples/extract-gantt.md

index 156e8eeee..7ea9043f6 100644

--- a/docs/home/4-cookbook-examples/extract-gantt.md

+++ b/docs/home/4-cookbook-examples/extract-gantt.md

@@ -51,9 +51,9 @@ class GanttChart(StructuredContent):

milestones: Optional[List[Milestone]]

```

-## The Pipeline Definition: `gantt.plx`

+## The Pipeline Definition: `gantt.mthds`

-The `extract_gantt_by_steps` pipeline is a sequence of smaller, focused pipes. This is a great example of building a complex workflow from simple, reusable components.

+The `extract_gantt_by_steps` pipeline is a sequence of smaller, focused pipes. This is a great example of building a complex method from simple, reusable components.

```toml

[pipe.extract_gantt_by_steps]

@@ -92,7 +92,7 @@ Here is the name of the task you have to extract the dates for:

@gantt_task_name

"""

```

-This demonstrates the "divide and conquer" approach that Pipelex encourages. By breaking down a complex problem into smaller steps, each step can be handled by a specialized pipe, making the overall workflow more robust and easier to debug.

+This demonstrates the "divide and conquer" approach that Pipelex encourages. By breaking down a complex problem into smaller steps, each step can be handled by a specialized pipe, making the overall method more robust and easier to debug.

## Flowchart

diff --git a/docs/home/4-cookbook-examples/extract-generic.md b/docs/home/4-cookbook-examples/extract-generic.md

index e0cf87b1e..519beacca 100644

--- a/docs/home/4-cookbook-examples/extract-generic.md

+++ b/docs/home/4-cookbook-examples/extract-generic.md

@@ -24,7 +24,7 @@ async def extract_generic(pdf_url: str) -> TextAndImagesContent:

return markdown_and_images

```

-The `merge_markdown_and_images` function is a great example of how you can add your own Python code to a Pipelex workflow to perform custom processing.

+The `merge_markdown_and_images` function is a great example of how you can add your own Python code to a Pipelex method to perform custom processing.

```python

def merge_markdown_and_images(working_memory: WorkingMemory) -> TextAndImagesContent:

diff --git a/docs/home/4-cookbook-examples/extract-proof-of-purchase.md b/docs/home/4-cookbook-examples/extract-proof-of-purchase.md

index 4faed4ad7..48736f345 100644

--- a/docs/home/4-cookbook-examples/extract-proof-of-purchase.md

+++ b/docs/home/4-cookbook-examples/extract-proof-of-purchase.md

@@ -48,7 +48,7 @@ class ProofOfPurchase(StructuredContent):

```

This demonstrates how you can create nested data structures to accurately model your data.

-## The Pipeline Definition: `extract_proof_of_purchase.plx`

+## The Pipeline Definition: `extract_proof_of_purchase.mthds`

The pipeline uses a powerful `PipeLLM` to extract the structured data from the document. The prompt is carefully engineered to guide the LLM.

diff --git a/docs/home/4-cookbook-examples/extract-table.md b/docs/home/4-cookbook-examples/extract-table.md

index 2f963daec..97e9a57a1 100644

--- a/docs/home/4-cookbook-examples/extract-table.md

+++ b/docs/home/4-cookbook-examples/extract-table.md

@@ -56,7 +56,7 @@ class HtmlTable(StructuredContent):

return self

```

-## The Pipeline Definition: `table.plx`

+## The Pipeline Definition: `table.mthds`

The pipeline uses a two-step "extract and review" pattern. The first pipe does the initial extraction, and the second pipe reviews the generated HTML against the original image to correct any errors. This is a powerful pattern for increasing the reliability of LLM outputs.

@@ -88,4 +88,4 @@ Rewrite the entire html table with your potential corrections.

Make sure you do not forget any text.

"""

```

-This self-correction pattern is a key technique for building robust and reliable AI workflows with Pipelex.

\ No newline at end of file

+This self-correction pattern is a key technique for building robust and reliable AI methods with Pipelex.

\ No newline at end of file

diff --git a/docs/home/4-cookbook-examples/hello-world.md b/docs/home/4-cookbook-examples/hello-world.md

index b81e1c4aa..6f18a0955 100644

--- a/docs/home/4-cookbook-examples/hello-world.md

+++ b/docs/home/4-cookbook-examples/hello-world.md

@@ -20,7 +20,7 @@ import asyncio

from pipelex import pretty_print

from pipelex.pipelex import Pipelex

-from pipelex.pipeline.execute import execute_pipeline

+from pipelex.pipeline.runner import PipelexRunner

async def hello_world():

@@ -28,9 +28,11 @@ async def hello_world():

This function demonstrates the use of a super simple Pipelex pipeline to generate text.

"""

# Run the pipe

- pipe_output = await execute_pipeline(

+ runner = PipelexRunner()

+ response = await runner.execute_pipeline(

pipe_code="hello_world",

)

+ pipe_output = response.pipe_output

# Print the output

pretty_print(pipe_output, title="Your first Pipelex output")

@@ -44,7 +46,7 @@ asyncio.run(hello_world())

This example shows the minimal setup needed to run a Pipelex pipeline: initialize Pipelex, execute a pipeline by its code name, and pretty-print the results.

-## The Pipeline Definition: `hello_world.plx`

+## The Pipeline Definition: `hello_world.mthds`

The pipeline definition is extremely simple - it's a single LLM call that generates a haiku:

diff --git a/docs/home/4-cookbook-examples/index.md b/docs/home/4-cookbook-examples/index.md

index b17436d70..79704d4d8 100644

--- a/docs/home/4-cookbook-examples/index.md

+++ b/docs/home/4-cookbook-examples/index.md

@@ -5,7 +5,7 @@ Welcome to the Pipelex Cookbook!

[](https://github.com/Pipelex/pipelex-cookbook/tree/feature/Chicago)

-This is your go-to resource for practical examples and ready-to-use recipes to build powerful and reliable AI workflows with Pipelex. Whether you're a beginner looking to get started or an experienced user searching for advanced patterns, you'll find something useful here.

+This is your go-to resource for practical examples and ready-to-use recipes to build powerful and reliable AI methods with Pipelex. Whether you're a beginner looking to get started or an experienced user searching for advanced patterns, you'll find something useful here.

## Philosophy

@@ -34,7 +34,7 @@ Here are some of the examples you can find in the cookbook, organized by categor

* [**Simple OCR**](./simple-ocr.md): A basic OCR pipeline to extract text from a PDF.

* [**Generic Document Extraction**](./extract-generic.md): A powerful pipeline to extract text and images from complex documents.

-* [**Invoice Extractor**](./invoice-extractor.md): A complete workflow for processing invoices, including reporting.

+* [**Invoice Extractor**](./invoice-extractor.md): A complete method for processing invoices, including reporting.

* [**Proof of Purchase Extraction**](./extract-proof-of-purchase.md): A targeted pipeline for extracting data from receipts.

### Graphical Extraction

diff --git a/docs/home/4-cookbook-examples/invoice-extractor.md b/docs/home/4-cookbook-examples/invoice-extractor.md

index 8dc82644c..186266061 100644

--- a/docs/home/4-cookbook-examples/invoice-extractor.md

+++ b/docs/home/4-cookbook-examples/invoice-extractor.md

@@ -9,7 +9,7 @@ This example provides a comprehensive pipeline for processing invoices. It takes

## The Pipeline Explained

-The `process_invoice` pipeline is a complete workflow for invoice processing.

+The `process_invoice` pipeline is a complete method for invoice processing.

```python

async def process_invoice(pdf_url: str) -> ListContent[Invoice]:

@@ -51,9 +51,9 @@ class Invoice(StructuredContent):

# ... other fields

```

-## The Pipeline Definition: `invoice.plx`

+## The Pipeline Definition: `invoice.mthds`

-The entire workflow is defined in a PLX file. This declarative approach makes the pipeline easy to understand and modify. Here's a snippet from `invoice.plx`:

+The entire method is defined in a `.mthds` file. This declarative approach makes the pipeline easy to understand and modify. Here's a snippet from `invoice.mthds`:

```toml

[pipe.process_invoice]

@@ -89,7 +89,7 @@ The category of this invoice is: $invoice_details.category.

"""

```

-This shows how a complex workflow, including text extraction with `PipeExtract` and LLM calls, can be defined in a simple, readable format. The `model = "$engineering-structured"` line is particularly powerful, as it tells the LLM to structure its output according to the `Invoice` model.

+This shows how a complex method, including text extraction with `PipeExtract` and LLM calls, can be defined in a simple, readable format. The `model = "$engineering-structured"` line is particularly powerful, as it tells the LLM to structure its output according to the `Invoice` model.

## The Pipeline Flowchart

diff --git a/docs/home/4-cookbook-examples/simple-ocr.md b/docs/home/4-cookbook-examples/simple-ocr.md

index bccfa51cd..58f4633a7 100644

--- a/docs/home/4-cookbook-examples/simple-ocr.md

+++ b/docs/home/4-cookbook-examples/simple-ocr.md

@@ -2,7 +2,7 @@

This example demonstrates a basic OCR (Optical Character Recognition) pipeline. It takes a PDF file as input, extracts the text from each page, and saves the content.

-This is a fundamental building block for many document processing workflows.

+This is a fundamental building block for many document processing methods.

## Get the code

diff --git a/docs/home/4-cookbook-examples/write-tweet.md b/docs/home/4-cookbook-examples/write-tweet.md

index a3454a708..1825cd2c5 100644

--- a/docs/home/4-cookbook-examples/write-tweet.md

+++ b/docs/home/4-cookbook-examples/write-tweet.md

@@ -36,7 +36,7 @@ This example shows how to use multiple inputs to guide the generation process an

## The Data Structure: `OptimizedTweet` Model

-The data model for this pipeline is very simple, as the final output is just a piece of text. However, the pipeline uses several concepts internally to manage the workflow, such as `DraftTweet`, `TweetAnalysis`, and `WritingStyle`.

+The data model for this pipeline is very simple, as the final output is just a piece of text. However, the pipeline uses several concepts internally to structure its steps, such as `DraftTweet`, `TweetAnalysis`, and `WritingStyle`.

```python

class OptimizedTweet(TextContent):

@@ -44,7 +44,7 @@ class OptimizedTweet(TextContent):

pass

```

-## The Pipeline Definition: `tech_tweet.plx`

+## The Pipeline Definition: `tech_tweet.mthds`

This pipeline uses a two-step "analyze and optimize" sequence. The first pipe analyzes the draft tweet for common pitfalls, and the second pipe rewrites the tweet based on the analysis and a provided writing style. This is a powerful pattern for refining generated content.

@@ -82,7 +82,7 @@ Evaluate the tweet for these key issues:

@draft_tweet

"""

```

-This "analyze and refine" pattern is a great way to build more reliable and sophisticated text generation workflows. The first step provides a structured critique, and the second step uses that critique to improve the final output.

+This "analyze and refine" pattern is a great way to build more reliable and sophisticated text generation methods. The first step provides a structured critique, and the second step uses that critique to improve the final output.

Here is the flowchart generated during this run:

diff --git a/docs/home/5-setup/configure-ai-providers.md b/docs/home/5-setup/configure-ai-providers.md

index 881648662..8fa346266 100644

--- a/docs/home/5-setup/configure-ai-providers.md

+++ b/docs/home/5-setup/configure-ai-providers.md

@@ -173,10 +173,10 @@ Learn more in our [Inference Backend Configuration](../../home/7-configuration/c

Now that you have your backend configured:

1. **Organize your project**: [Project Organization](./project-organization.md)

-2. **Learn the concepts**: [Writing Workflows Tutorial](../../home/2-get-started/pipe-builder.md)

+2. **Learn the concepts**: [Writing Methods Tutorial](../../home/2-get-started/pipe-builder.md)

3. **Explore examples**: [Cookbook Repository](https://github.com/Pipelex/pipelex-cookbook/tree/feature/Chicago)

-4. **Deep dive**: [Build Reliable AI Workflows](../../home/6-build-reliable-ai-workflows/kick-off-a-pipelex-workflow-project.md)

+4. **Deep dive**: [Build Reliable AI Methods](../../home/6-build-reliable-ai-workflows/kick-off-a-methods-project.md)

!!! tip "Advanced Configuration"

For detailed backend configuration options, see [Inference Backend Configuration](../../home/7-configuration/config-technical/inference-backend-config.md).

diff --git a/docs/home/5-setup/index.md b/docs/home/5-setup/index.md

index 61a3cc0b7..2051dd126 100644

--- a/docs/home/5-setup/index.md

+++ b/docs/home/5-setup/index.md

@@ -12,7 +12,7 @@ If you already have a project running and want to tune behavior, jump to [Config

## Quick guide

- **Need to run pipelines with LLMs?** Start with [Configure AI Providers](./configure-ai-providers.md).

-- **Need a recommended repo layout for `.plx` and Python code?** See [Project Organization](./project-organization.md).

+- **Need a recommended repo layout for `.mthds` and Python code?** See [Project Organization](./project-organization.md).

- **Need to understand telemetry and privacy trade-offs?** See [Telemetry](./telemetry.md).

- **Ready to tune the knobs?** Go to [Configuration Overview](../7-configuration/index.md).

diff --git a/docs/home/5-setup/project-organization.md b/docs/home/5-setup/project-organization.md

index d62e3bd72..12ec2dd90 100644

--- a/docs/home/5-setup/project-organization.md

+++ b/docs/home/5-setup/project-organization.md

@@ -2,20 +2,21 @@

## Overview

-Pipelex automatically discovers `.plx` pipeline files anywhere in your project (excluding `.venv`, `.git`, `node_modules`, etc.).

+Pipelex automatically discovers `.mthds` pipeline files anywhere in your project (excluding `.venv`, `.git`, `node_modules`, etc.).

## Recommended: Keep pipelines with related code

```bash

your_project/

-├── my_project/ # Your Python package

+├── METHODS.toml # Package manifest (optional)

+├── my_project/ # Your Python package

│ ├── finance/

│ │ ├── services.py

-│ │ ├── invoices.plx # Pipeline with finance code

+│ │ ├── invoices.mthds # Pipeline with finance code

│ │ └── invoices_struct.py # Structure classes

│ └── legal/

│ ├── services.py

-│ ├── contracts.plx # Pipeline with legal code

+│ ├── contracts.mthds # Pipeline with legal code

│ └── contracts_struct.py

├── .pipelex/ # Config at repo root

│ └── pipelex.toml

@@ -23,19 +24,21 @@ your_project/

└── requirements.txt

```

+- **Package manifest**: `METHODS.toml` at your project root declares package identity and pipe visibility. See [Packages](../6-build-reliable-ai-workflows/packages.md) for details.

+

## Alternative: Centralize pipelines

```bash

your_project/

├── pipelines/

-│ ├── invoices.plx

-│ ├── contracts.plx

+│ ├── invoices.mthds

+│ ├── contracts.mthds

│ └── structures.py

└── .pipelex/

└── pipelex.toml

```

-Learn more in our [Project Structure documentation](../../home/6-build-reliable-ai-workflows/kick-off-a-pipelex-workflow-project.md).

+Learn more in our [Project Structure documentation](../../home/6-build-reliable-ai-workflows/kick-off-a-methods-project.md).

---

@@ -51,8 +54,8 @@ Learn more in our [Project Structure documentation](../../home/6-build-reliable-

Now that you understand project organization:

1. **Start building**: [Get Started](../../home/2-get-started/pipe-builder.md)

-2. **Learn the concepts**: [Writing Workflows Tutorial](../../home/2-get-started/pipe-builder.md)

+2. **Learn the concepts**: [Writing Methods Tutorial](../../home/2-get-started/pipe-builder.md)

3. **Explore examples**: [Cookbook Repository](https://github.com/Pipelex/pipelex-cookbook/tree/feature/Chicago)

-4. **Deep dive**: [Build Reliable AI Workflows](../../home/6-build-reliable-ai-workflows/kick-off-a-pipelex-workflow-project.md)

+4. **Deep dive**: [Build Reliable AI Methods](../../home/6-build-reliable-ai-workflows/kick-off-a-methods-project.md)
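The discovery rule described in `project-organization.md` (find every `.mthds` file anywhere in the project, skipping directories such as `.venv`, `.git`, and `node_modules`) can be approximated with a plain directory walk. This is a minimal illustrative sketch, not Pipelex's actual loader: `find_mthds_files` and `EXCLUDED_DIRS` are hypothetical names, and the real exclusion list ("etc." in the docs) is longer than the three directories named there.

```python
from __future__ import annotations

import os
from pathlib import Path

# Hypothetical exclusion set: only the three directories the docs name.
# Pipelex's real list is longer and is not reproduced here.
EXCLUDED_DIRS = {".venv", ".git", "node_modules"}


def find_mthds_files(root: str | os.PathLike[str]) -> list[Path]:
    """Return every .mthds file under root, skipping excluded directories."""
    found: list[Path] = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune excluded directories in place so os.walk never descends into them.
        dirnames[:] = [d for d in dirnames if d not in EXCLUDED_DIRS]
        for name in filenames:
            if name.endswith(".mthds"):
                found.append(Path(dirpath) / name)
    # Sort for a deterministic order across platforms.
    return sorted(found)
```

Pruning `dirnames` in place is the idiomatic way to stop `os.walk` from entering a subtree, which keeps the walk cheap even when a large `node_modules` folder sits next to your methods.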

diff --git a/docs/home/6-build-reliable-ai-workflows/concepts/define_your_concepts.md b/docs/home/6-build-reliable-ai-workflows/concepts/define_your_concepts.md

index f2f4270c9..c1fc9447f 100644

--- a/docs/home/6-build-reliable-ai-workflows/concepts/define_your_concepts.md

+++ b/docs/home/6-build-reliable-ai-workflows/concepts/define_your_concepts.md

@@ -1,6 +1,6 @@

# Defining Your Concepts

-Concepts are the foundation of reliable AI workflows. They define what flows through your pipes—not just as data types, but as meaningful pieces of knowledge with clear boundaries and validation rules.

+Concepts are the foundation of reliable AI methods. They define what flows through your pipes—not just as data types, but as meaningful pieces of knowledge with clear boundaries and validation rules.

## Writing Concept Definitions

@@ -72,7 +72,7 @@ Those concepts will be Text-based by default. If you want to use structured outp

Group concepts that naturally belong together in the same domain. A domain acts as a namespace for a set of related concepts and pipes, helping you organize and reuse your pipeline components. You can learn more about them in [Understanding Domains](../domain.md).

```toml

-# finance.plx

+# finance.mthds

domain = "finance"

description = "Financial document processing"

@@ -86,7 +86,7 @@ LineItem = "An individual item or service listed in a financial document"

## Get Started with Inline Structures

-To add structure to your concepts, the **recommended approach** is using **inline structures** directly in your `.plx` files. Inline structures support all field types including nested concepts:

+To add structure to your concepts, the **recommended approach** is using **inline structures** directly in your `.mthds` files. Inline structures support all field types including nested concepts:

```toml

[concept.Customer]

diff --git a/docs/home/6-build-reliable-ai-workflows/concepts/inline-structures.md b/docs/home/6-build-reliable-ai-workflows/concepts/inline-structures.md

index 06f0025a1..7d82053f8 100644

--- a/docs/home/6-build-reliable-ai-workflows/concepts/inline-structures.md

+++ b/docs/home/6-build-reliable-ai-workflows/concepts/inline-structures.md

@@ -1,6 +1,6 @@

# Inline Structure Definition

-Define structured concepts directly in your `.plx` files using pipelex syntax. This is the **recommended approach** for most use cases, offering rapid development without Python boilerplate.

+Define structured concepts directly in your `.mthds` files using Pipelex syntax. This is the **recommended approach** for most use cases, offering rapid development without Python boilerplate.

For an introduction to concepts themselves, see [Define Your Concepts](define_your_concepts.md). For advanced features requiring Python classes, see [Python StructuredContent Classes](python-classes.md).

@@ -246,11 +246,11 @@ The `pipelex build structures` command generates Python classes from your inline

### Usage

```bash

-# Generate from a directory of .plx files

+# Generate from a directory of .mthds files

pipelex build structures ./my_pipelines/

-# Generate from a specific .plx file

-pipelex build structures ./my_pipeline/bundle.plx

+# Generate from a specific .mthds file

+pipelex build structures ./my_pipeline/bundle.mthds

# Specify output directory

pipelex build structures ./my_pipelines/ -o ./generated/

@@ -306,5 +306,5 @@ See [Python StructuredContent Classes](python-classes.md) for advanced features.

- [Define Your Concepts](define_your_concepts.md) - Learn about concept semantics and naming

- [Python StructuredContent Classes](python-classes.md) - Advanced features with Python

-- [Writing Workflows Tutorial](../../2-get-started/pipe-builder.md) - Get started with structured outputs

+- [Writing Methods Tutorial](../../2-get-started/pipe-builder.md) - Get started with structured outputs

diff --git a/docs/home/6-build-reliable-ai-workflows/concepts/native-concepts.md b/docs/home/6-build-reliable-ai-workflows/concepts/native-concepts.md

index 98515c181..9ba73cf3b 100644

--- a/docs/home/6-build-reliable-ai-workflows/concepts/native-concepts.md

+++ b/docs/home/6-build-reliable-ai-workflows/concepts/native-concepts.md

@@ -1,12 +1,12 @@

# Native Concepts

-Pipelex includes several built-in native concepts that cover common data types in AI workflows. These concepts come with predefined structures and are automatically available in all pipelines—no setup required.

+Pipelex includes several built-in native concepts that cover common data types in AI methods. These concepts come with predefined structures and are automatically available in all pipelines—no setup required.

For an introduction to concepts, see [Define Your Concepts](define_your_concepts.md).

## What Are Native Concepts?

-Native concepts are ready-to-use building blocks for AI workflows. They represent common data types you'll frequently work with: text, images, documents, numbers, and combinations thereof.

+Native concepts are ready-to-use building blocks for AI methods. They represent common data types you'll frequently work with: text, images, documents, numbers, and combinations thereof.

**Key characteristics:**

@@ -133,7 +133,7 @@ class DynamicContent(StuffContent):

pass

```

-**Use for:** Workflows where the content structure isn't known in advance.

+**Use for:** Methods where the content structure isn't known in advance.

### JSONContent

@@ -189,7 +189,7 @@ output = "Page"

This extracts each page with both its text/images and a visual representation.

-### In Complex Workflows

+### In Complex Methods

```toml

[pipe.create_report]

@@ -223,7 +223,7 @@ Refine native concepts when:

- ✅ You need semantic specificity (e.g., `Invoice` vs `Document`)

- ✅ You want to add custom structure on top of the base structure

-- ✅ Building domain-specific workflows

+- ✅ Building domain-specific methods

- ✅ Need type safety for specific document types

## Common Patterns

@@ -286,5 +286,5 @@ Analyze this image: $image"

- [Define Your Concepts](define_your_concepts.md) - Learn about concept semantics

- [Inline Structures](inline-structures.md) - Add structure to refined concepts

- [Python StructuredContent Classes](python-classes.md) - Advanced customization

-- [Writing Workflows Tutorial](../../2-get-started/pipe-builder.md) - Use native concepts in pipelines

+- [Writing Methods Tutorial](../../2-get-started/pipe-builder.md) - Use native concepts in pipelines

diff --git a/docs/home/6-build-reliable-ai-workflows/concepts/python-classes.md b/docs/home/6-build-reliable-ai-workflows/concepts/python-classes.md

index c2d46a837..dc19439c7 100644

--- a/docs/home/6-build-reliable-ai-workflows/concepts/python-classes.md

+++ b/docs/home/6-build-reliable-ai-workflows/concepts/python-classes.md

@@ -122,7 +122,7 @@ age = { type = "integer", description = "User's age", required = false }

**Step 2: Generate the base class**

```bash

-pipelex build structures ./my_pipeline.plx -o ./structures/

+pipelex build structures ./my_pipeline.mthds -o ./structures/

```

**Step 3: Add custom validation**

@@ -151,7 +151,7 @@ class UserProfile(StructuredContent):

        return v

```

-**Step 4: Update your .plx file**

+**Step 4: Update your .mthds file**

```toml

[concept]

@@ -184,7 +184,7 @@ in_stock = { type = "boolean", description = "Stock availability", default_value

**2. Generate the Python class:**

```bash

-pipelex build structures ./ecommerce.plx -o ./structures/

+pipelex build structures ./ecommerce.mthds -o ./structures/

```

**3. Add your custom logic** to the generated file:

@@ -217,7 +217,7 @@ class Product(StructuredContent):

        return f"${self.price:.2f}"

```

-**4. Update your `.plx` file:**

+**4. Update your `.mthds` file:**

```toml

domain = "ecommerce"

@@ -255,5 +255,5 @@ Product = "A product in the catalog"

- [Inline Structures](inline-structures.md) - Fast prototyping with TOML

- [Define Your Concepts](define_your_concepts.md) - Learn about concept semantics and naming

-- [Writing Workflows Tutorial](../../2-get-started/pipe-builder.md) - Get started with structured outputs

+- [Writing Methods Tutorial](../../2-get-started/pipe-builder.md) - Get started with structured outputs

diff --git a/docs/home/6-build-reliable-ai-workflows/concepts/refining-concepts.md b/docs/home/6-build-reliable-ai-workflows/concepts/refining-concepts.md

index 6412e8d1c..de5cf0021 100644

--- a/docs/home/6-build-reliable-ai-workflows/concepts/refining-concepts.md

+++ b/docs/home/6-build-reliable-ai-workflows/concepts/refining-concepts.md

@@ -1,6 +1,6 @@

# Refining Concepts

-Concept refinement allows you to create more specific versions of existing concepts while inheriting their structure. This provides semantic clarity and type safety for domain-specific workflows.

+Concept refinement allows you to create more specific versions of existing concepts while inheriting their structure. This provides semantic clarity and type safety for domain-specific methods.

## What is Concept Refinement?

@@ -37,7 +37,7 @@ inputs = { contract = "Contract" } # Clear what type of document is expected

output = "ContractTerms"

```

-### 3. Domain-Specific Workflows

+### 3. Domain-Specific Methods

Build pipelines tailored to specific use cases:

@@ -184,6 +184,92 @@ refines = "Customer"

Both `VIPCustomer` and `InactiveCustomer` will have access to the `name` and `email` fields defined in `Customer`. When you create content for these concepts, it will be compatible with the base `Customer` structure.

+## Cross-Package Refinement

+

+You can refine concepts that live in a different package. This lets you specialize a shared concept from a dependency without modifying the dependency itself.

+

+### Syntax

+

+Use the `->` cross-package reference operator in the `refines` field:

+

+```toml

+[concept.RefinedConcept]

+description = "A more specialized version of a cross-package concept"

+refines = "alias->domain.BaseConceptCode"

+```

+

+| Part | Description |

+|------|-------------|

+| `alias` | The dependency alias declared in your `METHODS.toml` `[dependencies]` section |

+| `->` | Cross-package reference operator |

+| `domain` | The dot-separated domain path inside the dependency package |

+| `BaseConceptCode` | The `PascalCase` concept code to refine |

+

+### Full Example

+

+Suppose you depend on a scoring library that defines a `WeightedScore` concept:

+

+**Dependency package** (`scoring-lib`):

+

+```toml title="METHODS.toml"

+[package]

+address = "github.com/acme/scoring-lib"

+version = "2.0.0"

+description = "Scoring utilities."

+

+[exports.scoring]

+pipes = ["compute_weighted_score"]

+```

+

+```toml title="scoring.mthds"

+domain = "scoring"

+

+[concept.WeightedScore]

+description = "A weighted score result"

+

+[pipe.compute_weighted_score]

+type = "PipeLLM"

+description = "Compute a weighted score"

+output = "WeightedScore"

+prompt = "Compute a weighted score for: {{ item }}"

+```

+

+**Your consumer package**:

+

+```toml title="METHODS.toml"

+[package]

+address = "github.com/acme/analysis-app"

+version = "1.0.0"

+description = "Analysis application."

+

+[dependencies]

+scoring_lib = { address = "github.com/acme/scoring-lib", version = "^2.0.0" }

+

+[exports.analysis]

+pipes = ["compute_detailed_score"]

+```

+

+```toml title="analysis.mthds"

+domain = "analysis"

+

+[concept.DetailedScore]

+description = "An extended score with additional detail"

+refines = "scoring_lib->scoring.WeightedScore"

+

+[pipe.compute_detailed_score]

+type = "PipeLLM"

+description = "Compute a detailed score"

+output = "DetailedScore"

+prompt = "Compute a detailed score for: {{ item }}"

+```

+

+`DetailedScore` inherits the structure of `WeightedScore` from the `scoring_lib` dependency's `scoring` domain.

+

+!!! important

+    The base concept must be accessible from the dependency: either the dependency exports pipes in the domain that contains the concept, or that domain is reachable via an exported pipe's bundle.

+

+For more on how dependencies and cross-package references work, see [Packages](../packages.md#cross-package-references).

+

## Type Compatibility

Understanding how refined concepts interact with pipe inputs is crucial.

@@ -287,7 +373,7 @@ refines = "Document"

- ✅ Your concept is semantically a specific type of an existing concept

- ✅ The base concept's structure is sufficient for your needs

- ✅ You want to inherit existing validation and behavior

-- ✅ You're building domain-specific workflows with clear document/content types

+- ✅ You're building domain-specific methods with clear document/content types

- ✅ You need to create specialized versions of an existing concept

**Examples:**

@@ -312,4 +398,5 @@ refines = "Customer"

- [Native Concepts](native-concepts.md) - Complete guide to native concepts

- [Inline Structures](inline-structures.md) - Add structure to concepts

- [Python StructuredContent Classes](python-classes.md) - Advanced customization

+- [Packages](../packages.md) - Package system, dependencies, and cross-package references
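The cross-package `refines` syntax and the compatibility rule described above (content for a refined concept is compatible with its base) can be sketched in Python. This is a minimal, hypothetical illustration, not Pipelex's resolver. `ConceptRef`, `parse_concept_ref` and `is_compatible` are names invented here, and the exact `snake_case`/`PascalCase` character sets are assumptions. References are compared exactly as written; a real resolver would presumably map aliases to package addresses first.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Dict, Optional, Set

# Assumed character sets; the docs only say "snake_case" and "PascalCase".
SNAKE_SEGMENT = re.compile(r"[a-z][a-z0-9_]*")
PASCAL_CODE = re.compile(r"[A-Z][A-Za-z0-9]*")


@dataclass(frozen=True)
class ConceptRef:
    alias: Optional[str]   # dependency alias from METHODS.toml [dependencies], None if local
    domain: Optional[str]  # dot-separated domain path, None for a bare local code
    code: str              # PascalCase concept code


def parse_concept_ref(ref: str) -> ConceptRef:
    """Split "alias->domain.Code", "domain.Code" or "Code" into its parts (hypothetical)."""
    alias: Optional[str] = None
    if "->" in ref:
        alias, ref = ref.split("->", 1)
        if not alias:
            raise ValueError("empty dependency alias before '->'")
    domain, _, code = ref.rpartition(".")
    if alias is not None and not domain:
        raise ValueError("a cross-package reference needs a domain path")
    if domain and not all(SNAKE_SEGMENT.fullmatch(s) for s in domain.split(".")):
        raise ValueError(f"invalid domain path: {domain!r}")
    if not PASCAL_CODE.fullmatch(code):
        raise ValueError(f"concept code must be PascalCase: {code!r}")
    return ConceptRef(alias, domain or None, code)


def is_compatible(
    actual: ConceptRef, expected: ConceptRef, refines: Dict[ConceptRef, ConceptRef]
) -> bool:
    """True if `actual` equals `expected` or refines it, directly or transitively."""
    seen: Set[ConceptRef] = set()
    current: Optional[ConceptRef] = actual
    while current is not None and current not in seen:
        if current == expected:
            return True
        seen.add(current)
        current = refines.get(current)  # follow the `refines` link, if any
    return False


# The example from this page: DetailedScore refines scoring_lib->scoring.WeightedScore.
detailed = parse_concept_ref("analysis.DetailedScore")
weighted = parse_concept_ref("scoring_lib->scoring.WeightedScore")
refines = {detailed: weighted}

print(weighted)  # ConceptRef(alias='scoring_lib', domain='scoring', code='WeightedScore')
print(is_compatible(detailed, weighted, refines))  # True
print(is_compatible(weighted, detailed, refines))  # False
```

Compatibility only runs one way: a `DetailedScore` can stand in for a `WeightedScore`, not the reverse. The `seen` set keeps the walk up the `refines` chain from looping forever if a cycle is ever declared by mistake.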

diff --git a/docs/home/6-build-reliable-ai-workflows/configure-ai-llm-to-optimize-workflows.md b/docs/home/6-build-reliable-ai-workflows/configure-ai-llm-to-optimize-methods.md

similarity index 100%

rename from docs/home/6-build-reliable-ai-workflows/configure-ai-llm-to-optimize-workflows.md

rename to docs/home/6-build-reliable-ai-workflows/configure-ai-llm-to-optimize-methods.md

diff --git a/docs/home/6-build-reliable-ai-workflows/domain.md b/docs/home/6-build-reliable-ai-workflows/domain.md

index 93b86d62c..8482bd477 100644

--- a/docs/home/6-build-reliable-ai-workflows/domain.md

+++ b/docs/home/6-build-reliable-ai-workflows/domain.md

@@ -1,6 +1,6 @@

# Understanding Domains

-A domain in Pipelex is a **semantic namespace** that organizes related concepts and pipes. It's declared at the top of every `.plx` file and serves as an identifier for grouping related functionality.

+A domain in Pipelex is a **semantic namespace** that organizes related concepts and pipes. It's declared at the top of every `.mthds` file and serves as an identifier for grouping related functionality.

## What is a Domain?

@@ -12,7 +12,7 @@ A domain is defined by three properties:

## Declaring a Domain

-Every `.plx` file must declare its domain at the beginning:

+Every `.mthds` file must declare its domain at the beginning:

```toml

domain = "invoice_processing"

@@ -39,6 +39,37 @@ system_prompt = "You are an expert in financial document analysis and invoice pr

❌ domain = "invoiceProcessing" # camelCase not allowed

```

+## Hierarchical Domains

+

+Domains support **dotted paths** to express a hierarchy:

+

+```toml

+domain = "legal"

+domain = "legal.contracts"

+domain = "legal.contracts.shareholder"

+```

+

+Each segment must be `snake_case`. The hierarchy is organizational — there is no scope inheritance between parent and child domains. `legal.contracts` and `legal` are independent namespaces; defining concepts in one does not affect the other.

+

+**Valid hierarchical domains:**

+

+```toml

+✅ domain = "legal.contracts"

+✅ domain = "legal.contracts.shareholder"

+✅ domain = "finance.reporting"

+```

+

+**Invalid hierarchical domains:**

+

+```toml

+❌ domain = ".legal" # Cannot start with a dot

+❌ domain = "legal." # Cannot end with a dot

+❌ domain = "legal..contracts" # No consecutive dots

+❌ domain = "Legal.Contracts" # Segments must be snake_case

+```

+

+Hierarchical domains are used in the `[exports]` section of `METHODS.toml` to control pipe visibility across domains. See [Packages](./packages.md) for details.

+

## How Domains Work

### Concept Namespacing

@@ -68,14 +99,14 @@ This creates two concepts:

The domain code prevents naming conflicts. Multiple bundles can define concepts with the same name if they're in different domains:

```toml

-# finance.plx

+# finance.mthds

domain = "finance"

[concept]

Report = "A financial report"

```

```toml

-# marketing.plx

+# marketing.mthds

domain = "marketing"

[concept]

Report = "A marketing campaign report"

@@ -85,17 +116,17 @@ Result: Two different concepts (`finance.Report` and `marketing.Report`) with no

### Multiple Bundles, Same Domain

-Multiple `.plx` files can declare the same domain. They all contribute to that domain's namespace:

+Multiple `.mthds` files can declare the same domain. They all contribute to that domain's namespace:

```toml

-# finance_invoices.plx

+# finance_invoices.mthds

domain = "finance"

[concept]

Invoice = "..."

```

```toml

-# finance_payments.plx

+# finance_payments.mthds

domain = "finance"

[concept]

Payment = "..."

@@ -170,7 +201,8 @@ Individual pipes can override the domain system prompt by defining their own `sy

## Related Documentation

+- [Packages](./packages.md) - Controlling pipe visibility with exports

- [Pipelex Bundle Specification](./pipelex-bundle-specification.md) - How domains are declared in bundles

-- [Kick off a Pipelex Workflow Project](./kick-off-a-pipelex-workflow-project.md) - Getting started

+- [Kick off a Pipelex Method Project](./kick-off-a-methods-project.md) - Getting started

- [Define Your Concepts](./concepts/define_your_concepts.md) - Creating concepts within domains

- [Designing Pipelines](./pipes/index.md) - Building pipes within domains
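The hierarchical domain rules listed above (dot-separated `snake_case` segments, with no leading, trailing or consecutive dots) and the `domain.ConceptCode` namespacing can be sketched as a small Python validator. The function names are hypothetical, and the exact `snake_case` character set (a lowercase letter first, then lowercase letters, digits or underscores) is an assumption; Pipelex's own validation may differ.

```python
import re

# Assumed snake_case segment: lowercase letter, then lowercase letters, digits or "_".
SNAKE_SEGMENT = re.compile(r"[a-z][a-z0-9_]*")


def is_valid_domain(domain: str) -> bool:
    """Check a (possibly dotted) domain against the documented rules.

    Splitting on "." turns a leading, trailing or doubled dot into an empty
    segment, which fails the full match below.
    """
    return all(SNAKE_SEGMENT.fullmatch(segment) for segment in domain.split("."))


def qualify(domain: str, concept_code: str) -> str:
    """Build the namespaced concept reference, e.g. "finance.Report" (hypothetical helper)."""
    if not is_valid_domain(domain):
        raise ValueError(f"invalid domain: {domain!r}")
    return f"{domain}.{concept_code}"


for candidate in ["legal.contracts", ".legal", "legal.", "legal..contracts", "Legal.Contracts"]:
    print(f"{candidate!r}: {is_valid_domain(candidate)}")

# Same concept code, different domains: two distinct references.
print(qualify("finance", "Report"))    # finance.Report
print(qualify("marketing", "Report"))  # marketing.Report
# No scope inheritance: a parent and a child domain are separate namespaces.
print(qualify("legal", "Contract") == qualify("legal.contracts", "Contract"))  # False
```

Treating the dotted path as a plain string key mirrors the documented behavior: `legal` and `legal.contracts` share a prefix for organization only, so nothing defined in one leaks into the other.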