diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000..5cb922e

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,4 @@

+outdir/

+__pycache__

+*.egg-info/

+*.swp

diff --git a/.pylintrc b/.pylintrc

new file mode 100644

index 0000000..82d676a

--- /dev/null

+++ b/.pylintrc

@@ -0,0 +1,20 @@

+[MESSAGES CONTROL]

+

+disable=

+ bad-continuation,

+ bad-whitespace,

+ invalid-name,

+ no-else-return,

+ superfluous-parens,

+ too-few-public-methods,

+ trailing-newlines,

+ duplicate-code,

+ missing-function-docstring,

+ missing-class-docstring,

+ missing-module-docstring,

+ consider-using-f-string,

+

+[TYPECHECK]

+generated-members=

+ torch

+

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000..9d0fff5

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,26 @@

+Copyright (c) 2021-2025, The LS4GAN Project Developers

+All rights reserved.

+

+Redistribution and use in source and binary forms, with or without

+modification, are permitted provided that the following conditions are met:

+

+1. Redistributions of source code must retain the above copyright notice,

+ this list of conditions and the following disclaimer.

+2. Redistributions in binary form must reproduce the above copyright

+ notice, this list of conditions and the following disclaimer in the

+ documentation and/or other materials provided with the distribution.

+

+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDER AND CONTRIBUTORS "AS IS"

+AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

+IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

+ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE

+LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

+CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

+SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

+INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

+CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

+ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

+POSSIBILITY OF SUCH DAMAGE.

+

+The above copyright and license notices apply to all files in this repository

+except for any file that contains its own copyright and/or license declaration.

diff --git a/README.md b/README.md

index cf5ed5b..023e838 100644

--- a/README.md

+++ b/README.md

@@ -1 +1,308 @@

-The code will be available shortly.

+# UVCGAN-S: Stratified CycleGAN for Unsupervised Data Decomposition

+

+

+

+## Overview

+

+

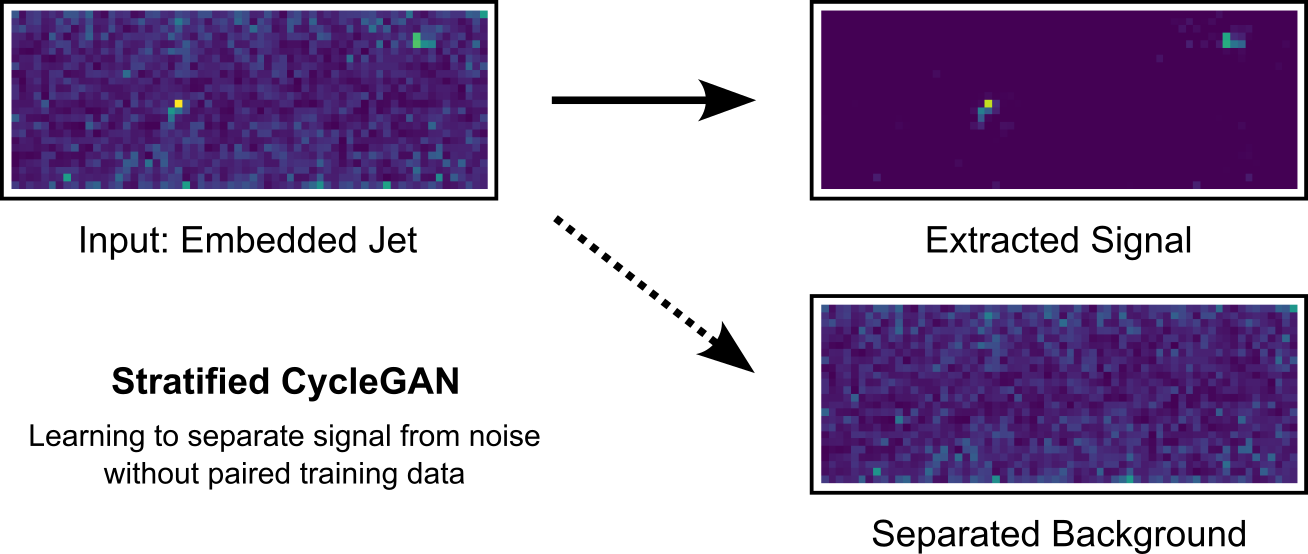

+This repository provides a reference implementation of Stratified CycleGAN

+(UVCGAN-S), an architecture for unsupervised signal extraction from mixed data.

+

+What problem does Stratified CycleGAN solve?

+

+Imagine you have three datasets. The first contains clean signals. The second

+contains backgrounds. The third contains mixed data where signals and

+backgrounds have been combined in some complicated way. You don't know exactly

+how the mixing happens, and you can't find pairs that show which clean signal

+corresponds to which mixed observation. But you need to take new mixed data and

+decompose it back into signal and background components.

+

+Stratified CycleGAN learns to do this decomposition from unpaired examples.

+You show it random samples of signals, random samples of backgrounds and mixed

+data, and it figures out both how to combine signals and backgrounds into

+realistic mixed data, and how to decompose mixed data back into its parts.

+

+


+

+

+

+See the [Quick Start](#quick-start-guide) section for a concrete example

+using cat and dog images.

+

+

+## Installation

+

+The package has been tested only on Linux.

+

+

+### Environment Setup

+

+The development environment is based on the

+`pytorch/pytorch:1.12.1-cuda11.3-cudnn8-runtime` container.

+

+There are several ways to set up the package environment:

+

+**Option 1: Docker**

+

+Download the docker container `pytorch/pytorch:1.12.1-cuda11.3-cudnn8-runtime`.

+Inside the container, create a virtual environment to avoid package conflicts:

+```bash

+python3 -m venv --system-site-packages ~/.venv/uvcgan-s

+source ~/.venv/uvcgan-s/bin/activate

+```

+

+**Option 2: Conda**

+```bash

+conda env create -f contrib/conda_env.yaml

+conda activate uvcgan-s

+```

+

+### Install Package

+

+Once the environment is set, install the `uvcgan-s` package and its

+requirements:

+

+```bash

+pip install -r requirements.txt

+pip install -e .

+```

+

+### Environment Variables

+

+By default, UVCGAN-S reads datasets from `./data` and saves models to

+`./outdir`. If any other location is desired, these defaults can be overridden

+with:

+```bash

+export UVCGAN_S_DATA=/path/to/datasets

+export UVCGAN_S_OUTDIR=/path/to/models

+```

+

+## Quick Start Guide

+

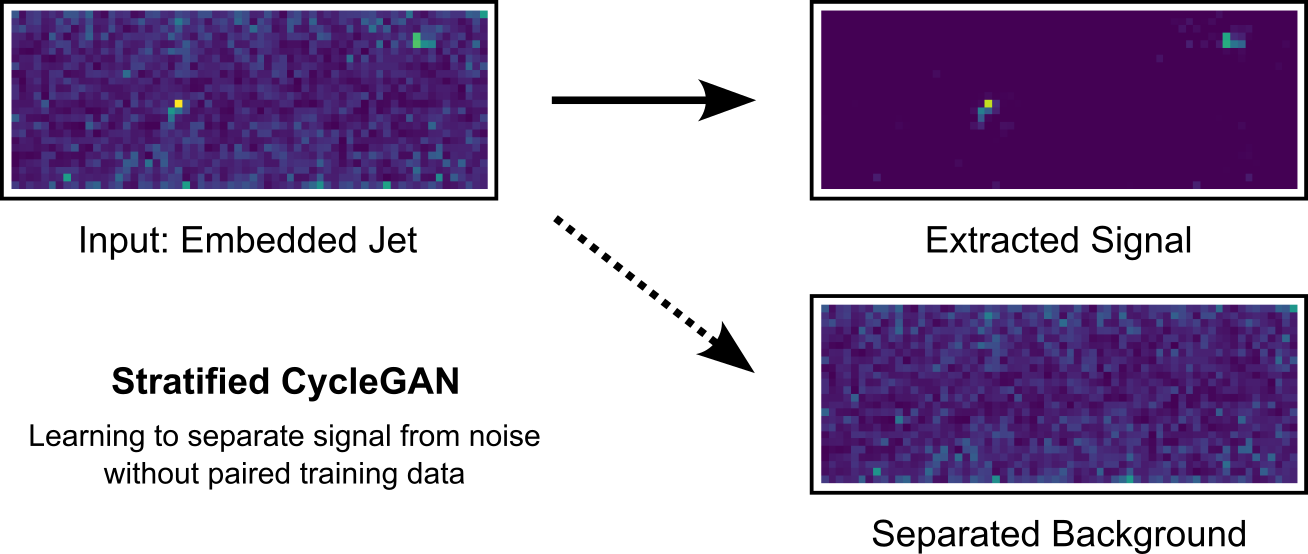

+This package was developed for sPHENIX jet signal extraction. However, jet

+signal analysis requires familiarity with sPHENIX-specific reconstruction

+algorithms and jet quality analysis procedures. This section demonstrates the

+Stratified CycleGAN method on a simpler toy problem with an intuitive

+interpretation. The toy example illustrates the basic workflow and

+serves as a template for applying the method to your own data.

+

+


+

+

+The toy problem is this: we have images that contain a blurry mix of cat and

+dog faces. The goal is to automatically decompose these mixed images into

+separate cat and dog images. To this end, we present Stratified CycleGAN with

+the mixed images, a random sample of cat images, and a random sample of dog

+images. By observing these three collections, the model learns what cats

+typically look like, what dogs typically look like, and how best to decompose

+a given mixed image into a cat and a dog. Importantly, the model is never

+shown training pairs like "this specific mixed image was created from this

+specific cat image and this specific dog image." It only sees random examples

+from each collection and figures out the decomposition on its own.

+

+### Installation

+

+Before proceeding, install the package by following the instructions at the

+top of this README, if it is not installed already.

+

+### Dataset Preparation

+

+The toy example uses cat and dog images from the AFHQ dataset. To download and

+preprocess it:

+

+```bash

+# Download the AFHQ dataset

+./scripts/download_dataset.sh afhq

+

+# Resize all images to 256x256 pixels

+python3 scripts/downsize_right.py -s 256 256 -i lanczos \

+ "${UVCGAN_S_DATA:-./data}/afhq/" \

+ "${UVCGAN_S_DATA:-./data}/afhq_resized_lanczos"

+```

+

+The resizing script creates a new directory `afhq_resized_lanczos` containing

+256x256 versions of all images, which is the format expected by the training

+script.

+

+### Training

+

+To train the model, run the following command:

+```bash

+python3 scripts/train/toy_mix_blur/train_uvcgan-s.py

+```

+

+The script trains the Stratified CycleGAN model for 100 epochs. On an RTX 3090

+GPU, each epoch takes approximately 3 minutes, so the complete training process

+requires about 5 hours. The trained model and intermediate checkpoints are

+saved in the directory

+`${UVCGAN_S_OUTDIR:-./outdir}/toy_mix_blur/uvcgan-s/model_m(uvcgan-s)_d(resnet)_g(vit-modnet)_cat_dog_sub/`.

+

+The structure of the model directory is described in the

+[F.A.Q.](#what-is-the-structure-of-a-model-directory).

+

+### Evaluation

+

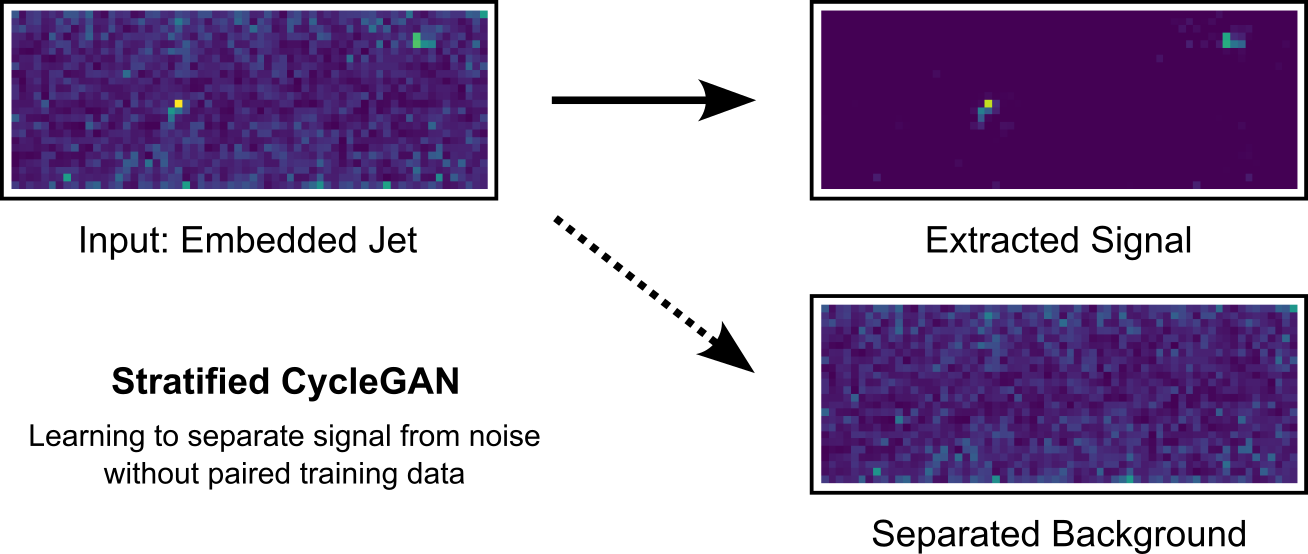

+


+

+

+

+After training completes, the model can be used to decompose images from the

+validation set of AFHQ. Run:

+```bash

+python3 scripts/translate_images.py \

+ "${UVCGAN_S_OUTDIR:-./outdir}/toy_mix_blur/uvcgan-s/cat_dog_sub" \

+ --split val \

+ --domain 2 \

+ --format image

+```

+

+This command takes the mixed cat-dog images (Domain B) and decomposes them

+into separate cat and dog components (Domain A). The `--domain 2` flag

+specifies that the input images come from Domain B, which contains the mixed

+data.

+

+The results are saved in the model directory under

+`evals/final/translated(None)_domain(2)_eval-val/`. This evaluation directory

+contains several subdirectories:

+

+- `fake_a0/` - extracted cat components

+- `fake_a1/` - extracted dog components

+- `real_b/` - original blurred mixture inputs

+

+Each subdirectory contains numbered image files (`sample_0.png`,

+ `sample_1.png`, etc.) corresponding to the validation set.

+

+

+### Adapting to Your Own Data

+

+To apply Stratified CycleGAN to a different decomposition problem, use the toy

+example training script as a starting point. The script

+`scripts/train/toy_mix_blur/train_uvcgan-s.py` contains a declarative

+configuration showing how to structure the three required datasets and set up

+the domain structure for decomposition. For a more complex example, see

+`scripts/train/sphenix/train_uvcgan-s.py`.

+

+

+## sPHENIX Application: Jet Background Subtraction

+

+The package was developed for extracting particle jets from heavy-ion collision

+backgrounds in sPHENIX calorimeter data. This section describes how to

+reproduce the paper results.

+

+### Dataset

+

+The sPHENIX dataset can be downloaded from Zenodo: https://zenodo.org/records/17783990

+

+Alternatively, use the download script:

+```bash

+./scripts/download_dataset.sh sphenix

+```

+

+The dataset contains HDF5 files with calorimeter energy measurements organized

+as 24×64 eta-phi grids. The data is split into training, validation, and test

+sets. Training data consists of three components: PYTHIA jets (the signal

+component), HIJING minimum-bias events (the background component), and

+embedded PYTHIA+HIJING events (the mixed data). The test set uses JEWEL jets

+embedded in HIJING backgrounds. JEWEL models jet-medium interactions

+differently from PYTHIA, providing an out-of-distribution test of the model's

+generalization capability.

+

+

+### Training or Using Pre-trained Model

+

+There are two options for obtaining a trained model: training from scratch or

+downloading the pre-trained model from the paper.

+

+To train a new model from scratch:

+```bash

+python3 scripts/train/sphenix/train_uvcgan-s.py

+```

+

+The training configuration uses the same Stratified CycleGAN architecture as

+the toy example, adapted for single-channel calorimeter data. The trained model

+is saved in the directory

+`${UVCGAN_S_OUTDIR:-./outdir}/sphenix/uvcgan-s/model_m(uvcgan-s)_d(resnet)_g(vit-modnet)_sgn_bkg_sub`

+(see [F.A.Q.](#what-is-the-structure-of-a-model-directory) for details on the

+ model directory structure).

+

+Alternatively, a pre-trained model can be downloaded from Zenodo: https://zenodo.org/records/17809156

+

+The pre-trained model can be used directly for evaluation without retraining.

+

+

+### Evaluation

+

+To evaluate the model on the test set:

+```bash

+python3 scripts/translate_images.py \

+ "${UVCGAN_S_OUTDIR:-./outdir}/path/to/sphenix/model" \

+ --split test \

+ --domain 2 \

+ --format ndarray

+```

+

+The `--format ndarray` flag saves results as NumPy arrays rather than images.

+The output structure is similar to the toy example: extracted signal and

+background components are saved in separate directories under `evals/final/`.

+Each output file contains a 24×64 calorimeter energy grid that can be used for

+physics analysis.

+

+

+# F.A.Q.

+

+## I am training my model on a multi-GPU node. How do I make sure that only one GPU is used?

+

+You can specify GPUs that `pytorch` will use with the help of the

+`CUDA_VISIBLE_DEVICES` environment variable. This variable can be set to a list

+of comma-separated GPU indices. When it is set, `pytorch` will only use GPUs

+whose IDs are listed in `CUDA_VISIBLE_DEVICES`; set it to a single index to use one GPU.

+

+

+## What is the structure of a model directory?

+

+`uvcgan-s` saves each model in a separate directory that contains:

+ - `MODEL/config.json` -- model architecture, training, and evaluation

+ configurations

+ - `MODEL/net_*.pth` -- PyTorch weights of model networks

+ - `MODEL/opt_*.pth` -- PyTorch weights of training optimizers

+ - `MODEL/shed_*.pth` -- PyTorch weights of training schedulers

+ - `MODEL/checkpoints/` -- training checkpoints

+ - `MODEL/evals/` -- evaluation results

+

+

+## Training fails with "Config collision detected" error

+

+`uvcgan-s` enforces a one-model-per-directory policy to prevent accidental

+overwrites of existing models. Each model directory must have a unique

+configuration - if you try to place a model with different settings in a

+directory that already contains a model, you'll receive a "Config collision

+detected" error.

+

+This safeguard helps prevent situations where you might accidentally lose

+trained models by starting a new training run with different parameters in the

+same directory.

+

+Solutions:

+1. To overwrite the old model: delete the old `config.json` configuration file

+ and restart the training process.

+2. To preserve the old model: modify the training script of the new model and

+ update the `label` or `outdir` configuration options to avoid collisions.

+

+

+# LICENSE

+

+`uvcgan-s` is distributed under the `BSD-2` license.

+

+The `uvcgan-s` repository contains some code (primarily in the `uvcgan_s/base`

+subdirectory) from [pytorch-CycleGAN-and-pix2pix][cyclegan_repo].

+This code is also licensed under the `BSD-2` license (please refer to

+`uvcgan_s/base/LICENSE` for details).

+

+Each code snippet that was taken from

+[pytorch-CycleGAN-and-pix2pix][cyclegan_repo] has a note about proper copyright

+attribution.

+

+[cyclegan_repo]: https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix
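
The README above describes two pieces of environment-driven configuration: the `UVCGAN_S_DATA` / `UVCGAN_S_OUTDIR` overrides and the `CUDA_VISIBLE_DEVICES` restriction. The following is a minimal Python sketch of both, assuming the same fallback semantics as the shell expansions `${UVCGAN_S_DATA:-./data}` and `${UVCGAN_S_OUTDIR:-./outdir}` used in the README commands. The helper names `resolve_dirs` and `single_gpu_env` are hypothetical and are not part of the package; how `uvcgan-s` itself reads these variables is not shown in this patch.

```python
import os


def resolve_dirs(environ=None):
    """Return (data_dir, out_dir), falling back to the README defaults."""
    env = os.environ if environ is None else environ
    # `or` mirrors the shell's ${VAR:-default}: unset and empty both fall back.
    data_dir = env.get("UVCGAN_S_DATA") or "./data"
    out_dir = env.get("UVCGAN_S_OUTDIR") or "./outdir"
    return data_dir, out_dir


def single_gpu_env(gpu_index, environ=None):
    """Return a copy of the environment that exposes only one GPU to PyTorch."""
    env = dict(os.environ if environ is None else environ)
    # A single index in CUDA_VISIBLE_DEVICES hides all other GPUs.
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    return env
```

The dictionary returned by `single_gpu_env(0)` could be passed as the `env` argument of `subprocess.run` when launching one of the training scripts, which leaves the parent process environment unchanged.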

diff --git a/contrib/conda_env.yaml b/contrib/conda_env.yaml

new file mode 100644

index 0000000..0f8e8a8

--- /dev/null

+++ b/contrib/conda_env.yaml

@@ -0,0 +1,119 @@

+name: uvcgan-s

+channels:

+ - pytorch

+ - nvidia

+ - defaults

+ - conda-forge

+dependencies:

+ - _libgcc_mutex=0.1

+ - _openmp_mutex=4.5

+ - backcall=0.2.0

+ - beautifulsoup4=4.11.1

+ - blas=1.0

+ - brotlipy=0.7.0

+ - bzip2=1.0.8

+ - ca-certificates=2022.07.19

+ - certifi=2022.6.15

+ - cffi=1.15.0

+ - chardet=4.0.0

+ - charset-normalizer=2.0.4

+ - colorama=0.4.4

+ - conda=4.13.0

+ - conda-build=3.21.9

+ - conda-content-trust=0.1.1

+ - conda-package-handling=1.8.1

+ - cryptography=37.0.1

+ - cudatoolkit=11.3.1

+ - decorator=5.1.1

+ - ffmpeg=4.3

+ - filelock=3.6.0

+ - freetype=2.11.0

+ - giflib=5.2.1

+ - glob2=0.7

+ - gmp=6.2.1

+ - gnutls=3.6.15

+ - icu=58.2

+ - idna=3.3

+ - intel-openmp=2021.4.0

+ - ipython=7.31.1

+ - jedi=0.18.1

+ - jinja2=2.10.1

+ - jpeg=9e

+ - lame=3.100

+ - lcms2=2.12

+ - ld_impl_linux-64=2.35.1

+ - libarchive=3.5.2

+ - libffi=3.3

+ - libgcc-ng=9.3.0

+ - libgomp=9.3.0

+ - libiconv=1.16

+ - libidn2=2.3.2

+ - liblief=0.11.5

+ - libpng=1.6.37

+ - libstdcxx-ng=9.3.0

+ - libtasn1=4.16.0

+ - libtiff=4.2.0

+ - libunistring=0.9.10

+ - libwebp=1.2.2

+ - libwebp-base=1.2.2

+ - libxml2=2.9.14

+ - lz4-c=1.9.3

+ - markupsafe=2.0.1

+ - matplotlib-inline=0.1.2

+ - mkl=2021.4.0

+ - mkl-service=2.4.0

+ - mkl_fft=1.3.1

+ - mkl_random=1.2.2

+ - ncurses=6.3

+ - nettle=3.7.3

+ - numpy=1.21.5

+ - numpy-base=1.21.5

+ - openh264=2.1.1

+ - openssl=1.1.1q

+ - parso=0.8.3

+ - patchelf=0.13

+ - pexpect=4.8.0

+ - pickleshare=0.7.5

+ - pillow=9.0.1

+ - pip=22.1.2

+ - pkginfo=1.8.2

+ - prompt-toolkit=3.0.20

+ - psutil=5.8.0

+ - ptyprocess=0.7.0

+ - py-lief=0.11.5

+ - pycosat=0.6.3

+ - pycparser=2.21

+ - pygments=2.11.2

+ - pyopenssl=22.0.0

+ - pysocks=1.7.1

+ - python=3.7.13

+ - python-libarchive-c=2.9

+ - pytorch=1.12.1

+ - pytorch-mutex=1.0

+ - pytz=2022.1

+ - pyyaml=6.0

+ - readline=8.1.2

+ - requests=2.27.1

+ - ripgrep=12.1.1

+ - ruamel_yaml=0.15.100

+ - setuptools=61.2.0

+ - six=1.16.0

+ - soupsieve=2.3.1

+ - sqlite=3.38.2

+ - tk=8.6.11

+ - torchtext=0.13.1

+ - torchvision=0.13.1

+ - tqdm=4.63.0

+ - traitlets=5.1.1

+ - typing_extensions=4.3.0

+ - tzdata=2022a

+ - urllib3=1.26.8

+ - wcwidth=0.2.5

+ - wheel=0.37.1

+ - xz=5.2.5

+ - yaml=0.2.5

+ - zlib=1.2.12

+ - zstd=1.5.2

+ - einops=0.4.*

+ - h5py=3.6.*

+ - pandas=1.3.*

diff --git a/requirements.txt b/requirements.txt

new file mode 100644

index 0000000..ca95049

--- /dev/null

+++ b/requirements.txt

@@ -0,0 +1,8 @@

+einops==0.4.1

+h5py==3.6.0

+numpy==1.21.5

+pandas==1.3.3

+Pillow==9.0.1

+torch==1.12.1

+torchvision==0.13.1

+tqdm==4.63.0

diff --git a/scripts/download_dataset.sh b/scripts/download_dataset.sh

new file mode 100755

index 0000000..7300076

--- /dev/null

+++ b/scripts/download_dataset.sh

@@ -0,0 +1,166 @@

+#!/usr/bin/env bash

+

+DATADIR="${UVCGAN_S_DATA:-data}"

+

+declare -A URL_LIST=(

+ [afhq]="https://www.dropbox.com/s/t9l9o3vsx2jai3z/afhq.zip"

+ [sphenix]="https://zenodo.org/record/17783990/files/2025-06-05_jet_bkg_sub.tar"

+)

+

+declare -A CHECKSUMS=(

+ [afhq]="7f63dcc14ef58c0e849b59091287e1844da97016073aac20403ae6c6132b950f"

+)

+

+die ()

+{

+ echo "${*}"

+ exit 1

+}

+

+usage ()

+{

+ cat < 0: # create an empty pool

+ self.num_imgs = 0

+ self.images = []

+

+ def query(self, images):

+ """Return an image from the pool.

+

+ Parameters:

+ images: the latest generated images from the generator

+

+ Returns images from the buffer.

+

+ With probability 0.5, the buffer returns the input images unchanged.

+ With probability 0.5, it returns images previously stored in the buffer

+ and inserts the current images into the buffer in their place.

+ """

+ if self.pool_size == 0: # if the buffer size is 0, do nothing

+ return images

+ return_images = []

+ for image in images:

+ image = torch.unsqueeze(image.data, 0)

+ if self.num_imgs < self.pool_size: # if the buffer is not full; keep inserting current images to the buffer

+ self.num_imgs = self.num_imgs + 1

+ self.images.append(image)

+ return_images.append(image)

+ else:

+ p = random.uniform(0, 1)

+ if p > 0.5: # by 50% chance, the buffer will return a previously stored image, and insert the current image into the buffer

+ random_id = random.randint(0, self.pool_size - 1) # randint is inclusive

+ tmp = self.images[random_id].clone()

+ self.images[random_id] = image

+ return_images.append(tmp)

+ else: # by another 50% chance, the buffer will return the current image

+ return_images.append(image)

+ return_images = torch.cat(return_images, 0) # collect all the images and return

+ return return_images
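
The replay logic of `query` above can be illustrated without PyTorch. The following is a simplified sketch, not the code from this patch: the class name `HistoryPool` is hypothetical, items are arbitrary Python objects rather than tensors, and the random generator is injected so that runs can be made reproducible.

```python
import random


class HistoryPool:
    """Simplified, tensor-free version of the image buffer logic."""

    def __init__(self, pool_size, rng=None):
        self.pool_size = pool_size
        self.items = []
        self.rng = rng if rng is not None else random.Random()

    def query(self, batch):
        if self.pool_size == 0:
            # A zero-sized pool is a pass-through.
            return list(batch)
        out = []
        for item in batch:
            if len(self.items) < self.pool_size:
                # Pool not full yet: store the item and return it unchanged.
                self.items.append(item)
                out.append(item)
            elif self.rng.uniform(0, 1) > 0.5:
                # Swap: return a stored item and keep the new one in its slot.
                idx = self.rng.randint(0, self.pool_size - 1)  # inclusive bounds
                out.append(self.items[idx])
                self.items[idx] = item
            else:
                # Return the current item and leave the pool untouched.
                out.append(item)
        return out
```

Each queried item ends up either in the returned batch or in the pool, so nothing is dropped until a stored item is swapped out and returned.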

diff --git a/uvcgan_s/base/losses.py b/uvcgan_s/base/losses.py

new file mode 100644

index 0000000..025b03a

--- /dev/null

+++ b/uvcgan_s/base/losses.py

@@ -0,0 +1,200 @@

+import torch

+from torch import nn

+

+def reduce_loss(loss, reduction):

+ if (reduction is None) or (reduction == 'none'):

+ return loss

+

+ if reduction == 'mean':

+ return loss.mean()

+

+ if reduction == 'sum':

+ return loss.sum()

+

+ raise ValueError(f"Unknown reduction method: '{reduction}'")

+

+class GANLoss(nn.Module):

+ """Define different GAN objectives.

+

+ The GANLoss class abstracts away the need to create the target label tensor

+ that has the same size as the input.

+ """

+

+ def __init__(

+ self, gan_mode, target_real_label = 1.0, target_fake_label = 0.0,

+ reduction = 'mean'

+ ):

+ """ Initialize the GANLoss class.

+

+ Parameters:

+ gan_mode (str) -- the type of GAN objective.

+ Choices: lsgan, vanilla, softplus, and wgan.

+ target_real_label (float) -- label for a real image

+ target_fake_label (float) -- label for a fake image

+

+ Note: Do not use sigmoid as the last layer of Discriminator.

+ LSGAN needs no sigmoid. Vanilla GANs will handle it with

+ BCEWithLogitsLoss.

+ """

+ super().__init__()

+

+ # pylint: disable=not-callable

+ self.register_buffer('real_label', torch.tensor(target_real_label))

+ self.register_buffer('fake_label', torch.tensor(target_fake_label))

+

+ self.gan_mode = gan_mode

+ self.reduction = reduction

+

+ if gan_mode == 'lsgan':

+ self.loss = nn.MSELoss(reduction = reduction)

+

+ elif gan_mode == 'vanilla':

+ self.loss = nn.BCEWithLogitsLoss(reduction = reduction)

+

+ elif gan_mode == 'softplus':

+ self.loss = nn.Softplus()

+

+ elif gan_mode == 'wgan':

+ self.loss = None

+

+ else:

+ raise NotImplementedError('gan mode %s not implemented' % gan_mode)

+

+ def get_target_tensor(self, prediction, target_is_real):

+ """Create label tensors with the same size as the input.

+

+ Parameters:

+ prediction (tensor) -- typically the prediction from a

+ discriminator

+ target_is_real (bool) -- if the ground truth label is for real

+ images or fake images

+

+ Returns:

+ A label tensor filled with ground truth label, and with the size of

+ the input

+ """

+

+ if target_is_real:

+ target_tensor = self.real_label

+ else:

+ target_tensor = self.fake_label

+ return target_tensor.expand_as(prediction)

+

+ def eval_wgan_loss(self, prediction, target_is_real):

+ if target_is_real:

+ result = -prediction.mean()

+ else:

+ result = prediction.mean()

+

+ return reduce_loss(result, self.reduction)

+

+ def eval_softplus_loss(self, prediction, target_is_real):

+ if target_is_real:

+ result = self.loss(prediction)

+ else:

+ result = self.loss(-prediction)

+

+ return reduce_loss(result, self.reduction)

+

+ def forward(self, prediction, target_is_real):

+ """Calculate loss given Discriminator's output and ground truth labels.

+

+ Parameters:

+ prediction (tensor) -- typically the prediction output from a

+ discriminator

+ target_is_real (bool) -- if the ground truth label is for real

+ images or fake images

+

+ Returns:

+ the calculated loss.

+ """

+

+ if isinstance(prediction, (list, tuple)):

+ result = sum(self.forward(x, target_is_real) for x in prediction)

+ return result / len(prediction)

+

+ if self.gan_mode == 'wgan':

+ return self.eval_wgan_loss(prediction, target_is_real)

+

+ if self.gan_mode == 'softplus':

+ return self.eval_softplus_loss(prediction, target_is_real)

+

+ target_tensor = self.get_target_tensor(prediction, target_is_real)

+ return self.loss(prediction, target_tensor)

+

+# pylint: disable=too-many-arguments

+# pylint: disable=redefined-builtin

+def cal_gradient_penalty(

+ netD, real_data, fake_data, device,

+ type = 'mixed', constant = 1.0, lambda_gp = 10.0

+):

+ """Calculate the gradient penalty loss, used in WGAN-GP

+

+ source: https://arxiv.org/abs/1704.00028

+

+ Arguments:

+ netD (network) -- discriminator network

+ real_data (tensor array) -- real images

+ fake_data (tensor array) -- generated images from the generator

+ device (str) -- torch device

+ type (str) -- if we mix real and fake data or not

+ Choices: [real | fake | mixed].

+ constant (float) -- the constant used in formula:

+ (||gradient||_2 - constant)^2

+ lambda_gp (float) -- weight for this loss

+

+ Returns a tuple (gradient penalty loss, gradients), or (0.0, None) if lambda_gp is 0.

+ """

+ if lambda_gp == 0.0:

+ return 0.0, None

+

+ if type == 'real':

+ interpolatesv = real_data

+ elif type == 'fake':

+ interpolatesv = fake_data

+ elif type == 'mixed':

+ alpha = torch.rand(real_data.shape[0], 1, device = device)

+ alpha = alpha.expand(

+ real_data.shape[0], real_data.nelement() // real_data.shape[0]

+ ).contiguous().view(*real_data.shape)

+

+ interpolatesv = alpha * real_data + ((1 - alpha) * fake_data)

+ else:

+ raise NotImplementedError('{} not implemented'.format(type))

+

+ interpolatesv.requires_grad_(True)

+ disc_interpolates = netD(interpolatesv)

+

+ gradients = torch.autograd.grad(

+ outputs=disc_interpolates, inputs=interpolatesv,

+ grad_outputs=torch.ones(disc_interpolates.size()).to(device),

+ create_graph=True, retain_graph=True, only_inputs=True

+ )

+

+ gradients = gradients[0].view(real_data.size(0), -1)

+

+ gradient_penalty = (

+ ((gradients + 1e-16).norm(2, dim=1) - constant) ** 2

+ ).mean() * lambda_gp

+

+ return gradient_penalty, gradients

+

+def calc_zero_gp(model, x, **model_kwargs):

+ x.requires_grad_(True)

+ y = model(x, **model_kwargs)

+

+ grad = torch.autograd.grad(

+ outputs = y,

+ inputs = x,

+ grad_outputs = torch.ones(y.size()).to(y.device),

+ create_graph = True,

+ retain_graph = True,

+ only_inputs = True

+ )

+

+ grad = grad[0].view(x.shape[0], -1)

+ # NOTE: 1/2 for backward compatibility

+ gp = 1/2 * torch.sum(grad.square(), dim = 1).mean()

+

+ return gp, grad

+
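
The four objectives selected by `gan_mode` in `GANLoss` above can be written out in plain Python to make the sign conventions explicit. This is an illustrative sketch with mean reduction over a flat list of discriminator outputs, not the implementation in this patch: the function names `gan_loss`, `softplus`, and `mean` are hypothetical, and the `softplus` branch follows the sign convention of `eval_softplus_loss` as shown in the diff.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mean(values):
    return sum(values) / len(values)


def gan_loss(prediction, target_is_real, mode, real_label=1.0, fake_label=0.0):
    """Mean-reduced GAN objective for a list of raw discriminator outputs."""
    if mode == "wgan":
        # Real samples are pushed up, fake samples are pushed down.
        m = mean(prediction)
        return -m if target_is_real else m
    if mode == "softplus":
        # Same sign convention as eval_softplus_loss in the diff above.
        sign = 1.0 if target_is_real else -1.0
        return mean([softplus(sign * p) for p in prediction])
    target = real_label if target_is_real else fake_label
    if mode == "lsgan":
        # Mean squared error against the constant label.
        return mean([(p - target) ** 2 for p in prediction])
    if mode == "vanilla":
        # Binary cross-entropy on logits: softplus(p) - target * p.
        return mean([softplus(p) - target * p for p in prediction])
    raise NotImplementedError("gan mode %s not implemented" % mode)
```

For example, `gan_loss([2.0, 4.0], True, "wgan")` evaluates to `-3.0`, the negated mean of the predictions.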

diff --git a/uvcgan_s/base/networks.py b/uvcgan_s/base/networks.py

new file mode 100644

index 0000000..e70024c

--- /dev/null

+++ b/uvcgan_s/base/networks.py

@@ -0,0 +1,411 @@

+# pylint: disable=line-too-long

+# pylint: disable=redefined-builtin

+# pylint: disable=too-many-arguments

+# pylint: disable=unidiomatic-typecheck

+# pylint: disable=super-with-arguments

+

+import functools

+

+import torch

+from torch import nn

+

+class Identity(nn.Module):

+ # pylint: disable=no-self-use

+ def forward(self, x):

+ return x

+

+def get_norm_layer(norm_type='instance'):

+ """Return a normalization layer

+

+ Parameters:

+ norm_type (str) -- the name of the normalization layer: batch | instance | none

+

+ For BatchNorm, we use learnable affine parameters and track running statistics (mean/stddev).

+ For InstanceNorm, we do not use learnable affine parameters. We do not track running statistics.

+ """

+ if norm_type == 'batch':

+ norm_layer = functools.partial(nn.BatchNorm2d, affine=True, track_running_stats=True)

+ elif norm_type == 'instance':

+ norm_layer = functools.partial(nn.InstanceNorm2d, affine=False, track_running_stats=False)

+ elif norm_type == 'none':

+ norm_layer = lambda _features : Identity()

+ else:

+ raise NotImplementedError('normalization layer [%s] is not found' % norm_type)

+

+ return norm_layer

+

+def join_args(a, b):

+ return { **a, **b }

+

+def select_base_generator(model, **kwargs):

+ default_args = dict(norm = 'instance', use_dropout = False, ngf = 64)

+ kwargs = join_args(default_args, kwargs)

+

+ if model == 'resnet_9blocks':

+ return ResnetGenerator(n_blocks = 9, **kwargs)

+

+ if model == 'resnet_6blocks':

+ return ResnetGenerator(n_blocks = 6, **kwargs)

+

+ if model == 'unet_128':

+ return UnetGenerator(num_downs = 7, **kwargs)

+

+ if model == 'unet_256':

+ return UnetGenerator(num_downs = 8, **kwargs)

+

+ raise ValueError("Unknown generator: %s" % model)

+

+def select_base_discriminator(model, **kwargs):

+ default_args = dict(norm = 'instance', ndf = 64)

+ kwargs = join_args(default_args, kwargs)

+

+ if model == 'basic':

+ return NLayerDiscriminator(n_layers = 3, **kwargs)

+

+ if model == 'n_layers':

+ return NLayerDiscriminator(**kwargs)

+

+ if model == 'pixel':

+ return PixelDiscriminator(**kwargs)

+

+ raise ValueError("Unknown discriminator: %s" % model)

+

+class ResnetGenerator(nn.Module):

+ """Resnet-based generator that consists of Resnet blocks between a few downsampling/upsampling operations.

+

+ We adapt Torch code and idea from Justin Johnson's neural style transfer project(https://github.com/jcjohnson/fast-neural-style)

+ """

+

+ def __init__(self, image_shape, ngf=64, norm = 'batch', use_dropout=False, n_blocks=6, padding_type='reflect'):

+ """Construct a Resnet-based generator

+

+ Parameters:

+ image_shape (tuple) -- shape of input images (channels first); the output has the same number of channels

+ ngf (int) -- the number of filters in the last conv layer

+ norm (str) -- the name of the normalization layer: batch | instance | none

+ use_dropout (bool) -- if use dropout layers

+ n_blocks (int) -- the number of ResNet blocks

+ padding_type (str) -- the name of padding layer in conv layers: reflect | replicate | zero

+ """

+

+ assert n_blocks >= 0

+ super().__init__()

+

+ norm_layer = get_norm_layer(norm_type = norm)

+

+ if type(norm_layer) == functools.partial:

+ use_bias = norm_layer.func == nn.InstanceNorm2d

+ else:

+ use_bias = norm_layer == nn.InstanceNorm2d

+

+ model = [nn.ReflectionPad2d(3),

+ nn.Conv2d(image_shape[0], ngf, kernel_size=7, padding=0, bias=use_bias),

+ norm_layer(ngf),

+ nn.ReLU(True)]

+

+ n_downsampling = 2

+ for i in range(n_downsampling): # add downsampling layers

+ mult = 2 ** i

+ model += [nn.Conv2d(ngf * mult, ngf * mult * 2, kernel_size=3, stride=2, padding=1, bias=use_bias),

+ norm_layer(ngf * mult * 2),

+ nn.ReLU(True)]

+

+ mult = 2 ** n_downsampling

+ for i in range(n_blocks): # add ResNet blocks

+

+ model += [ResnetBlock(ngf * mult, padding_type=padding_type, norm_layer=norm_layer, use_dropout=use_dropout, use_bias=use_bias)]

+

+ for i in range(n_downsampling): # add upsampling layers

+ mult = 2 ** (n_downsampling - i)

+ model += [nn.ConvTranspose2d(ngf * mult, int(ngf * mult / 2),

+ kernel_size=3, stride=2,

+ padding=1, output_padding=1,

+ bias=use_bias),

+ norm_layer(int(ngf * mult / 2)),

+ nn.ReLU(True)]

+ model += [nn.ReflectionPad2d(3)]

+ model += [nn.Conv2d(ngf, image_shape[0], kernel_size=7, padding=0)]

+

+ if image_shape[0] == 3:

+ model.append(nn.Sigmoid())

+

+ self.model = nn.Sequential(*model)

+

+ def forward(self, input):

+ """Standard forward"""

+ return self.model(input)

+

+

+class ResnetBlock(nn.Module):

+ """Define a Resnet block"""

+

+ def __init__(self, dim, padding_type, norm_layer, use_dropout, use_bias):

+ """Initialize the Resnet block

+

+ A resnet block is a conv block with skip connections

+ We construct a conv block with build_conv_block function,

+ and implement skip connections in the forward function.

+ Original Resnet paper: https://arxiv.org/pdf/1512.03385.pdf

+ """

+ super().__init__()

+ self.conv_block = self.build_conv_block(dim, padding_type, norm_layer, use_dropout, use_bias)

+

+ # pylint: disable=no-self-use

+ def build_conv_block(self, dim, padding_type, norm_layer, use_dropout, use_bias):

+ """Construct a convolutional block.

+

+ Parameters:

+ dim (int) -- the number of channels in the conv layer.

+ padding_type (str) -- the name of padding layer: reflect | replicate | zero

+ norm_layer -- normalization layer

+ use_dropout (bool) -- if use dropout layers.

+ use_bias (bool) -- if the conv layer uses bias or not

+

+ Returns a conv block (with a conv layer, a normalization layer, and a non-linearity layer (ReLU))

+ """

+ conv_block = []

+ p = 0

+ if padding_type == 'reflect':

+ conv_block += [nn.ReflectionPad2d(1)]

+ elif padding_type == 'replicate':

+ conv_block += [nn.ReplicationPad2d(1)]

+ elif padding_type == 'zero':

+ p = 1

+ else:

+ raise NotImplementedError('padding [%s] is not implemented' % padding_type)

+

+ conv_block += [nn.Conv2d(dim, dim, kernel_size=3, padding=p, bias=use_bias), norm_layer(dim), nn.ReLU(True)]

+ if use_dropout:

+ conv_block += [nn.Dropout(0.5)]

+

+ p = 0

+ if padding_type == 'reflect':

+ conv_block += [nn.ReflectionPad2d(1)]

+ elif padding_type == 'replicate':

+ conv_block += [nn.ReplicationPad2d(1)]

+ elif padding_type == 'zero':

+ p = 1

+ else:

+ raise NotImplementedError('padding [%s] is not implemented' % padding_type)

+ conv_block += [nn.Conv2d(dim, dim, kernel_size=3, padding=p, bias=use_bias), norm_layer(dim)]

+

+ return nn.Sequential(*conv_block)

+

+ def forward(self, x):

+ """Forward function (with skip connections)"""

+ out = x + self.conv_block(x) # add skip connections

+ return out

+

+

+class UnetGenerator(nn.Module):

+ """Create a Unet-based generator"""

+

+ def __init__(self, image_shape, num_downs, ngf=64, norm = 'batch', use_dropout=False):

+ """Construct a Unet generator

+ Parameters:

+ input_nc (int) -- the number of channels in input images

+ output_nc (int) -- the number of channels in output images

+ num_downs (int) -- the number of downsamplings in UNet. For example, # if |num_downs| == 7,

+ image of size 128x128 will become of size 1x1 # at the bottleneck

+ ngf (int) -- the number of filters in the last conv layer

+ norm -- the normalization layer type, passed to get_norm_layer

+

+ We construct the U-Net from the innermost layer to the outermost layer.

+ It is a recursive process.

+ """

+ super(UnetGenerator, self).__init__()

+ norm_layer = get_norm_layer(norm_type=norm)

+

+ # construct unet structure

+ unet_block = UnetSkipConnectionBlock(ngf * 8, ngf * 8, input_nc=None, submodule=None, norm_layer=norm_layer, innermost=True) # add the innermost layer

+ for _i in range(num_downs - 5): # add intermediate layers with ngf * 8 filters

+ unet_block = UnetSkipConnectionBlock(ngf * 8, ngf * 8, input_nc=None, submodule=unet_block, norm_layer=norm_layer, use_dropout=use_dropout)

+ # gradually reduce the number of filters from ngf * 8 to ngf

+ unet_block = UnetSkipConnectionBlock(ngf * 4, ngf * 8, input_nc=None, submodule=unet_block, norm_layer=norm_layer)

+ unet_block = UnetSkipConnectionBlock(ngf * 2, ngf * 4, input_nc=None, submodule=unet_block, norm_layer=norm_layer)

+ unet_block = UnetSkipConnectionBlock(ngf, ngf * 2, input_nc=None, submodule=unet_block, norm_layer=norm_layer)

+ self.model = UnetSkipConnectionBlock(image_shape[0], ngf, input_nc=image_shape[0], submodule=unet_block, outermost=True, norm_layer=norm_layer) # add the outermost layer

+

+ def forward(self, input):

+ """Standard forward"""

+ return self.model(input)

+

+

+class UnetSkipConnectionBlock(nn.Module):

+ """Defines the Unet submodule with skip connection.

+ X -------------------identity----------------------

+ |-- downsampling -- |submodule| -- upsampling --|

+ """

+

+ def __init__(self, outer_nc, inner_nc, input_nc=None,

+ submodule=None, outermost=False, innermost=False, norm_layer=nn.BatchNorm2d, use_dropout=False):

+ """Construct a Unet submodule with skip connections.

+

+ Parameters:

+ outer_nc (int) -- the number of filters in the outer conv layer

+ inner_nc (int) -- the number of filters in the inner conv layer

+ input_nc (int) -- the number of channels in input images/features

+ submodule (UnetSkipConnectionBlock) -- previously defined submodules

+ outermost (bool) -- if this module is the outermost module

+ innermost (bool) -- if this module is the innermost module

+ norm_layer -- normalization layer

+ use_dropout (bool) -- if use dropout layers.

+ """

+ # pylint: disable=too-many-locals

+ super().__init__()

+ self.outermost = outermost

+ if type(norm_layer) == functools.partial:

+ use_bias = norm_layer.func == nn.InstanceNorm2d

+ else:

+ use_bias = norm_layer == nn.InstanceNorm2d

+ if input_nc is None:

+ input_nc = outer_nc

+ downconv = nn.Conv2d(input_nc, inner_nc, kernel_size=4,

+ stride=2, padding=1, bias=use_bias)

+ downrelu = nn.LeakyReLU(0.2, True)

+ downnorm = norm_layer(inner_nc)

+ uprelu = nn.ReLU(True)

+ upnorm = norm_layer(outer_nc)

+

+ if outermost:

+ upconv = nn.ConvTranspose2d(inner_nc * 2, outer_nc,

+ kernel_size=4, stride=2,

+ padding=1)

+ down = [downconv]

+ up = [uprelu, upconv ]

+

+ if outer_nc == 3:

+ up.append(nn.Sigmoid())

+

+ model = down + [submodule] + up

+ elif innermost:

+ upconv = nn.ConvTranspose2d(inner_nc, outer_nc,

+ kernel_size=4, stride=2,

+ padding=1, bias=use_bias)

+ down = [downrelu, downconv]

+ up = [uprelu, upconv, upnorm]

+ model = down + up

+ else:

+ upconv = nn.ConvTranspose2d(inner_nc * 2, outer_nc,

+ kernel_size=4, stride=2,

+ padding=1, bias=use_bias)

+ down = [downrelu, downconv, downnorm]

+ up = [uprelu, upconv, upnorm]

+

+ if use_dropout:

+ model = down + [submodule] + up + [nn.Dropout(0.5)]

+ else:

+ model = down + [submodule] + up

+

+ self.model = nn.Sequential(*model)

+

+ def forward(self, x):

+ if self.outermost:

+ return self.model(x)

+ else: # add skip connections

+ return torch.cat([x, self.model(x)], 1)

+

+

+class NLayerDiscriminator(nn.Module):

+ """Defines a PatchGAN discriminator"""

+

+ def __init__(

+ self, image_shape, ndf=64, n_layers=3, norm='batch', max_mult=8,

+ shrink_output = True, return_intermediate_activations = False

+ ):

+ # pylint: disable=too-many-locals

+ """Construct a PatchGAN discriminator

+

+ Parameters:

+ image_shape -- the shape of input images; image_shape[0] is the number of channels

+ ndf (int) -- the number of filters in the first conv layer

+ n_layers (int) -- the number of conv layers in the discriminator

+ norm -- the normalization layer type, passed to get_norm_layer

+ """

+ super(NLayerDiscriminator, self).__init__()

+

+ norm_layer = get_norm_layer(norm_type = norm)

+

+ if type(norm_layer) == functools.partial:

+ use_bias = norm_layer.func == nn.InstanceNorm2d

+ else:

+ use_bias = norm_layer == nn.InstanceNorm2d

+

+ kw = 4

+ padw = 1

+ sequence = [nn.Conv2d(image_shape[0], ndf, kernel_size=kw, stride=2, padding=padw), nn.LeakyReLU(0.2, True)]

+ nf_mult = 1

+ nf_mult_prev = 1

+ for n in range(1, n_layers): # gradually increase the number of filters

+ nf_mult_prev = nf_mult

+ nf_mult = min(2 ** n, max_mult)

+ sequence += [

+ nn.Conv2d(ndf * nf_mult_prev, ndf * nf_mult, kernel_size=kw, stride=2, padding=padw, bias=use_bias),

+ norm_layer(ndf * nf_mult),

+ nn.LeakyReLU(0.2, True)

+ ]

+

+ nf_mult_prev = nf_mult

+ nf_mult = min(2 ** n_layers, max_mult)

+ sequence += [

+ nn.Conv2d(ndf * nf_mult_prev, ndf * nf_mult, kernel_size=kw, stride=1, padding=padw, bias=use_bias),

+ norm_layer(ndf * nf_mult),

+ nn.LeakyReLU(0.2, True)

+ ]

+

+ self.model = nn.Sequential(*sequence)

+ self.shrink_conv = None

+

+ if shrink_output:

+ self.shrink_conv = nn.Conv2d(ndf * nf_mult, 1, kernel_size=kw, stride=1, padding=padw)

+

+ self._intermediate = return_intermediate_activations

+

+ def forward(self, input):

+ """Standard forward."""

+ z = self.model(input)

+

+ if self.shrink_conv is None:

+ return z

+

+ y = self.shrink_conv(z)

+

+ if self._intermediate:

+ return (y, z)

+

+ return y

+

+class PixelDiscriminator(nn.Module):

+ """Defines a 1x1 PatchGAN discriminator (pixelGAN)"""

+

+ def __init__(self, image_shape, ndf=64, norm='batch'):

+ """Construct a 1x1 PatchGAN discriminator

+

+ Parameters:

+ image_shape -- the shape of input images; image_shape[0] is the number of channels

+ ndf (int) -- the number of filters in the first conv layer

+ norm -- the normalization layer type, passed to get_norm_layer

+ """

+ super(PixelDiscriminator, self).__init__()

+

+ norm_layer = get_norm_layer(norm_type=norm)

+

+ if type(norm_layer) == functools.partial: # no need to use bias as BatchNorm2d has affine parameters

+ use_bias = norm_layer.func == nn.InstanceNorm2d

+ else:

+ use_bias = norm_layer == nn.InstanceNorm2d

+

+ self.net = [

+ nn.Conv2d(image_shape[0], ndf, kernel_size=1, stride=1, padding=0),

+ nn.LeakyReLU(0.2, True),

+ nn.Conv2d(ndf, ndf * 2, kernel_size=1, stride=1, padding=0, bias=use_bias),

+ norm_layer(ndf * 2),

+ nn.LeakyReLU(0.2, True),

+ nn.Conv2d(ndf * 2, 1, kernel_size=1, stride=1, padding=0, bias=use_bias)]

+

+ self.net = nn.Sequential(*self.net)

+

+ def forward(self, input):

+ """Standard forward."""

+ return self.net(input)

diff --git a/uvcgan_s/base/schedulers.py b/uvcgan_s/base/schedulers.py

new file mode 100644

index 0000000..b133123

--- /dev/null

+++ b/uvcgan_s/base/schedulers.py

@@ -0,0 +1,61 @@

+from torch.optim import lr_scheduler

+from uvcgan_s.torch.select import extract_name_kwargs

+

+def linear_scheduler(optimizer, epochs_warmup, epochs_anneal, verbose = True):

+

+ def lambda_rule(epoch, epochs_warmup, epochs_anneal):

+ if epoch < epochs_warmup:

+ return 1.0

+

+ return 1.0 - (epoch - epochs_warmup) / (epochs_anneal + 1)

+

+ lr_fn = lambda epoch : lambda_rule(epoch, epochs_warmup, epochs_anneal)

+

+ return lr_scheduler.LambdaLR(optimizer, lr_fn, verbose = verbose)

+

+SCHED_DICT = {

+ 'step' : lr_scheduler.StepLR,

+ 'plateau' : lr_scheduler.ReduceLROnPlateau,

+ 'cosine' : lr_scheduler.CosineAnnealingLR,

+ 'cosine-restarts' : lr_scheduler.CosineAnnealingWarmRestarts,

+ 'constant' : lr_scheduler.ConstantLR,

+ # LR schedulers below are kept for backward compatibility

+ 'linear' : linear_scheduler,

+ 'linear-v2' : lr_scheduler.LinearLR,

+ 'CosineAnnealingWarmRestarts' : lr_scheduler.CosineAnnealingWarmRestarts,

+}

+

+def select_single_scheduler(optimizer, scheduler):

+ if scheduler is None:

+ return None

+

+ name, kwargs = extract_name_kwargs(scheduler)

+ kwargs['verbose'] = True

+

+ if name not in SCHED_DICT:

+ raise ValueError(

+ f"Unknown scheduler: '{name}'. Supported: {list(SCHED_DICT)}"

+ )

+

+ return SCHED_DICT[name](optimizer, **kwargs)

+

+def select_scheduler(optimizer, scheduler, compose = False):

+ if scheduler is None:

+ return None

+

+ if not isinstance(scheduler, (list, tuple)):

+ scheduler = [ scheduler, ]

+

+ result = [ select_single_scheduler(optimizer, x) for x in scheduler ]

+

+ if compose:

+ if len(result) == 1:

+ return result[0]

+ else:

+ return lr_scheduler.ChainedScheduler(result)

+ else:

+ return result

+

+def get_scheduler(optimizer, scheduler):

+ return select_scheduler(optimizer, scheduler, compose = True)

+

diff --git a/uvcgan_s/base/weight_init.py b/uvcgan_s/base/weight_init.py

new file mode 100644

index 0000000..2f8ce81

--- /dev/null

+++ b/uvcgan_s/base/weight_init.py

@@ -0,0 +1,49 @@

+import logging

+from torch.nn import init

+

+from uvcgan_s.torch.select import extract_name_kwargs

+

+LOGGER = logging.getLogger('uvcgan_s.base')

+

+def winit_func(m, init_type = 'normal', init_gain = 0.2):

+ classname = m.__class__.__name__

+

+ if (

+ hasattr(m, 'weight')

+ and (classname.find('Conv') != -1 or classname.find('Linear') != -1)

+ ):

+ if init_type == 'normal':

+ init.normal_(m.weight.data, 0.0, init_gain)

+

+ elif init_type == 'xavier':

+ init.xavier_normal_(m.weight.data, gain = init_gain)

+

+ elif init_type == 'kaiming':

+ init.kaiming_normal_(m.weight.data, a = 0, mode = 'fan_in')

+

+ elif init_type == 'orthogonal':

+ init.orthogonal_(m.weight.data, gain = init_gain)

+

+ else:

+ raise NotImplementedError(

+ 'Initialization method [%s] is not implemented' % init_type

+ )

+

+ if hasattr(m, 'bias') and m.bias is not None:

+ init.constant_(m.bias.data, 0.0)

+

+ elif classname.find('BatchNorm2d') != -1:

+ init.normal_(m.weight.data, 1.0, init_gain)

+ init.constant_(m.bias.data, 0.0)

+

+def init_weights(net, weight_init):

+ if weight_init is None:

+ return

+

+ name, kwargs = extract_name_kwargs(weight_init)

+

+ LOGGER.debug('Initializing network with %s', name)

+ net.apply(

+ lambda m, name=name, kwargs=kwargs : winit_func(m, name, **kwargs)

+ )

+

diff --git a/uvcgan_s/cgan/__init__.py b/uvcgan_s/cgan/__init__.py

new file mode 100644

index 0000000..21eeffa

--- /dev/null

+++ b/uvcgan_s/cgan/__init__.py

@@ -0,0 +1,30 @@

+from .cyclegan import CycleGANModel

+from .pix2pix import Pix2PixModel

+from .autoencoder import Autoencoder

+from .simple_autoencoder import SimpleAutoencoder

+from .uvcgan2 import UVCGAN2

+from .uvcgan_s import UVCGAN_S

+

+CGAN_MODELS = {

+ 'cyclegan' : CycleGANModel,

+ 'pix2pix' : Pix2PixModel,

+ 'autoencoder' : Autoencoder,

+ 'simple-autoencoder' : SimpleAutoencoder,

+ 'uvcgan-v2' : UVCGAN2,

+ 'uvcgan-s' : UVCGAN_S,

+}

+

+def select_model(name, **kwargs):

+ if name not in CGAN_MODELS:

+ raise ValueError("Unknown model: %s" % name)

+

+ return CGAN_MODELS[name](**kwargs)

+

+def construct_model(savedir, config, is_train, device):

+ model = select_model(

+ config.model, savedir = savedir, config = config, is_train = is_train,

+ device = device, **config.model_args

+ )

+

+ return model

+

diff --git a/uvcgan_s/cgan/autoencoder.py b/uvcgan_s/cgan/autoencoder.py

new file mode 100644

index 0000000..f3bcf88

--- /dev/null

+++ b/uvcgan_s/cgan/autoencoder.py

@@ -0,0 +1,166 @@

+# pylint: disable=not-callable

+# NOTE: Mistaken lint:

+# E1102: self.encoder is not callable (not-callable)

+from uvcgan_s.torch.select import select_optimizer, select_loss

+from uvcgan_s.torch.background_penalty import BackgroundPenaltyReduction

+from uvcgan_s.torch.image_masking import select_masking

+from uvcgan_s.models.generator import construct_generator

+

+from .model_base import ModelBase

+from .named_dict import NamedDict

+from .funcs import set_two_domain_input

+

+class Autoencoder(ModelBase):

+

+ def _setup_images(self, _config):

+ images = [ 'real_a', 'reco_a', 'real_b', 'reco_b', ]

+

+ if self.masking is not None:

+ images += [ 'masked_a', 'masked_b' ]

+

+ return NamedDict(*images)

+

+ def _setup_models(self, config):

+ if self.joint:

+ image_shape = config.data.datasets[0].shape

+

+ assert image_shape == config.data.datasets[1].shape, (

+ "Joint autoencoder requires all datasets to have "

+ "the same image shape"

+ )

+

+ return NamedDict(

+ encoder = construct_generator(

+ config.generator, image_shape, image_shape, self.device

+ )

+ )

+

+ models = NamedDict('encoder_a', 'encoder_b')

+ models.encoder_a = construct_generator(

+ config.generator,

+ config.data.datasets[0].shape,

+ config.data.datasets[0].shape,

+ self.device

+ )

+ models.encoder_b = construct_generator(

+ config.generator,

+ config.data.datasets[1].shape,

+ config.data.datasets[1].shape,

+ self.device

+ )

+

+ return models

+

+ def _setup_losses(self, config):

+ self.loss_fn = select_loss(config.loss)

+

+ assert config.gradient_penalty is None, \

+ "Autoencoder model does not support gradient penalty"

+

+ return NamedDict('loss_a', 'loss_b')

+

+ def _setup_optimizers(self, config):

+ if self.joint:

+ return NamedDict(

+ encoder = select_optimizer(

+ self.models.encoder.parameters(),

+ config.generator.optimizer

+ )

+ )

+

+ optimizers = NamedDict('encoder_a', 'encoder_b')

+

+ optimizers.encoder_a = select_optimizer(

+ self.models.encoder_a.parameters(), config.generator.optimizer

+ )

+ optimizers.encoder_b = select_optimizer(

+ self.models.encoder_b.parameters(), config.generator.optimizer

+ )

+

+ return optimizers

+

+ def __init__(

+ self, savedir, config, is_train, device,

+ joint = False, background_penalty = None, masking = None

+ ):

+ # pylint: disable=too-many-arguments

+ self.joint = joint

+ self.masking = select_masking(masking)

+

+ assert len(config.data.datasets) == 2, \

+ "Autoencoder expects a pair of datasets"

+

+ super().__init__(savedir, config, is_train, device)

+

+ if background_penalty is None:

+ self.background_penalty = None

+ else:

+ self.background_penalty = BackgroundPenaltyReduction(

+ **background_penalty

+ )

+

+ assert config.discriminator is None, \

+ "Autoencoder model does not use discriminator"

+

+ def _handle_epoch_end(self):

+ if self.background_penalty is not None:

+ self.background_penalty.end_epoch(self.epoch)

+

+ def _set_input(self, inputs, domain):

+ set_two_domain_input(self.images, inputs, domain, self.device)

+

+ def forward(self):

+ input_a = self.images.real_a

+ input_b = self.images.real_b

+

+ if self.masking is not None:

+ if input_a is not None:

+ input_a = self.masking(input_a)

+

+ if input_b is not None:

+ input_b = self.masking(input_b)

+

+ self.images.masked_a = input_a

+ self.images.masked_b = input_b

+

+ if input_a is not None:

+ if self.joint:

+ self.images.reco_a = self.models.encoder (input_a)

+ else:

+ self.images.reco_a = self.models.encoder_a(input_a)

+

+ if input_b is not None:

+ if self.joint:

+ self.images.reco_b = self.models.encoder (input_b)

+ else:

+ self.images.reco_b = self.models.encoder_b(input_b)

+

+ def backward_generator_base(self, real, reco):

+ if self.background_penalty is not None:

+ reco = self.background_penalty(reco, real)

+

+ loss = self.loss_fn(reco, real)

+ loss.backward()

+

+ return loss

+

+ def backward_generators(self):

+ self.losses.loss_b = self.backward_generator_base(

+ self.images.real_b, self.images.reco_b

+ )

+

+ self.losses.loss_a = self.backward_generator_base(

+ self.images.real_a, self.images.reco_a

+ )

+

+ def optimization_step(self):

+ self.forward()

+

+ for optimizer in self.optimizers.values():

+ optimizer.zero_grad()

+

+ self.backward_generators()

+

+ for optimizer in self.optimizers.values():

+ optimizer.step()

+

diff --git a/uvcgan_s/cgan/checkpoint.py b/uvcgan_s/cgan/checkpoint.py

new file mode 100644

index 0000000..70c6a77

--- /dev/null

+++ b/uvcgan_s/cgan/checkpoint.py

@@ -0,0 +1,73 @@

+import os

+import re

+import torch

+

+CHECKPOINTS_DIR = 'checkpoints'

+

+def find_last_checkpoint_epoch(savedir, prefix = None):

+ root = os.path.join(savedir, CHECKPOINTS_DIR)

+ if not os.path.exists(root):

+ return -1

+

+ if prefix is None:

+ r = re.compile(r'(\d+)_.*')

+ else:

+ r = re.compile(r'(\d+)_' + re.escape(prefix) + '_.*')

+

+ last_epoch = -1

+

+ for fname in os.listdir(root):

+ m = r.match(fname)

+ if m:

+ epoch = int(m.groups()[0])

+ last_epoch = max(last_epoch, epoch)

+

+ return last_epoch

+

+def get_save_path(savedir, name, epoch, mkdir = False):

+ if epoch is None:

+ fname = '%s.pth' % (name)

+ root = savedir

+ else:

+ fname = '%04d_%s.pth' % (epoch, name)

+ root = os.path.join(savedir, CHECKPOINTS_DIR)

+

+ result = os.path.join(root, fname)

+

+ if mkdir:

+ os.makedirs(root, exist_ok = True)

+

+ return result

+

+def save(named_dict, savedir, prefix, epoch = None):

+ for (k,v) in named_dict.items():

+ if v is None:

+ continue

+

+ save_path = get_save_path(

+ savedir, prefix + '_' + k, epoch, mkdir = True

+ )

+

+ if isinstance(v, torch.nn.DataParallel):

+ torch.save(v.module.state_dict(), save_path)

+ else:

+ torch.save(v.state_dict(), save_path)

+

+def load(named_dict, savedir, prefix, epoch, device):

+ for (k,v) in named_dict.items():

+ if v is None:

+ continue

+

+ load_path = get_save_path(

+ savedir, prefix + '_' + k, epoch, mkdir = False

+ )

+

+ if isinstance(v, torch.nn.DataParallel):

+ v.module.load_state_dict(

+ torch.load(load_path, map_location = device)

+ )

+ else:

+ v.load_state_dict(

+ torch.load(load_path, map_location = device)

+ )

+

diff --git a/uvcgan_s/cgan/cyclegan.py b/uvcgan_s/cgan/cyclegan.py

new file mode 100644

index 0000000..295674f

--- /dev/null

+++ b/uvcgan_s/cgan/cyclegan.py

@@ -0,0 +1,226 @@

+# pylint: disable=not-callable

+# NOTE: Mistaken lint:

+# E1102: self.criterion_gan is not callable (not-callable)

+

+import itertools

+import torch

+

+from uvcgan_s.torch.select import select_optimizer

+from uvcgan_s.base.image_pool import ImagePool

+from uvcgan_s.base.losses import GANLoss, cal_gradient_penalty

+from uvcgan_s.models.discriminator import construct_discriminator

+from uvcgan_s.models.generator import construct_generator

+

+from .model_base import ModelBase

+from .named_dict import NamedDict

+from .funcs import set_two_domain_input

+

+class CycleGANModel(ModelBase):

+ # pylint: disable=too-many-instance-attributes

+

+ def _setup_images(self, _config):

+ images = [ 'real_a', 'fake_b', 'reco_a', 'real_b', 'fake_a', 'reco_b' ]

+

+ if self.is_train and self.lambda_idt > 0:

+ images += [ 'idt_a', 'idt_b' ]

+

+ return NamedDict(*images)

+

+ def _setup_models(self, config):

+ models = {}

+

+ models['gen_ab'] = construct_generator(

+ config.generator,

+ config.data.datasets[0].shape,

+ config.data.datasets[1].shape,

+ self.device

+ )

+ models['gen_ba'] = construct_generator(

+ config.generator,

+ config.data.datasets[1].shape,

+ config.data.datasets[0].shape,

+ self.device

+ )

+

+ if self.is_train:

+ models['disc_a'] = construct_discriminator(

+ config.discriminator,

+ config.data.datasets[0].shape,

+ self.device

+ )

+ models['disc_b'] = construct_discriminator(

+ config.discriminator,

+ config.data.datasets[1].shape,

+ self.device

+ )

+

+ return NamedDict(**models)

+

+ def _setup_losses(self, config):

+ losses = [

+ 'gen_ab', 'gen_ba', 'cycle_a', 'cycle_b', 'disc_a', 'disc_b'

+ ]

+

+ if self.is_train and self.lambda_idt > 0:

+ losses += [ 'idt_a', 'idt_b' ]

+

+ return NamedDict(*losses)

+

+ def _setup_optimizers(self, config):

+ optimizers = NamedDict('gen', 'disc')

+

+ optimizers.gen = select_optimizer(

+ itertools.chain(

+ self.models.gen_ab.parameters(),

+ self.models.gen_ba.parameters()

+ ),

+ config.generator.optimizer

+ )

+

+ optimizers.disc = select_optimizer(

+ itertools.chain(

+ self.models.disc_a.parameters(),

+ self.models.disc_b.parameters()

+ ),

+ config.discriminator.optimizer

+ )

+

+ return optimizers

+

+ def __init__(

+ self, savedir, config, is_train, device, pool_size = 50,

+ lambda_a = 10.0, lambda_b = 10.0, lambda_idt = 0.5

+ ):

+ # pylint: disable=too-many-arguments

+ self.lambda_a = lambda_a

+ self.lambda_b = lambda_b

+ self.lambda_idt = lambda_idt

+

+ assert len(config.data.datasets) == 2, \

+ "CycleGAN expects a pair of datasets"

+

+ super().__init__(savedir, config, is_train, device)

+

+ self.criterion_gan = GANLoss(config.loss).to(self.device)

+ self.gradient_penalty = config.gradient_penalty

+ self.criterion_cycle = torch.nn.L1Loss()

+ self.criterion_idt = torch.nn.L1Loss()

+

+ if self.is_train:

+ self.pred_a_pool = ImagePool(pool_size)

+ self.pred_b_pool = ImagePool(pool_size)

+

+ def _set_input(self, inputs, domain):

+ set_two_domain_input(self.images, inputs, domain, self.device)

+

+ def forward(self):

+ def simple_fwd(batch, gen_fwd, gen_bkw):

+ if batch is None:

+ return (None, None)

+

+ fake = gen_fwd(batch)

+ reco = gen_bkw(fake)

+

+ return (fake, reco)

+

+ self.images.fake_b, self.images.reco_a = simple_fwd(

+ self.images.real_a, self.models.gen_ab, self.models.gen_ba

+ )

+

+ self.images.fake_a, self.images.reco_b = simple_fwd(

+ self.images.real_b, self.models.gen_ba, self.models.gen_ab

+ )

+

+ def backward_discriminator_base(self, model, real, fake):

+ pred_real = model(real)

+ loss_real = self.criterion_gan(pred_real, True)

+

+ #

+ # NOTE:

+ # This is a workaround for a pytorch 1.9.0 bug that manifests when

+ # cudnn is enabled. Once the bug is fixed, remove the no_grad block and

+ # replace `model(fake)` with `model(fake.detach())`.

+ #

+ # bug: https://github.com/pytorch/pytorch/issues/48439

+ #

+ with torch.no_grad():

+ fake = fake.contiguous()

+

+ pred_fake = model(fake)

+ loss_fake = self.criterion_gan(pred_fake, False)

+

+ loss = (loss_real + loss_fake) * 0.5

+

+ if self.gradient_penalty is not None:

+ loss += cal_gradient_penalty(

+ model, real, fake, real.device, **self.gradient_penalty

+ )[0]

+

+ loss.backward()

+ return loss

+

+ def backward_discriminators(self):

+ fake_a = self.pred_a_pool.query(self.images.fake_a)

+ fake_b = self.pred_b_pool.query(self.images.fake_b)

+

+ self.losses.disc_b = self.backward_discriminator_base(

+ self.models.disc_b, self.images.real_b, fake_b

+ )

+

+ self.losses.disc_a = self.backward_discriminator_base(

+ self.models.disc_a, self.images.real_a, fake_a

+ )

+

+ def backward_generators(self):

+ lambda_idt = self.lambda_idt

+ lambda_a = self.lambda_a

+ lambda_b = self.lambda_b

+

+ self.losses.gen_ab = self.criterion_gan(

+ self.models.disc_b(self.images.fake_b), True

+ )

+ self.losses.gen_ba = self.criterion_gan(

+ self.models.disc_a(self.images.fake_a), True

+ )

+ self.losses.cycle_a = lambda_a * self.criterion_cycle(

+ self.images.reco_a, self.images.real_a

+ )

+ self.losses.cycle_b = lambda_b * self.criterion_cycle(

+ self.images.reco_b, self.images.real_b

+ )

+

+ loss = (

+ self.losses.gen_ab + self.losses.gen_ba

+ + self.losses.cycle_a + self.losses.cycle_b

+ )

+

+ if lambda_idt > 0:

+ self.images.idt_b = self.models.gen_ab(self.images.real_b)

+ self.losses.idt_b = lambda_b * lambda_idt * self.criterion_idt(

+ self.images.idt_b, self.images.real_b

+ )

+

+ self.images.idt_a = self.models.gen_ba(self.images.real_a)

+ self.losses.idt_a = lambda_a * lambda_idt * self.criterion_idt(

+ self.images.idt_a, self.images.real_a

+ )

+

+ loss += (self.losses.idt_a + self.losses.idt_b)

+

+ loss.backward()

+

+ def optimization_step(self):

+ self.forward()

+

+ # Generators

+ self.set_requires_grad([self.models.disc_a, self.models.disc_b], False)

+ self.optimizers.gen.zero_grad()

+ self.backward_generators()

+ self.optimizers.gen.step()

+

+ # Discriminators

+ self.set_requires_grad([self.models.disc_a, self.models.disc_b], True)

+ self.optimizers.disc.zero_grad()

+ self.backward_discriminators()

+ self.optimizers.disc.step()

+

diff --git a/uvcgan_s/cgan/funcs.py b/uvcgan_s/cgan/funcs.py

new file mode 100644

index 0000000..e042d04

--- /dev/null

+++ b/uvcgan_s/cgan/funcs.py

@@ -0,0 +1,55 @@

+import torch

+

+def set_two_domain_input(images, inputs, domain, device):

+ if (domain is None) or (domain == 'both'):

+ images.real_a = inputs[0].to(device, non_blocking = True)

+ images.real_b = inputs[1].to(device, non_blocking = True)

+

+ elif domain in [ 'a', 0 ]:

+ images.real_a = inputs.to(device, non_blocking = True)

+

+ elif domain in [ 'b', 1 ]:

+ images.real_b = inputs.to(device, non_blocking = True)

+

+ else:

+ raise ValueError(

+ f"Unknown domain: '{domain}'."

+ " Supported domains: 'a' (alias 0), 'b' (alias 1), or 'both'"

+ )

+

+def set_asym_two_domain_input(images, inputs, domain, device):

+ if (domain is None) or (domain == 'all'):

+ images.real_a0 = inputs[0].to(device, non_blocking = True)

+ images.real_a1 = inputs[1].to(device, non_blocking = True)

+ images.real_b = inputs[2].to(device, non_blocking = True)

+

+ elif domain in [ 'a0', 0 ]:

+ images.real_a0 = inputs.to(device, non_blocking = True)

+

+ elif domain in [ 'a1', 1 ]:

+ images.real_a1 = inputs.to(device, non_blocking = True)

+

+ elif domain in [ 'b', 2 ]:

+ images.real_b = inputs.to(device, non_blocking = True)

+

+ else:

+ raise ValueError(

+ f"Unknown domain: '{domain}'."

+ " Supported domains: 'a0' (alias 0), 'a1' (alias 1), "

+ "'b' (alias 2), or 'all'"

+ )

+

+def trace_models(models, input_shapes, device):

+ result = {}

+

+ for (name, model) in models.items():

+ if name not in input_shapes:

+ continue

+

+ shape = input_shapes[name]

+ data = torch.randn((1, *shape)).to(device)

+

+ result[name] = torch.jit.trace(model, data)

+

+ return result

+

diff --git a/uvcgan_s/cgan/model_base.py b/uvcgan_s/cgan/model_base.py

new file mode 100644

index 0000000..bbae864

--- /dev/null

+++ b/uvcgan_s/cgan/model_base.py

@@ -0,0 +1,176 @@

+import logging

+import torch

+from torch.optim.lr_scheduler import ReduceLROnPlateau

+

+from uvcgan_s.base.schedulers import get_scheduler

+from .named_dict import NamedDict

+from .checkpoint import find_last_checkpoint_epoch, save, load

+

+PREFIX_MODEL = 'net'

+PREFIX_OPT = 'opt'

+PREFIX_SCHED = 'sched'

+

+LOGGER = logging.getLogger('uvcgan_s.cgan')

+

+class ModelBase:

+ # pylint: disable=too-many-instance-attributes

+

+ def __init__(self, savedir, config, is_train, device):

+ self.is_train = is_train

+ self.device = device

+ self.savedir = savedir

+ self._config = config

+

+ self.models = self._setup_models(config)

+ self.images = self._setup_images(config)

+ self.losses = self._setup_losses(config)

+ self.metric = 0

+ self.epoch = 0

+

+ self.optimizers = NamedDict()

+ self.schedulers = NamedDict()

+

+ if is_train:

+ self.optimizers = self._setup_optimizers(config)

+ self.schedulers = self._setup_schedulers(config)

+

+ def set_input(self, inputs, domain = None):

+ for key in self.images:

+ self.images[key] = None

+

+ self._set_input(inputs, domain)

+

+ def forward(self):

+ raise NotImplementedError

+

+ def optimization_step(self):

+ raise NotImplementedError

+

+ def _set_input(self, inputs, domain):

+ raise NotImplementedError

+

+ def _setup_images(self, config):

+ raise NotImplementedError

+

+ def _setup_models(self, config):

+ raise NotImplementedError

+

+ def _setup_losses(self, config):

+ raise NotImplementedError

+

+ def _setup_optimizers(self, config):

+ raise NotImplementedError

+

+ def _setup_schedulers(self, config):

+ schedulers = { }

+

+ for (name, opt) in self.optimizers.items():

+ schedulers[name] = get_scheduler(opt, config.scheduler)

+

+ return NamedDict(**schedulers)

+

+ def _save_model_state(self, epoch):

+ pass

+

+ def _load_model_state(self, epoch):

+ pass

+

+ def _handle_epoch_end(self):

+ pass

+

+ def eval(self):

+ self.is_train = False

+

+ for model in self.models.values():

+ model.eval()

+

+ def train(self):

+ self.is_train = True

+

+ for model in self.models.values():

+ model.train()

+

+ def forward_nograd(self):

+ with torch.no_grad():

+ self.forward()

+

+ def find_last_checkpoint_epoch(self):

+ return find_last_checkpoint_epoch(self.savedir, PREFIX_MODEL)

+

+ def load(self, epoch):

+ if (epoch is not None) and (epoch <= 0):

+ return

+

+ LOGGER.debug('Loading model from epoch %s', epoch)

+

+ load(self.models, self.savedir, PREFIX_MODEL, epoch, self.device)

+ load(self.optimizers, self.savedir, PREFIX_OPT, epoch, self.device)

+ load(self.schedulers, self.savedir, PREFIX_SCHED, epoch, self.device)

+

+ self.epoch = epoch

+ self._load_model_state(epoch)

+ self._handle_epoch_end()

+

+ def save(self, epoch = None):

+ LOGGER.debug('Saving model at epoch %s', epoch)

+

+ save(self.models, self.savedir, PREFIX_MODEL, epoch)

+ save(self.optimizers, self.savedir, PREFIX_OPT, epoch)

+ save(self.schedulers, self.savedir, PREFIX_SCHED, epoch)

+

+ self._save_model_state(epoch)

+

+ def end_epoch(self, epoch = None):

+ for scheduler in self.schedulers.values():

+ if scheduler is None:

+ continue

+

+ if isinstance(scheduler, ReduceLROnPlateau):

+ scheduler.step(self.metric)

+ else:

+ scheduler.step()

+

+ self._handle_epoch_end()

+

+ if epoch is None:

+ self.epoch = self.epoch + 1

+ else:

+ self.epoch = epoch

+

+ def pprint(self, verbose):

+ for name,model in self.models.items():

+ num_params = 0

+

+ for param in model.parameters():

+ num_params += param.numel()

+

+ if verbose:

+ print(model)

+

+ print(

+ '[Network %s] Total number of parameters : %.3f M' % (

+ name, num_params / 1e6

+ )

+ )

+

+ def set_requires_grad(self, models, requires_grad = False):

+ # pylint: disable=no-self-use

+ if not isinstance(models, list):

+ models = [models, ]

+

+ for model in models:

+ for param in model.parameters():

+ param.requires_grad = requires_grad

+

+ def get_current_losses(self):

+ result = {}

+

+ for (k,v) in self.losses.items():

+ result[k] = float(v)

+

+ return result

+

+ def get_images(self):

+ return self.images

+

+

diff --git a/uvcgan_s/cgan/named_dict.py b/uvcgan_s/cgan/named_dict.py

new file mode 100644

index 0000000..02c0180

--- /dev/null

+++ b/uvcgan_s/cgan/named_dict.py

@@ -0,0 +1,48 @@

+from collections.abc import Mapping

+

+class NamedDict(Mapping):

+ # pylint: disable=too-many-instance-attributes

+

+ _fields = None

+

+ def __init__(self, *args, **kwargs):

+ self._fields = {}

+

+ for arg in args:

+ self._fields[arg] = None

+

+ self._fields.update(**kwargs)

+

+ def __contains__(self, key):

+ return (key in self._fields)

+

+ def __getitem__(self, key):

+ return self._fields[key]

+

+ def __setitem__(self, key, value):

+ self._fields[key] = value

+

+ def __getattr__(self, key):

+ return self._fields[key]

+

+ def __setattr__(self, key, value):

+ if (self._fields is not None) and (key in self._fields):

+ self._fields[key] = value

+ else:

+ super().__setattr__(key, value)

+

+ def __iter__(self):

+ return iter(self._fields)

+

+ def __len__(self):

+ return len(self._fields)

+

+ def items(self):

+ return self._fields.items()

+

+ def keys(self):

+ return self._fields.keys()

+

+ def values(self):

+ return self._fields.values()

+

diff --git a/uvcgan_s/cgan/pix2pix.py b/uvcgan_s/cgan/pix2pix.py

new file mode 100644

index 0000000..ac97b41

--- /dev/null

+++ b/uvcgan_s/cgan/pix2pix.py

@@ -0,0 +1,166 @@

+# pylint: disable=not-callable

+# NOTE: Mistaken lint:

+# E1102: self.criterion_gan is not callable (not-callable)

+

+import torch

+

+from uvcgan_s.torch.select import select_optimizer

+from uvcgan_s.base.losses import GANLoss, cal_gradient_penalty

+from uvcgan_s.models.discriminator import construct_discriminator

+from uvcgan_s.models.generator import construct_generator

+

+from .model_base import ModelBase

+from .named_dict import NamedDict

+from .funcs import set_two_domain_input

+

+class Pix2PixModel(ModelBase):
+
+    def _setup_images(self, _config):
+        return NamedDict('real_a', 'fake_b', 'real_b', 'fake_a')
+
+    def _setup_models(self, config):
+        models = { }
+
+        image_shape_a = config.data.datasets[0].shape
+        image_shape_b = config.data.datasets[1].shape
+
+        assert image_shape_a[1:] == image_shape_b[1:], \
+            "Pix2Pix needs images in both domains to have the same size"
+
+        models['gen_ab'] = construct_generator(
+            config.generator, image_shape_a, image_shape_b, self.device
+        )
+        models['gen_ba'] = construct_generator(
+            config.generator, image_shape_b, image_shape_a, self.device
+        )
+
+        if self.is_train:
+            extended_image_shape = (
+                image_shape_a[0] + image_shape_b[0], *image_shape_a[1:]
+            )
+
+            for name in [ 'disc_a', 'disc_b' ]:
+                models[name] = construct_discriminator(
+                    config.discriminator, extended_image_shape, self.device
+                )
+
+        return NamedDict(**models)

+

+    def _setup_losses(self, config):
+        return NamedDict(
+            'gen_ab', 'gen_ba', 'l1_ab', 'l1_ba', 'disc_a', 'disc_b'
+        )
+
+    def _setup_optimizers(self, config):
+        optimizers = NamedDict('gen_ab', 'gen_ba', 'disc_a', 'disc_b')
+
+        optimizers.gen_ab = select_optimizer(
+            self.models.gen_ab.parameters(), config.generator.optimizer
+        )
+        optimizers.gen_ba = select_optimizer(
+            self.models.gen_ba.parameters(), config.generator.optimizer
+        )
+
+        optimizers.disc_a = select_optimizer(
+            self.models.disc_a.parameters(), config.discriminator.optimizer
+        )
+        optimizers.disc_b = select_optimizer(
+            self.models.disc_b.parameters(), config.discriminator.optimizer
+        )
+
+        return optimizers

+

+    def __init__(self, savedir, config, is_train, device):
+        super().__init__(savedir, config, is_train, device)
+
+        assert len(config.data.datasets) == 2, \
+            "Pix2Pix expects a pair of datasets"
+
+        self.criterion_gan = GANLoss(config.loss).to(self.device)
+        self.criterion_l1 = torch.nn.L1Loss()
+        self.gradient_penalty = config.gradient_penalty
+
+    def _set_input(self, inputs, domain):
+        set_two_domain_input(self.images, inputs, domain, self.device)
+
+    def forward(self):
+        if self.images.real_a is not None:
+            self.images.fake_b = self.models.gen_ab(self.images.real_a)
+
+        if self.images.real_b is not None:
+            self.images.fake_a = self.models.gen_ba(self.images.real_b)

+

+    def backward_discriminator_base(self, model, real, fake, preimage):
+        cond_real = torch.cat([real, preimage], dim = 1)
+        cond_fake = torch.cat([fake, preimage], dim = 1).detach()
+
+        pred_real = model(cond_real)
+        loss_real = self.criterion_gan(pred_real, True)
+
+        pred_fake = model(cond_fake)
+        loss_fake = self.criterion_gan(pred_fake, False)
+
+        loss = (loss_real + loss_fake) * 0.5
+
+        if self.gradient_penalty is not None:
+            loss += cal_gradient_penalty(
+                model, cond_real, cond_fake, real.device,
+                **self.gradient_penalty
+            )[0]
+
+        loss.backward()
+        return loss

+

+    def backward_discriminators(self):
+        self.losses.disc_b = self.backward_discriminator_base(
+            self.models.disc_b,
+            self.images.real_b, self.images.fake_b, self.images.real_a
+        )

+