diff --git a/.gitmodules b/.gitmodules

new file mode 100644

index 0000000..e69de29

diff --git a/.idea/.gitignore b/.idea/.gitignore

new file mode 100644

index 0000000..73f69e0

--- /dev/null

+++ b/.idea/.gitignore

@@ -0,0 +1,8 @@

+# Default ignored files

+/shelf/

+/workspace.xml

+# Datasource local storage ignored files

+/dataSources/

+/dataSources.local.xml

+# Editor-based HTTP Client requests

+/httpRequests/

diff --git a/.idea/deployment.xml b/.idea/deployment.xml

new file mode 100644

index 0000000..6a6d49b

--- /dev/null

+++ b/.idea/deployment.xml

@@ -0,0 +1,22 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

\ No newline at end of file

diff --git a/.idea/hpt.iml b/.idea/hpt.iml

new file mode 100644

index 0000000..3e2e3fe

--- /dev/null

+++ b/.idea/hpt.iml

@@ -0,0 +1,19 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

\ No newline at end of file

diff --git a/.idea/inspectionProfiles/profiles_settings.xml b/.idea/inspectionProfiles/profiles_settings.xml

new file mode 100644

index 0000000..105ce2d

--- /dev/null

+++ b/.idea/inspectionProfiles/profiles_settings.xml

@@ -0,0 +1,6 @@

+

+

+

+

\ No newline at end of file

diff --git a/.idea/misc.xml b/.idea/misc.xml

new file mode 100644

index 0000000..8598883

--- /dev/null

+++ b/.idea/misc.xml

@@ -0,0 +1,7 @@

+

+

+

+

+

\ No newline at end of file

diff --git a/.idea/modules.xml b/.idea/modules.xml

new file mode 100644

index 0000000..b0929b6

--- /dev/null

+++ b/.idea/modules.xml

@@ -0,0 +1,8 @@

+

+

+

+

+

+

+

\ No newline at end of file

diff --git a/.idea/other.xml b/.idea/other.xml

new file mode 100644

index 0000000..a708ec7

--- /dev/null

+++ b/.idea/other.xml

@@ -0,0 +1,6 @@

+

+

+

+

+

\ No newline at end of file

diff --git a/.idea/runConfigurations/single_train.xml b/.idea/runConfigurations/single_train.xml

new file mode 100644

index 0000000..c310ab9

--- /dev/null

+++ b/.idea/runConfigurations/single_train.xml

@@ -0,0 +1,30 @@

+

+

+

+

+

+

\ No newline at end of file

diff --git a/.idea/vcs.xml b/.idea/vcs.xml

new file mode 100644

index 0000000..b120a90

--- /dev/null

+++ b/.idea/vcs.xml

@@ -0,0 +1,8 @@

+

+

+

+

+

\ No newline at end of file

diff --git a/README.md b/README.md

index 492d5dd..bf59ef4 100644

--- a/README.md

+++ b/README.md

@@ -2,14 +2,14 @@

This is a research repository for the submission "Self-Supervised Pretraining Improves Self-Supervised Pretraining"

-For initial setup, refer to [setup instructions](references/setup.md).

+For initial setup, refer to [setup instructions](references/setup_pretraining.md).

## Setup Weight & Biases Tracking

```bash

export WANDB_API_KEY=

export WANDB_ENTITY=cal-capstone

-export WANDB_PROJECT=hpt

+export WANDB_PROJECT=scene_classification

#export WANDB_MODE=dryrun

```

@@ -58,54 +58,102 @@ bands_std = {'s1_std': [4.525339, 4.3586307],

1082.4341, 1057.7628, 1136.1942, 1132.7898, 991.48016]}

```

-**NEXT**, copy the pretraining template

+## Pre-training with SEN12MS Dataset

+[OpenSelfSup](https://github.com/Berkeley-Data/OpenSelfSup)

+- See `src/utils/pretrain-runner.sh` for an end-to-end run (requires creating the config files first).

+

+Check installation by pretraining using mocov2, extracting the model weights, evaluating the representations, and then viewing the results on tensorboard or [wandb](https://wandb.ai/cal-capstone/hpt):

+

+Set up experiment tracking and model versioning:

+```bash

+export WANDB_API_KEY=

+export WANDB_ENTITY=cal-capstone

+export WANDB_PROJECT=hpt4

+```

+

+#### Run pre-training

```bash

-cd src/utils

-cp templates/pretraining-config-template.sh pretrain-configs/sen12ms-small.sh

-# edit pretrain-configs/sen12ms-small.sh

+cd OpenSelfSup

+

+# set which GPUs to use

+# CUDA_VISIBLE_DEVICES=1

+# CUDA_VISIBLE_DEVICES=0,1,2,3

+

+# (sanity check) Single GPU training on small dataset

+./tools/single_train.sh configs/selfsup/moco/r50_v2_sen12ms_in_basetrain_aug_20ep.py --debug

+

+# (sanity check) Single GPU training on small dataset on sen12ms fusion

+./tools/single_train.sh configs/selfsup/moco/r50_v2_sen12ms_fusion_in_smoke_aug.py --debug

+

+# (sanity check) 4 GPUs training on small dataset

+./tools/dist_train.sh configs/selfsup/moco/r50_v2_sen12ms_in_basetrain_aug_20ep.py 4

-# once edited, generate the project

-./gen-pretrain-project.sh pretrain-configs/my-dataset-config.sh

+# (sanity check) 4 GPUs training on small fusion dataset

+./tools/dist_train.sh configs/selfsup/moco/r50_v2_sen12ms_fusion_in_smoke_aug.py 4

+

+# distributed full training

+./tools/dist_train.sh configs/selfsup/moco/r50_v2_sen12ms_in_fulltrain_20ep.py 4

```

-What just happened? We generated a bunch of pretraining configs in the following location (take a look at all of these files to get a feel for how this works):

+#### (OPTIONAL) download pre-trained models

+

+Some of the key pre-trained models are on S3 (s3://sen12ms/pretrained):

+- [200 epochs w/o augmentation: vivid-resonance-73](https://wandb.ai/cjrd/BDOpenSelfSup-tools/runs/3qjvxo2p/overview?workspace=user-cjrd)

+- [20 epochs w/o augmentation: silvery-oath7-2rr3864e](https://wandb.ai/cal-capstone/hpt2/runs/2rr3864e?workspace=user-taeil)

+- [sen12ms-baseline: soft-snowflake-3.pth](https://wandb.ai/cal-capstone/SEN12MS/runs/3gjhe4ff/overview?workspace=user-taeil)

+

```

-OpenSelfSup/configs/hpt-pretrain/${shortname}

+aws configure

+aws s3 sync s3://sen12ms/pretrained . --dryrun

+aws s3 sync s3://sen12ms/pretrained_sup . --dryrun

```

-**NEXT**, you're ready to kick off a trial run to make sure the pretraining is working as expected =)

+#### Extract pre-trained model

+Any other model can be restored by its run ID if it is stored with W&B. Go to the Files section of the run to find the `*.pth` files.

```bash

-# the `-t` flag means `trial`: it'll only run a 50 iter pretraining

- ./utils/pretrain-runner.sh -t -d OpenSelfSup/configs/hpt-pretrain/${shortname}

+BACKBONE=work_dirs/selfsup/moco/r50_v2_sen12ms_in_basetrain_20ep/epoch_20_moco_in_baseline.pth

+

+# method 1: from the working dir (same system used for pre-training)

+# CHECKPOINT=work_dirs/selfsup/moco/r50_v2_resisc_in_basetrain_20ep/epoch_20.pth

+

+# method 2: from W&B, {projectid}/{W&B run id} (any system)

+CHECKPOINT=hpt2/3l4yg63k

+

+# Extract the backbone

+python tools/extract_backbone_weights.py ${BACKBONE} ${CHECKPOINT}

+

```

-**NEXT**, if this works, kick off the full training. NOTE: you can kick this off multiple times as long as the config directories share the same filesystem

+

+## Evaluating Pretrained Representations

+

+Using OpenSelfSup

```bash

-# simply removing the `-t` flag from above

- ./utils/pretrain-runner.sh -d OpenSelfSup/configs/hpt-pretrain/${shortname}

+python tools/train.py $CFG --pretrained $PRETRAIN

+

+# RESISC finetune example

+tools/train.py --local_rank=0 configs/benchmarks/linear_classification/resisc45/r50_last.py --pretrained work_dirs/selfsup/moco/r50_v2_resisc_in_basetrain_20ep/epoch_20_moco_in_basetrain.pth --work_dir work_dirs/benchmarks/linear_classification/resisc45/moco-selfsup/r50_v2_resisc_in_basetrain_20ep-r50_last --seed 0 --launcher=pytorch

+

+

+

```

-**NEXT**, if you want to perform BYOL pretraining, add `-b` flag.

+

+Using Sen12ms

```bash

-# simply add the `-b` flag to above.

- ./utils/pretrain-runner.sh -d OpenSelfSup/configs/hpt-pretrain/${shortname} -b

```

-Congratulations: you've launch a full hierarchical pretraining experiment.

-**FAQs/PROBLEMS?**

-* How does `pretrain-runner.sh` keep track of what's been pretrained?

- * In each config directory, it creates a `.pretrain-status` folder to keep track of what's processing/finished. See them with e.g. `find OpenSelfSup/configs/hpt-pretrain -name '.pretrain-status'`

-* How to redo a pretraining, e.g. because it crashed or something changed? Remove the

- * Remove the associate `.proc` or `.done` file. Find these e.g.

- ```bash

- find OpenSelfSup/configs/hpt-pretrain -name '.proc'

- find OpenSelfSup/configs/hpt-pretrain -name '.done'

- ```

-## Evaluating Pretrained Representations

+

+#### Previous

+```

+# Evaluate the representations (NOT SURE)

+./benchmarks/dist_train_linear.sh configs/benchmarks/linear_classification/resisc45/r50_last.py ${BACKBONE}

+```

+

This has been simplified to simply:

```bash

./utils/pretrain-evaluator.sh -b OpenSelfSup/work_dirs/hpt-pretrain/${shortname}/ -d OpenSelfSup/configs/hpt-pretrain/${shortname}

@@ -301,3 +349,22 @@ python tools/train.py configs/hpt-pretrain/resisc/moco_v2_800ep_basetrain/500-it

```

+## (Other) BigEarthNet bands mean and standard deviation

+

+For S-1 data, the band names are {'VV', 'VH'}.

+

+For S-2 data, the band names are {'B01', 'B02', 'B03', 'B04', 'B05', 'B06', 'B07', 'B08', 'B09', 'B11', 'B12', 'B8A'}.

+

+```

+cd hpt/src/data/

+```

+Calculate the band statistics by running:

+```bash

+bash dataset_calc_BigEarthNet.sh

+```

+

+

+

+

+

+

diff --git a/data/small_sample.pkl b/data/small_sample.pkl

new file mode 100644

index 0000000..aebd3e1

Binary files /dev/null and b/data/small_sample.pkl differ

diff --git a/evaluation_sen12ms_assessment.md b/evaluation_sen12ms_assessment.md

new file mode 100644

index 0000000..5eb9466

--- /dev/null

+++ b/evaluation_sen12ms_assessment.md

@@ -0,0 +1,98 @@

+# A. Question & Response

+### 1. The evaluation options:

+> a. Scene Classification -- land cover label (currently on wandb).

+

+> b. Semantic segmentation -- assigning a class label to every pixel of the input image (not implemented).

+

+### 2. Metrics the authors used:

+> a. Scene Classification -- Average Accuracy (single-label); Overall Accuracy (multi-label); F1, precision, and recall. Refer to the [repo](https://github.com/schmitt-muc/SEN12MS).

+

+> b. Semantic segmentation -- class-wise and average accuracy -- refer to the [repo](https://github.com/lukasliebel/dfc2020_baseline).

+

+**In the meeting with Colorado we asked whether these metrics are standard. The answer is yes, so instead of using the authors' evaluation system, we may be able to use the OpenSelfSup ecosystem.**

+

+

+# B. Summary of the Benchmark Evaluation Deep Dive

+

+### 1. Scene Classification

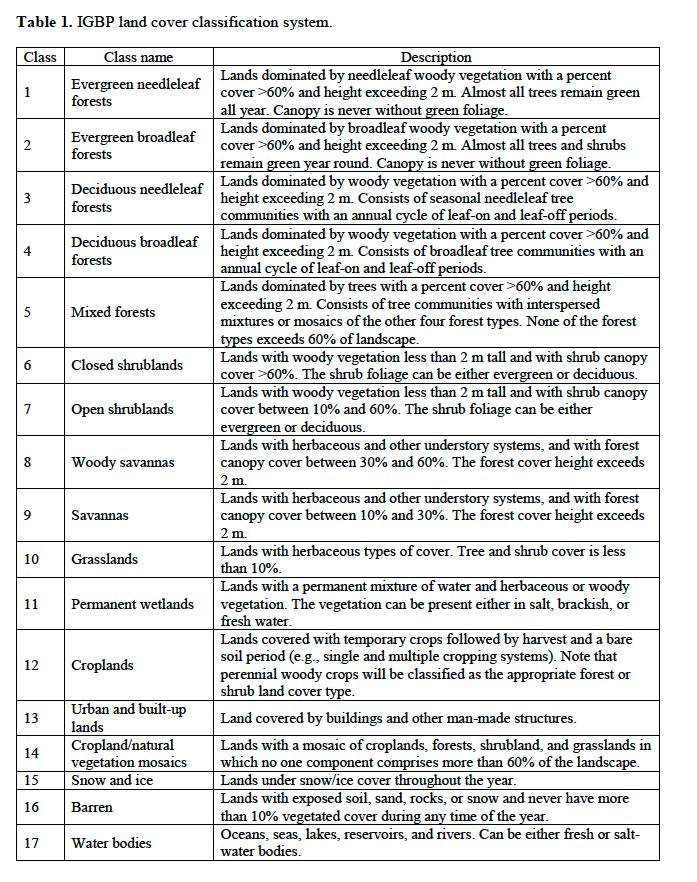

+1. Labels used -- **IGBP land cover scheme**.

+

+> a. the original IGBP land cover scheme has **17** classes.

+

+> b. the simplified version of IGBP has **10** classes, which are derived and consolidated from the original 17 classes.

+

+2. Definition of single-label and multi-label.

+

+> a. For every scene (patch), the labels can be identified from the MODIS land cover images, in which the first band describes the IGBP classification scheme and the remaining three bands cover the LCCS land cover layer, the LCCS land use layer, and the LCCS surface hydrology layer. According to the authors, the overall accuracies of the layers are about 67% (IGBP), 74% (LCCS land cover), 81% (LCCS land use), and 87% (LCCS surface hydrology). **There is known label noise in the SEN12MS dataset, and hence these accuracies constitute the upper bound of the actually achievable predictive power**.

+

+> b. Building on (a), the authors have already processed the labels of each image in SEN12MS with the full IGBP classes and stored them in the file **IGBP_probability_labels.pkl**, which holds the percentage of the image that belongs to each class. Further label types and target classes (single-label or multi-label for a scene (patch)) can be derived from it during training. Below are the parameters we can define on the fly when training.

+

+>> - full classes (17) or simplified classes (10)

+>> - single label -- derived from the probabilities file by applying argmax to select the class with the highest probability in the vector for a scene (patch).

+>> - multi-label -- derived from the probabilities file by applying a threshold to each class in the vector.

+

+> c. For the single-label, the authors also provided the processed one-hot encoding for the vector derived from (b).

+

+>> - single-label_IGBPfull_ClsNum: This file contains scene labels based on the full IGBP land cover scheme, represented by actual class numbers.

+>> - single-label_IGBP_full_OneHot: This file contains scene labels based on the full IGBP land cover scheme, represented by a one-hot vector encoding.

+>> - single-label_IGBPsimple_ClsNum: This file contains scene labels based on the simplified IGBP land cover scheme, represented by actual class numbers.

+>> - single-label_IGBPsimple_OneHot: This file contains scene labels based on the simplified IGBP land cover scheme, represented by a one-hot vector encoding. All these files are available both in plain ASCII (.txt) format, as well as .pkl format.

+

+3. Modalities

+The modalities can be chosen when performing the training. Three options can be evaluated.

+>> - _RGB: only S2 RGB imagery is used

+

+>> - _s2: full multi-spectral s-2 data were used

+

+>> - _s1s2: data fusion-based models analyzing both s-1 and s-2 data

+

+**Check whether _s1s2 would be the most relevant model for comparison with our approach (s1s2 MoCo), or whether it does not matter.**

+

+4. Reporting Metrics

+The authors implemented several metrics in the .py files, but the papers do not appear to report results for the models described above (**or not found, still searching**). However, both the paper and the .py files name the metrics to be reported, which include:

+>> 1. Average Accuracy (get_AA) -- only applied to single-label types.

+>> 2. Overall Accuracy (OA_multi) -- particular for multi-label cases.

+>> 3. F1-score, precision, and recall -- this is a relatively standard measure.

+

+5. Pre-trained models (weights) and optimization parameters are available for download.

+

+

+### 2. Semantic Segmentation (WIP)

+-- This task does not seem straightforward, and the authors did not report everything (based on the paper and repo). Checking ...

+

+WIP

+

+

+

+# C. Our Evaluation choices

+

+### a. methods

+

+1. potential 1 -- using the existing scene classification models and the current evaluation on the sen12ms dataset to evaluate the MoCo model

+2. potential 2 -- using openselfsup to evaluate the sen12ms dataset?? (TBD)

+3. potential 3 -- others ?? (TBD)

+

+### b. samples

+1. full or sub-samples? (distributions)

+2. size

+

+

+# D. Results (WIP on wandb, subject to changes)

+### 1. SEN12MS - Supervised Learning Benchmark - Scene Classification

+These are the authors' pre-trained models described in B-5, downloaded and evaluated.

+

+| Backbone | Label Type | Modalities | Batch size | Epochs | Accuracy (%) | Macro-F1 (%) | Micro-F1 (%) |

+|---|---|---|---|---|---|---|---|

+|DenseNet|single-label|_s1s2|64|100|51.16|50.78|62.90|

+|DenseNet|single-label|_s2|64|100|54.41|52.32|64.74|

+|ResNet50|single-label|_RGB|64|100|45.11|45.16|58.98|

+|ResNet50|single-label|_s1s2|64|100|45.52|53.21|64.66|

+|ResNet50|single-label|_s2|64|100|57.33|53.39|66.35|

+|ResNet50|multi-label|_RGB|64|100|89.86|47.57|66.51|

+|ResNet50|multi-label|_s1s2|64|100|91.22|57.46|71.40|

+|ResNet50|multi-label|_s2|64|100|90.62|56.14|69.88|

+

+

+# E. Appendix

+1. IGBP Land Cover Classification System

+

diff --git a/python b/python

new file mode 100755

index 0000000..17b6a5f

--- /dev/null

+++ b/python

@@ -0,0 +1,4 @@

+#!/usr/bin/env bash

+source /scratch/crguest/miniconda3/etc/profile.d/conda.sh

+conda activate hp120

+python "$@"

\ No newline at end of file

diff --git a/references/model_architectures.md b/references/model_architectures.md

new file mode 100644

index 0000000..1300689

--- /dev/null

+++ b/references/model_architectures.md

@@ -0,0 +1,30 @@

+#### Key model architectures and terms:

+- Supervised training (full dataset)

+ - baseline: download the pre-trained models and evaluate without finetuning.

+- Supervised training (1k dataset)

+ - Supervised: original ResNet50 used by Sen12ms

+ - Supervised_1x1: adding conv1x1 block to the ResNet50 used by Sen12ms

+- Finetune/transfer learning (1k dataset)

+ - Moco: the ResNet50 used by Sen12ms is initialized with the weight from Moco backbone

+ - Moco_1x1: adding conv1x1 block to the ResNet50 used by Sen12ms and both input module and ResNet50 layers are initialized with the weight from Moco

+ - Moco_1x1Rnd: adding conv1x1 block to the ResNet50 used by Sen12ms. ResNet50 layers are initialized with the weight from Moco but input module is initialized with random weights

+- Finetune v2 (1k dataset)

+ - freezing ResNet50 fully or partially does not seem to help with accuracy. We will continue to explore and will share the results once we are sure there is no issue with the implementation.

+

+

+#### Key pretrained models

+

+Some pretrained models:

+

+**Sensor Augmentation**

+- [vivid-resonance-73](https://wandb.ai/cjrd/BDOpenSelfSup-tools/runs/3qjvxo2p)

+- [silvery-oath-7](https://wandb.ai/cal-capstone/hpt2/runs/2rr3864e)

+- sen12_crossaugment_epoch_1000.pth: 1000 epochs

+

+**Data Fusion - Augmentation Set 2**

+- [(optional fusion) crimson-pyramid-70](https://wandb.ai/cal-capstone/hpt4/runs/2iu8yfs6): 200 epochs

+- [(partial fusion) laced-water-61](https://wandb.ai/cal-capstone/hpt4/runs/367tz8vs) 200 epochs, 32K

+- [(partial fusion) visionary-lake-62](https://wandb.ai/cal-capstone/hpt4/runs/1srlc7jr/overview?workspace=user-taeil): should be deprecated; it uses a different number of epochs from the other pretrained models

+- [(full fusion) electric-mountain-33](https://wandb.ai/cal-capstone/hpt4/runs/ak0xdbfu)

+

+**Data Fusion - Augmentation Set 1**

\ No newline at end of file

diff --git a/references/setup.md b/references/setup.md

deleted file mode 100644

index 728009e..0000000

--- a/references/setup.md

+++ /dev/null

@@ -1,160 +0,0 @@

-

-

-## (optional) GPU instance

-

-Use `Deep Learning AMI (Ubuntu 18.04) Version 40.0` AMI

-- on us-west-2, ami-084f81625fbc98fa4

-- additional disk may be required for data

-

-Once logged in

-```

-# update conda to the latest

-conda update -n base conda

-

-conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

-

-```

-

-## Installation

-

-**Dependency repo**

-- [modified OpenSelfSup](https://github.com/Berkeley-Data/OpenSelfSup)

-- [modified SEN12MS](https://github.com/Berkeley-Data/SEN12MS)

-- [modified irrigation_detection](https://github.com/Berkeley-Data/irrigation_detection)

-

-```bash

-# clone dependency repo on the same levels as this repo and cd into this repo

-

-# setup environment

-conda create -n hpt python=3.7 ipython

-conda activate hpt

-

-# NOTE: if you are not using CUDA 10.2, you need to change the 10.2 in this command appropriately. Make sure to use torch 1.6.0

-# (check CUDA version with e.g. `cat /usr/local/cuda/version.txt`)

-# latest

-conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

-

-# 1.6 torch (no support for torchvision transform on tensor)

-conda install pytorch==1.6.0 torchvision==0.7.0 cudatoolkit=10.2 -c pytorch

-#colorado machine

-conda install pytorch==1.2.0 torchvision==0.7.0 cudatoolkit=10.2 -c pytorch

-

-# install local submodules

-cd OpenSelfSup

-pip install -v -e .

-```

-

-## Data installation

-

-Installing and setting up all 16 datsets is a bit of work, so this tutorial shows how to install and setup RESISC-45, and provides links to repeat those steps with other datasets.

-

-### RESISC-45

-RESISC-45 contains 31,500 aerial images, covering 45 scene classes with 700 images in each class.

-

-``` shell

-# cd to the directory where you want the data, $DATA

-wget -q https://bit.ly/3pfkHYp -O resisc45.tar.gz

-md5sum resisc45.tar.gz # this should be 964dafcfa2dff0402d0772514fb4540b

-tar xf resisc45.tar.gz

-

-mkdir ~/data

-mv resisc45 ~/data

-

-# replace/set $DATA and $CODE as appropriate

-# e.g., ln -s /home/ubuntu/data/resisc45 /home/ubuntu/hpt/OpenSelfSup/data/resisc45/all

-ln -s $DATA/resisc45 $CODE/OpenSelfSup/data/resisc45/all

-

-e.g., ln -s /home/ubuntu/data/resisc45 /home/ubuntu/hpt/OpenSelfSup/data/resisc45/all

-```

-

-### Download Pretrained Models

-``` shell

-cd OpenSelfSup/data/basetrain_chkpts/

-./download-pretrained-models.sh

-```

-

-## Verify Install With RESISC DataSet

-[OpenSelfSup](https://github.com/Berkeley-Data/OpenSelfSup)

-

-Check installation by pretraining using mocov2, extracting the model weights, evaluating the representations, and then viewing the results on tensorboard or [wandb](https://wandb.ai/cal-capstone/hpt):

-

-

-```bash

-export WANDB_API_KEY=

-export WANDB_ENTITY=cal-capstone

-export WANDB_PROJECT=hpt2

-#export WANDB_MODE=dryrun

-

-cd OpenSelfSup

-

-# Sanity check with single train and single epoch

-CUDA_VISIBLE_DEVICES=1 ./tools/single_train.sh configs/selfsup/moco/r50_v2_resisc_in_basetrain_20ep.py --debug

-

-CUDA_VISIBLE_DEVICES=1 ./tools/single_train.sh /scratch/crguest/OpenSelfSup/configs/selfsup/moco/r50_v2_sen12ms_in_basetrain_20ep.py --work_dir work_dirs/selfsup/moco/r50_v2_sen12ms_in_basetrain_20ep/ --debug

-

-# Sanity check: MoCo for 20 epoch on 4 gpus

-./tools/dist_train.sh configs/selfsup/moco/r50_v2_resisc_in_basetrain_20ep.py 4

-

-# if debugging, use

-tools/train.py configs/selfsup/moco/r50_v2_resisc_in_basetrain_1ep.py --work_dir work_dirs/selfsup/moco/r50_v2_resisc_in_basetrain_1ep/ --debug

-

-# make some variables so its clear what's happening

-CHECKPOINT=work_dirs/selfsup/moco/r50_v2_resisc_in_basetrain_20ep/epoch_20.pth

-BACKBONE=work_dirs/selfsup/moco/r50_v2_resisc_in_basetrain_20ep/epoch_20_moco_in_basetrain.pth

-# Extract the backbone

-python tools/extract_backbone_weights.py ${CHECKPOINT} ${BACKBONE}

-

-# Evaluate the representations

-./benchmarks/dist_train_linear.sh configs/benchmarks/linear_classification/resisc45/r50_last.py ${BACKBONE}

-

-# View the results (optional if wandb is not configured)

-cd work_dirs

-# you may need to install tensorboard

-tensorboard --logdir .

-```

-

-

-## Verify Install With SEN12MS Dataset

-[OpenSelfSup](https://github.com/Berkeley-Data/OpenSelfSup)

-

-Check installation by pretraining using mocov2, extracting the model weights, evaluating the representations, and then viewing the results on tensorboard or [wandb](https://wandb.ai/cal-capstone/hpt):

-

-```bash

-export WANDB_API_KEY=

-export WANDB_ENTITY=cal-capstone

-export WANDB_PROJECT=hpt2

-

-cd OpenSelfSup

-

-# single GPU training

-CUDA_VISIBLE_DEVICES=1 ./tools/single_train.sh configs/selfsup/moco/r50_v2_sen12ms_in_basetrain_20ep.py --debug

-

-CUDA_VISIBLE_DEVICES=1 ./tools/single_train.sh configs/selfsup/moco/r50_v2_sen12ms_in_fulltrain_20ep.py --debug

-

-

-# command for remote debugging, use full path

-python /scratch/crguest/OpenSelfSup/tools/train.py /scratch/crguest/OpenSelfSup/configs/selfsup/moco/r50_v2_sen12ms_in_fulltrain_20ep.py --debug

-

-CUDA_VISIBLE_DEVICES=1 python ./tools/single_train.sh configs/selfsup/moco/r50_v2_sen12ms_in_fulltrain_20ep.py --debug

-

-# Sanity check: MoCo for 20 epoch on 4 gpus

-#CUDA_VISIBLE_DEVICES=0,1,2,3

-CUDA_VISIBLE_DEVICES=1 ./tools/dist_train.sh configs/selfsup/moco/r50_v2_sen12ms_in_basetrain_20ep.py 4

-

-# distributed training

-#CUDA_VISIBLE_DEVICES=0,1,2,3

-./tools/dist_train.sh configs/selfsup/moco/r50_v2_sen12ms_in_fulltrain_20ep.py 4

-

-BACKBONE=work_dirs/selfsup/moco/r50_v2_sen12ms_in_basetrain_20ep/epoch_20_moco_in_baseline.pth

-# method 1: from working dir

-CHECKPOINT=work_dirs/selfsup/moco/r50_v2_resisc_in_basetrain_20ep/epoch_20.pth

-# method 2: from W&B, {projectid}/{W&B run id}

-CHECKPOINT=hpt2/3l4yg63k

-

-# Extract the backbone

-python tools/extract_backbone_weights.py ${BACKBONE} ${CHECKPOINT}

-

-# Evaluate the representations

-./benchmarks/dist_train_linear.sh configs/benchmarks/linear_classification/resisc45/r50_last.py ${BACKBONE}

-

-```

\ No newline at end of file

diff --git a/references/setup_pretraining.md b/references/setup_pretraining.md

new file mode 100644

index 0000000..e9e2ae3

--- /dev/null

+++ b/references/setup_pretraining.md

@@ -0,0 +1,125 @@

+

+

+## (optional) GPU instance

+

+Use `Deep Learning AMI (Ubuntu 18.04) Version 40.0` AMI

+- on us-west-2, ami-084f81625fbc98fa4

+- additional disk may be required for data

+

+Once logged in

+```

+# update conda to the latest

+conda update -n base conda

+

+conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

+

+```

+

+## Installation

+

+**Dependency repo**

+- [modified OpenSelfSup](https://github.com/Berkeley-Data/OpenSelfSup)

+- [modified SEN12MS](https://github.com/Berkeley-Data/SEN12MS)

+- [modified irrigation_detection](https://github.com/Berkeley-Data/irrigation_detection)

+

+```bash

+# clone the dependency repos at the same level as this repo, then cd into this repo

+

+# setup environment

+conda create -n hpt python=3.7 ipython

+conda activate hpt

+

+# NOTE: if you are not using CUDA 10.2, you need to change the 10.2 in this command appropriately. Make sure to use torch 1.6.0

+# (check CUDA version with e.g. `cat /usr/local/cuda/version.txt`)

+

+# latest torch

+conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

+

+# 1.6 torch (no support for torchvision transform on tensor)

+conda install pytorch==1.6.0 torchvision==0.7.0 cudatoolkit=10.2 -c pytorch

+

+#llano machine

+conda install pytorch==1.2.0 torchvision==0.7.0 cudatoolkit=10.2 -c pytorch

+

+# install local submodules

+cd OpenSelfSup

+pip install -v -e .

+```

+

+## Data installation

+

+Installing and setting up all 16 datasets is a bit of work, so this tutorial shows how to install and set up RESISC-45, and provides links to repeat those steps with other datasets.

+

+### RESISC-45

+RESISC-45 contains 31,500 aerial images, covering 45 scene classes with 700 images in each class.

+

+``` shell

+# cd to the directory where you want the data, $DATA

+wget -q https://bit.ly/3pfkHYp -O resisc45.tar.gz

+md5sum resisc45.tar.gz # this should be 964dafcfa2dff0402d0772514fb4540b

+tar xf resisc45.tar.gz

+

+mkdir ~/data

+mv resisc45 ~/data

+

+# replace/set $DATA and $CODE as appropriate

+# e.g., ln -s /home/ubuntu/data/resisc45 /home/ubuntu/OpenSelfSup/data/resisc45/all

+ln -s $DATA/resisc45 $CODE/OpenSelfSup/data/resisc45/all

+

+# e.g., ln -s /home/ubuntu/data/resisc45 /home/ubuntu/hpt/OpenSelfSup/data/resisc45/all

+```

+

+### Download Pretrained Models

+``` shell

+tools/download-pretrained-models.sh

+mkdir OpenSelfSup/data/basetrain_chkpts

+mv

+```

+

+## Verify Install With RESISC DataSet

+[OpenSelfSup](https://github.com/Berkeley-Data/OpenSelfSup)

+

+Check installation by pretraining using mocov2, extracting the model weights, evaluating the representations, and then viewing the results on tensorboard or [wandb](https://wandb.ai/cal-capstone/hpt):

+

+

+```bash

+cd OpenSelfSup

+

+export CUDA_VISIBLE_DEVICES=0,1,2,3

+

+# Sanity check with single train and single epoch

+./tools/single_train.sh configs/selfsup/moco/r50_v2_resisc_in_basetrain_1ep.py --debug

+

+# Sanity check: MoCo for 20 epochs on 4 GPUs

+ ./tools/dist_train.sh configs/selfsup/moco/r50_v2_resisc_in_basetrain_20ep.py 4

+```

+

+

+## Set up submodules for the SEN12MS and OpenSelfSup repos

+

+Cloning

+```console

+git clone --recurse-submodules https://github.com/Berkeley-Data/hpt.git

+

+```

+

+or alternatively

+```

+git submodule init

+git submodule update

+```

+

+additional config

+```

+git config push.recurseSubmodules on-demand

+# show status including submodule

+git config status.submodulesummary 1

+```

+

+update

+```

+git submodule update --remote

+```

+

+For more info: [7.11 Git Tools - Submodules](https://git-scm.com/book/en/v2/Git-Tools-Submodules)

+

\ No newline at end of file

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/base-imagenet-config.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/base-imagenet-config.py

new file mode 100644

index 0000000..4059a92

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/base-imagenet-config.py

@@ -0,0 +1,84 @@

+_base_ = '../../base.py'

+# model settings

+model = dict(

+ type='MOCO',

+ pretrained=None,

+ queue_len=65536,

+ feat_dim=128,

+ momentum=0.999,

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ in_channels=3,

+ out_indices=[4], # 0: conv-1, x: stage-x

+ norm_cfg=dict(type='BN')),

+ neck=dict(

+ type='NonLinearNeckV1',

+ in_channels=2048,

+ hid_channels=2048,

+ out_channels=128,

+ with_avg_pool=True),

+ head=dict(type='ContrastiveHead', temperature=0.2))

+# dataset settings

+data_source_cfg = dict(

+ type='ImageNet',

+ memcached=False,

+ mclient_path='/not/used')

+

+data_train_list = "data/imagenet/meta/train+val.txt"

+data_train_root = "data/imagenet"

+

+dataset_type = 'ContrastiveDataset'

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224, scale=(0.2, 1.)),

+ dict(

+ type='RandomAppliedTrans',

+ transforms=[

+ dict(

+ type='ColorJitter',

+ brightness=0.4,

+ contrast=0.4,

+ saturation=0.4,

+ hue=0.4)

+ ],

+ p=0.8),

+ dict(type='RandomGrayscale', p=0.2),

+ dict(

+ type='RandomAppliedTrans',

+ transforms=[

+ dict(

+ type='GaussianBlur',

+ sigma_min=0.1,

+ sigma_max=2.0)

+ ],

+ p=0.5),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=256,

+ workers_per_gpu=2,

+ drop_last=True,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline))

+# optimizer

+optimizer = dict(type='SGD', lr=0.03, weight_decay=0.0001, momentum=0.9)

+# learning policy

+lr_config = dict(policy='CosineAnnealing', min_lr=0.)

+

+# cjrd added this flag, since OSS didn't support training by iters(?)

+by_iter = True

+

+log_config = dict(

+ interval=25,

+ by_epoch=False,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=False),

+ dict(type='TensorboardLoggerHook', by_epoch=False)

+ ])

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/2500-iter-0_001-lr-finetune.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/2500-iter-0_001-lr-finetune.py

new file mode 100644

index 0000000..bf2a5cb

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/2500-iter-0_001-lr-finetune.py

@@ -0,0 +1,109 @@

+_base_ = "finetune-eval-base.py"

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/no/matter',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+

+data_train_list = "data/imagenet/meta/train-1000.txt"

+data_train_root = 'data/imagenet'

+

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = 'data/imagenet'

+

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = 'data/imagenet'

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=64, # x4 from update_interval

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=False,

+ initial=False,

+ interval=25,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ dataset=data['test'],

+ by_epoch=False,

+ initial=False,

+ interval=25,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+]

+

+by_iter = True

+

+# learning policy

+lr_config = dict(

+ by_epoch=False,

+ policy='step',

+ step=[833,1667],

+ gamma=0.1 # multiply LR by this number at each step

+)

+

+# momentum and weight decay from VTAB and IDRL

+optimizer = dict(type='SGD', lr=0.001, momentum=0.9, weight_decay=0.,

+ paramwise_options={r'\Ahead.': dict(lr_mult=100)})

+

+

+# runtime settings

+# total iters or total epochs

+total_iters=2500

+checkpoint_config = dict(interval=2500)

+

+log_config = dict(

+ interval=1,

+ by_epoch=False,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=False),

+ dict(type='TensorboardLoggerHook', by_epoch=False)

+ ])

+

+optimizer_config = dict(update_interval=4)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/2500-iter-0_01-lr-finetune.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/2500-iter-0_01-lr-finetune.py

new file mode 100644

index 0000000..46dd647

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/2500-iter-0_01-lr-finetune.py

@@ -0,0 +1,109 @@

+_base_ = "finetune-eval-base.py"

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/no/matter',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+

+data_train_list = "data/imagenet/meta/train-1000.txt"

+data_train_root = 'data/imagenet'

+

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = 'data/imagenet'

+

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = 'data/imagenet'

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=64, # x4 from update_interval

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=False,

+ initial=False,

+ interval=25,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ dataset=data['test'],

+ by_epoch=False,

+ initial=False,

+ interval=25,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+]

+

+by_iter = True

+

+# learning policy

+lr_config = dict(

+ by_epoch=False,

+ policy='step',

+ step=[833,1667],

+ gamma=0.1 # multiply LR by this number at each step

+)

+

+# momentum and weight decay from VTAB and IDRL

+optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.,

+ paramwise_options={r'\Ahead.': dict(lr_mult=100)})

+

+

+# runtime settings

+# total iters or total epochs

+total_iters=2500

+checkpoint_config = dict(interval=2500)

+

+log_config = dict(

+ interval=1,

+ by_epoch=False,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=False),

+ dict(type='TensorboardLoggerHook', by_epoch=False)

+ ])

+

+optimizer_config = dict(update_interval=4)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/90-epoch-0_001-lr-finetune.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/90-epoch-0_001-lr-finetune.py

new file mode 100644

index 0000000..3e84fed

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/90-epoch-0_001-lr-finetune.py

@@ -0,0 +1,109 @@

+_base_ = "finetune-eval-base.py"

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/no/matter',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+

+data_train_list = "data/imagenet/meta/train-1000.txt"

+data_train_root = 'data/imagenet'

+

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = 'data/imagenet'

+

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = 'data/imagenet'

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=64, # x4 from update_interval

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=True,

+ initial=False,

+ interval=1,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ dataset=data['test'],

+ by_epoch=True,

+ initial=False,

+ interval=1,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+]

+

+by_iter = False

+

+# learning policy

+lr_config = dict(

+ by_epoch=True,

+ policy='step',

+ step=[30,60],

+ gamma=0.1 # multiply LR by this number at each step

+)

+

+# momentum and weight decay from VTAB and IDRL

+optimizer = dict(type='SGD', lr=0.001, momentum=0.9, weight_decay=0.,

+ paramwise_options={r'\Ahead.': dict(lr_mult=100)})

+

+

+# runtime settings

+# total iters or total epochs

+total_epochs=90

+checkpoint_config = dict(interval=90)

+

+log_config = dict(

+ interval=1,

+ by_epoch=True,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=True),

+ dict(type='TensorboardLoggerHook', by_epoch=True)

+ ])

+

+optimizer_config = dict(update_interval=4)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/90-epoch-0_01-lr-finetune.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/90-epoch-0_01-lr-finetune.py

new file mode 100644

index 0000000..b093dcf

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/90-epoch-0_01-lr-finetune.py

@@ -0,0 +1,109 @@

+_base_ = "finetune-eval-base.py"

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/no/matter',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+

+data_train_list = "data/imagenet/meta/train-1000.txt"

+data_train_root = 'data/imagenet'

+

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = 'data/imagenet'

+

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = 'data/imagenet'

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=64, # x4 from update_interval

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=True,

+ initial=False,

+ interval=1,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ dataset=data['test'],

+ by_epoch=True,

+ initial=False,

+ interval=1,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+]

+

+by_iter = False

+

+# learning policy

+lr_config = dict(

+ by_epoch=True,

+ policy='step',

+ step=[30,60],

+ gamma=0.1 # multiply LR by this number at each step

+)

+

+# momentum and weight decay from VTAB and IDRL

+optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.,

+ paramwise_options={r'\Ahead.': dict(lr_mult=100)})

+

+

+# runtime settings

+# total iters or total epochs

+total_epochs=90

+checkpoint_config = dict(interval=90)

+

+log_config = dict(

+ interval=1,

+ by_epoch=True,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=True),

+ dict(type='TensorboardLoggerHook', by_epoch=True)

+ ])

+

+optimizer_config = dict(update_interval=4)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/finetune-eval-base.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/finetune-eval-base.py

new file mode 100644

index 0000000..78b99d2

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/1000-labels/finetune-eval-base.py

@@ -0,0 +1,30 @@

+train_cfg = {}

+test_cfg = {}

+optimizer_config = dict() # grad_clip, coalesce, bucket_size_mb

+# yapf:disable

+# yapf:enable

+# runtime settings

+dist_params = dict(backend='nccl')

+cudnn_benchmark = True

+log_level = 'INFO'

+load_from = None

+resume_from = None

+workflow = [('train', 1)]

+

+# model settings

+model = dict(

+ type='Classification',

+ pretrained=None,

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ in_channels=3,

+ out_indices=[4], # 0: conv-1, x: stage-x

+ norm_cfg=dict(type='BN')),

+ head=dict(

+ type='ClsHead', with_avg_pool=True, in_channels=2048,

+ num_classes=10,

+

+ )

+)

+prefetch = False

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/2500-iter-0_001-lr-finetune.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/2500-iter-0_001-lr-finetune.py

new file mode 100644

index 0000000..1f82c17

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/2500-iter-0_001-lr-finetune.py

@@ -0,0 +1,109 @@

+_base_ = "finetune-eval-base.py"

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/no/matter',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+

+data_train_list = "data/imagenet/meta/train.txt"

+data_train_root = 'data/imagenet'

+

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = 'data/imagenet'

+

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = 'data/imagenet'

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=64, # x4 from update_interval

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=False,

+ initial=False,

+ interval=25,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ dataset=data['test'],

+ by_epoch=False,

+ initial=False,

+ interval=25,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+]

+

+by_iter = True

+

+# learning policy

+lr_config = dict(

+ by_epoch=False,

+ policy='step',

+ step=[833,1667],

+ gamma=0.1 # multiply LR by this number at each step

+)

+

+# momentum and weight decay from VTAB and IDRL

+optimizer = dict(type='SGD', lr=0.001, momentum=0.9, weight_decay=0.,

+ paramwise_options={r'\Ahead.': dict(lr_mult=100)})

+

+

+# runtime settings

+# total iters or total epochs

+total_iters=2500

+checkpoint_config = dict(interval=2500)

+

+log_config = dict(

+ interval=1,

+ by_epoch=False,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=False),

+ dict(type='TensorboardLoggerHook', by_epoch=False)

+ ])

+

+optimizer_config = dict(update_interval=4)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/2500-iter-0_01-lr-finetune.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/2500-iter-0_01-lr-finetune.py

new file mode 100644

index 0000000..2801f70

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/2500-iter-0_01-lr-finetune.py

@@ -0,0 +1,109 @@

+_base_ = "finetune-eval-base.py"

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/no/matter',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+

+data_train_list = "data/imagenet/meta/train.txt"

+data_train_root = 'data/imagenet'

+

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = 'data/imagenet'

+

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = 'data/imagenet'

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=64, # x4 from update_interval

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=False,

+ initial=False,

+ interval=25,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ dataset=data['test'],

+ by_epoch=False,

+ initial=False,

+ interval=25,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+]

+

+by_iter = True

+

+# learning policy

+lr_config = dict(

+ by_epoch=False,

+ policy='step',

+ step=[833,1667],

+ gamma=0.1 # multiply LR by this number at each step

+)

+

+# momentum and weight decay from VTAB and IDRL

+optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.,

+ paramwise_options={r'\Ahead.': dict(lr_mult=100)})

+

+

+# runtime settings

+# total iters or total epochs

+total_iters=2500

+checkpoint_config = dict(interval=2500)

+

+log_config = dict(

+ interval=1,

+ by_epoch=False,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=False),

+ dict(type='TensorboardLoggerHook', by_epoch=False)

+ ])

+

+optimizer_config = dict(update_interval=4)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/90-epoch-0_001-lr-finetune.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/90-epoch-0_001-lr-finetune.py

new file mode 100644

index 0000000..54e7cf5

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/90-epoch-0_001-lr-finetune.py

@@ -0,0 +1,109 @@

+_base_ = "finetune-eval-base.py"

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/no/matter',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+

+data_train_list = "data/imagenet/meta/train.txt"

+data_train_root = 'data/imagenet'

+

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = 'data/imagenet'

+

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = 'data/imagenet'

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=64, # x4 from update_interval

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=True,

+ initial=False,

+ interval=1,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ dataset=data['test'],

+ by_epoch=True,

+ initial=False,

+ interval=1,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+]

+

+by_iter = False

+

+# learning policy

+lr_config = dict(

+ by_epoch=True,

+ policy='step',

+ step=[30,60],

+ gamma=0.1 # multiply LR by this number at each step

+)

+

+# momentum and weight decay from VTAB and IDRL

+optimizer = dict(type='SGD', lr=0.001, momentum=0.9, weight_decay=0.,

+ paramwise_options={r'\Ahead.': dict(lr_mult=100)})

+

+

+# runtime settings

+# total iters or total epochs

+total_epochs=90

+checkpoint_config = dict(interval=90)

+

+log_config = dict(

+ interval=1,

+ by_epoch=True,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=True),

+ dict(type='TensorboardLoggerHook', by_epoch=True)

+ ])

+

+optimizer_config = dict(update_interval=4)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/90-epoch-0_01-lr-finetune.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/90-epoch-0_01-lr-finetune.py

new file mode 100644

index 0000000..b5b8809

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/90-epoch-0_01-lr-finetune.py

@@ -0,0 +1,109 @@

+_base_ = "finetune-eval-base.py"

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/no/matter',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+

+data_train_list = "data/imagenet/meta/train.txt"

+data_train_root = 'data/imagenet'

+

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = 'data/imagenet'

+

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = 'data/imagenet'

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=64, # x4 from update_interval

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=True,

+ initial=False,

+ interval=1,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ dataset=data['test'],

+ by_epoch=True,

+ initial=False,

+ interval=1,

+ imgs_per_gpu=32,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+]

+

+by_iter = False

+

+# learning policy

+lr_config = dict(

+ by_epoch=True,

+ policy='step',

+ step=[30,60],

+ gamma=0.1 # multiply LR by this number at each step

+)

+

+# momentum and weight decay from VTAB and IDRL

+optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.,

+ paramwise_options={r'\Ahead.': dict(lr_mult=100)})

+

+

+# runtime settings

+# total iters or total epochs

+total_epochs=90

+checkpoint_config = dict(interval=90)

+

+log_config = dict(

+ interval=1,

+ by_epoch=True,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=True),

+ dict(type='TensorboardLoggerHook', by_epoch=True)

+ ])

+

+optimizer_config = dict(update_interval=4)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/finetune-eval-base.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/finetune-eval-base.py

new file mode 100644

index 0000000..78b99d2

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/finetune/all-labels/finetune-eval-base.py

@@ -0,0 +1,30 @@

+train_cfg = {}

+test_cfg = {}

+optimizer_config = dict() # grad_clip, coalesce, bucket_size_mb

+# yapf:disable

+# yapf:enable

+# runtime settings

+dist_params = dict(backend='nccl')

+cudnn_benchmark = True

+log_level = 'INFO'

+load_from = None

+resume_from = None

+workflow = [('train', 1)]

+

+# model settings

+model = dict(

+ type='Classification',

+ pretrained=None,

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ in_channels=3,

+ out_indices=[4], # 0: conv-1, x: stage-x

+ norm_cfg=dict(type='BN')),

+ head=dict(

+ type='ClsHead', with_avg_pool=True, in_channels=2048,

+ num_classes=10,

+

+ )

+)

+prefetch = False

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/50-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/50-iters.py

new file mode 100644

index 0000000..b8a01db

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/50-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# this will merge with the parent

+model=dict(pretrained='data/basetrain_chkpts/imagenet_r50_supervised.pth')

+

+# epoch related

+total_iters=50

+checkpoint_config = dict(interval=total_iters)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/500-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/500-iters.py

new file mode 100644

index 0000000..3548ce6

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/500-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# this will merge with the parent

+model=dict(pretrained='data/basetrain_chkpts/imagenet_r50_supervised.pth')

+

+# epoch related

+total_iters=500

+checkpoint_config = dict(interval=total_iters)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/5000-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/5000-iters.py

new file mode 100644

index 0000000..399a69d

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/5000-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# this will merge with the parent

+model=dict(pretrained='data/basetrain_chkpts/imagenet_r50_supervised.pth')

+

+# epoch related

+total_iters=5000

+checkpoint_config = dict(interval=total_iters)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/50000-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/50000-iters.py

new file mode 100644

index 0000000..1a1a739

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/imagenet_r50_supervised_basetrain/50000-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# this will merge with the parent

+model=dict(pretrained='data/basetrain_chkpts/imagenet_r50_supervised.pth')

+

+# epoch related

+total_iters=50000

+checkpoint_config = dict(interval=total_iters)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-base.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-base.py

new file mode 100644

index 0000000..3857484

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-base.py

@@ -0,0 +1,120 @@

+_base_ = '../../../base.py'

+# model settings

+model = dict(

+ type='Classification',

+ pretrained=None,

+ with_sobel=False,

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ in_channels=3,

+ out_indices=[4], # 0: conv-1, x: stage-x

+ norm_cfg=dict(type='BN'),

+ frozen_stages=4),

+ head=dict(

+ type='ClsHead', with_avg_pool=True, in_channels=2048,

+ num_classes=10,

+

+ )

+)

+

+# dataset settings

+data_source_cfg = dict(

+ type="ImageNet",

+ memcached=False,

+ mclient_path='/not/used',

+ # this will be ignored if type != ImageListMultihead

+

+)

+

+# used to train the linear classifier

+data_train_list = "data/imagenet/meta/train.txt"

+data_train_root = "data/imagenet"

+

+# used for validation (i.e., picking the final model)

+data_val_list = "data/imagenet/meta/val.txt"

+data_val_root = "data/imagenet"

+

+# used for testing evaluation: we've never seen this data before (not even during pretraining)

+data_test_list = "data/imagenet/meta/test.txt"

+data_test_root = "data/imagenet"

+

+dataset_type = "ClassificationDataset"

+img_norm_cfg = dict(mean=[0.5,0.6,0.7], std=[0.1,0.2,0.3])

+train_pipeline = [

+ dict(type='RandomResizedCrop', size=224),

+ dict(type='RandomHorizontalFlip'),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+test_pipeline = [

+ dict(type='Resize', size=256),

+ dict(type='CenterCrop', size=224),

+ dict(type='ToTensor'),

+ dict(type='Normalize', **img_norm_cfg),

+]

+data = dict(

+ batch_size=512,

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_train_list, root=data_train_root,

+ **data_source_cfg),

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_val_list, root=data_val_root, **data_source_cfg),

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ data_source=dict(

+ list_file=data_test_list, root=data_test_root, **data_source_cfg),

+ pipeline=test_pipeline))

+

+# additional hooks

+custom_hooks = [

+ dict(

+ name="val",

+ type='ValidateHook',

+ dataset=data['val'],

+ by_epoch=False,

+ initial=False,

+ interval=100,

+ imgs_per_gpu=128,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5))),

+ dict(

+ name="test",

+ type='ValidateHook',

+ by_epoch=False,

+ dataset=data['test'],

+ initial=False,

+ interval=100,

+ imgs_per_gpu=128,

+ workers_per_gpu=2,

+ eval_param=dict(topk=(1,5)))

+]

+

+# learning policy

+lr_config = dict(

+ by_epoch=False,

+ policy='step',

+ step=[1651,3333])

+checkpoint_config = dict(interval=5000)

+

+# runtime settings

+total_iters = 5000

+checkpoint_config = dict(interval=total_iters)

+

+# cjrd added this flag, since OSS didn't support training by iters(?)

+by_iter = True

+

+log_config = dict(

+ interval=10,

+ by_epoch=False,

+ hooks=[

+ dict(type='TextLoggerHook', by_epoch=False),

+ dict(type='TensorboardLoggerHook', by_epoch=False)

+ ])

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s0.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s0.py

new file mode 100644

index 0000000..730e7fa

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s0.py

@@ -0,0 +1,4 @@

+_base_="linear-eval-base.py"

+

+# optimizer

+optimizer = dict(type='SGD', lr=30., momentum=0.9, weight_decay=0.)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s1.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s1.py

new file mode 100644

index 0000000..730e7fa

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s1.py

@@ -0,0 +1,4 @@

+_base_="linear-eval-base.py"

+

+# optimizer

+optimizer = dict(type='SGD', lr=30., momentum=0.9, weight_decay=0.)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s2.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s2.py

new file mode 100644

index 0000000..730e7fa

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/linear-eval/linear-eval-lr-s2.py

@@ -0,0 +1,4 @@

+_base_="linear-eval-base.py"

+

+# optimizer

+optimizer = dict(type='SGD', lr=30., momentum=0.9, weight_decay=0.)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/50-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/50-iters.py

new file mode 100644

index 0000000..94798de

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/50-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# this will merge with the parent

+model=dict(pretrained='data/basetrain_chkpts/moco_v2_800ep.pth')

+

+# epoch related

+total_iters=50

+checkpoint_config = dict(interval=total_iters)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/500-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/500-iters.py

new file mode 100644

index 0000000..0cba86c

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/500-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# this will merge with the parent

+model=dict(pretrained='data/basetrain_chkpts/moco_v2_800ep.pth')

+

+# epoch related

+total_iters=500

+checkpoint_config = dict(interval=total_iters)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/5000-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/5000-iters.py

new file mode 100644

index 0000000..2b29885

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/5000-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# this will merge with the parent

+model=dict(pretrained='data/basetrain_chkpts/moco_v2_800ep.pth')

+

+# epoch related

+total_iters=5000

+checkpoint_config = dict(interval=total_iters)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/50000-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/50000-iters.py

new file mode 100644

index 0000000..6403853

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/moco_v2_800ep_basetrain/50000-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# this will merge with the parent

+model=dict(pretrained='data/basetrain_chkpts/moco_v2_800ep.pth')

+

+# epoch related

+total_iters=50000

+checkpoint_config = dict(interval=total_iters)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/100000-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/100000-iters.py

new file mode 100644

index 0000000..19e030f

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/100000-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# no model override here: model settings are inherited from the parent config

+

+# epoch related

+total_iters=100000

+# save a checkpoint every total_iters//2 iterations (halfway and at the end)

+checkpoint_config = dict(interval=total_iters//2)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/200000-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/200000-iters.py

new file mode 100644

index 0000000..d013f98

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/200000-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# no model override here: model settings are inherited from the parent config

+

+# epoch related

+total_iters=200000

+# save a checkpoint every total_iters//2 iterations (halfway and at the end)

+checkpoint_config = dict(interval=total_iters//2)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/5000-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/5000-iters.py

new file mode 100644

index 0000000..0026346

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/5000-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# no model override here: model settings are inherited from the parent config

+

+# epoch related

+total_iters=5000

+# save a checkpoint every total_iters//2 iterations (halfway and at the end)

+checkpoint_config = dict(interval=total_iters//2)

diff --git a/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/50000-iters.py b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/50000-iters.py

new file mode 100644

index 0000000..5085eea

--- /dev/null

+++ b/src/OpenSelfSup/configs/hpt-pretrain/imagenet/no_basetrain/50000-iters.py

@@ -0,0 +1,8 @@

+_base_="../base-imagenet-config.py"

+

+# no model override here: model settings are inherited from the parent config

+

+# epoch related

+total_iters=50000

+# save a checkpoint every total_iters//2 iterations (halfway and at the end)

+checkpoint_config = dict(interval=total_iters//2)

diff --git a/src/data/__pycache__/dataset.cpython-37.pyc b/src/data/__pycache__/dataset.cpython-37.pyc

new file mode 100644

index 0000000..f83ec2f

Binary files /dev/null and b/src/data/__pycache__/dataset.cpython-37.pyc differ